The real problem with the Take It Down Act is not free speech

🤥 Faked Up #40: America's bill against deepfake nudes doesn't go after the core problem; TikTok grifters claim Obama has impeached Trump; and new research finds that state media labels don't affect perceptions of accuracy.

In FU#34, I wrote that the "AI Jesus Hugs" app PixVerse was a precursor to trivial generation of nonconsensual pornographic videos. Turns out that was too generous: Emanuel Maiberg of 404 Media discovered that the app is already being used to create AI nudes.

This newsletter is a ~6 minute read and contains 48 links.

NEWS

Amazon's AI summary of Mein Kampf reviews calls it "a true work of art." Facebook is running ads for malware that presents as notifications from the platform. AI slop about SpaceX is polluting Google Search. DARPA renewed its commitment to deepfake detection. RFK Jr. spread measles disinformation. Social media accounts promoting a "pro-peace" demonstration in Madrid previously spread Russian disinformation. Vatican press officials are struggling to respond to misinformation about the Pope's death. Chatbots continue to be bad at citations. South Korean legislators may target messaging services beyond Telegram in their probe into deepfake nudes.

TOP STORIES

America's bill against deepfake nudes doesn't go after the core problem

In his address to Congress last week, US President Donald Trump applauded the Take It Down Act, a bill that would criminalize the dissemination of nonconsensual synthetic intimate imagery (NCSII). The law would also require tech platforms to remove any reported NCSII from their services within 48 hours of receiving the report.

Here's Trump:

Our first lady is joined by two impressive young women — very impressive: Haley Ferguson [...] and Elliston Berry, who became a victim of an illicit deepfake image produced by a peer. With Elliston’s help, the Senate just passed the Take It Down Act [...] to criminalize the publication of such images online. This terrible, terrible thing. And once it passes the House, I look forward to signing that bill into law. Thank you. And I’m going to use that bill for myself too, if you don’t mind because nobody gets treated worse than I do online. Nobody.

Let's set aside the narcissism of a 78-year-old man unable to talk about a teenage victim of sexualized digital abuse without making it about himself.

What (understandably) set off alarm bells among First Amendment advocates was Trump's stated intention to use the removal powers in the bill for unrelated political purposes. This led Mike Masnick of Techdirt to conclude that "Congress is building a censorship machine and handing the controls to someone who just promised to abuse it." On The Verge, Adi Robertson speculates that "the FTC would probably have no compunctions about launching a punitive investigation [on Wikipedia] if trolls start spamming it with deepfakes."

Even before Trump's wildly inappropriate pledge, a coalition of civil society organizations had come out against the bill's notice-and-removal provision. I'm not a lawyer, so I'll point readers to FU#8 and to Ben Sobel's comprehensive legal analysis of the constitutional challenges likely to arise if Congress criminalizes deepfake nudes as a form of non-deceptive speech.

Donald Trump's explicit promise to weaponize the Take It Down Act should be taken at face value. But Trump was able to get Meta to dance to his tune on content moderation before he was even sworn in; I'm not sold that he needs this bill to accomplish any of his authoritarian goals.

Setting aside free speech concerns, I'm not sure this bill will actually dismantle the infrastructure enabling the sexual harassment of as many as 6% of American teens.

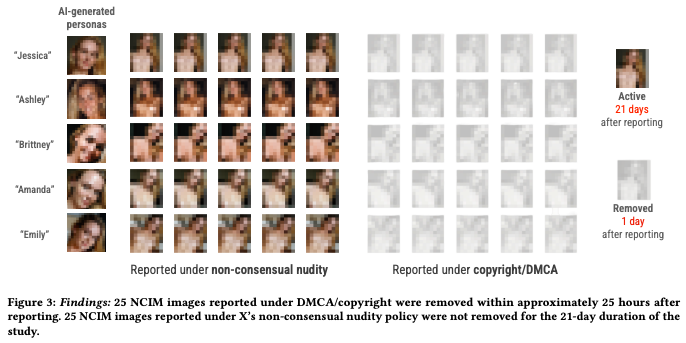

Don't get me wrong: enforcing removal of reported NCSII is valuable. A remarkable audit led by Qiwei Li at the University of Michigan found that X removed all of the AI-generated deepfake nudes that the researchers flagged as copyright violations but none of those they flagged under its nonconsensual nudity policy. This simple experiment shows the power of legally mandated takedown mechanisms, even on lawless platforms like the one Musk owns. The FTC is unlikely to take up any case against X under the current administration, and Breeze Liu's story teaches us that requesting removals of NCSII is still too hard.

But deepfake nudes are as likely to travel via direct messages, transient posts, or offline sharing as they are to be posted somewhere a removal can be requested. Even for someone with a more relaxed approach to balancing free speech against harm reduction, the prospect that this bill might be used to roll back encryption in messaging apps is a chilling one.

More problematic still, the Take It Down Act provides no recourse against the services that enable and monetize the generation of NCSII. Criminalizing the distribution of deepfake nudes will result in cases against teenagers but not against the grown-up AI grifters who make their awful actions possible.

These services include the AI nudifiers that were likely used to target Elliston Berry, as well as dual-use apps that advertise themselves as tools for creating "Jesus Hugs" videos but are actively used to generate deepfake nudes. Deepswap, a service so obviously tied to NCSII generation that it is linked on the home banner of the streaming website MrDeepFakes, had an app on Google Play for years. Dozens of these apps are available on mainstream app stores and advertise with the tech giants.

At the state level, advocates appear to understand this. Minnesota state senator Erin Maye Quade has proposed a bill fining nudifiers as much as $500,000 for every single Minnesotan user of their service. This bill too is likely to be challenged, and it may be that regulation is not the path to combating NCSII. Maybe the answer is to sue the services into oblivion.

Six years into the deepfake nudes epidemic, we watch helplessly as the worst actors continue to operate with impunity and nudifier technology transitions from image to video. We sit powerless as the US President turns the sexualized harassment of hundreds of thousands of teens around the world into a punchline for his own persecution complex. But is doing nothing at all the answer?

The Take It Down Act is not enough, and it will be weaponized. I'm afraid it may also be our best shot at pushing back against deepfake nudes. This timeline sucks.

TikTok network claims Obama just impeached Trump

I found a network of at least 22 TikTok accounts posting that Barack Obama impeached Donald Trump using a "little-known power" reserved for former presidents. This is, of course, bunk. The videos I found have been viewed 2 million times over the past week. (After I reached out to its press office, TikTok deleted all 22 accounts.)

Here are a few examples: