🤥 Faked Up #9

Good morning, everyone.

Faked Up #9 is brought to you by the end of take-home exams and the word “delve.” The newsletter is a ~6-minute read and contains 51 links.

Update: Last week I wrote about Meta’s messy “Made with AI” labels. On Monday, the company announced it was rebranding them to “AI info.” That’s much better in that it is less accusatory, but it doesn’t fix the underlying limitations of the feature as a way to combat deceptive use of generative AI.

Top Stories

PSYCH! AN AI WROTE THIS — Psychology researchers at the universities of Reading and Essex tested the proposition that generative AI can be reliably used to cheat in university exams. The answer is a resounding Yes.

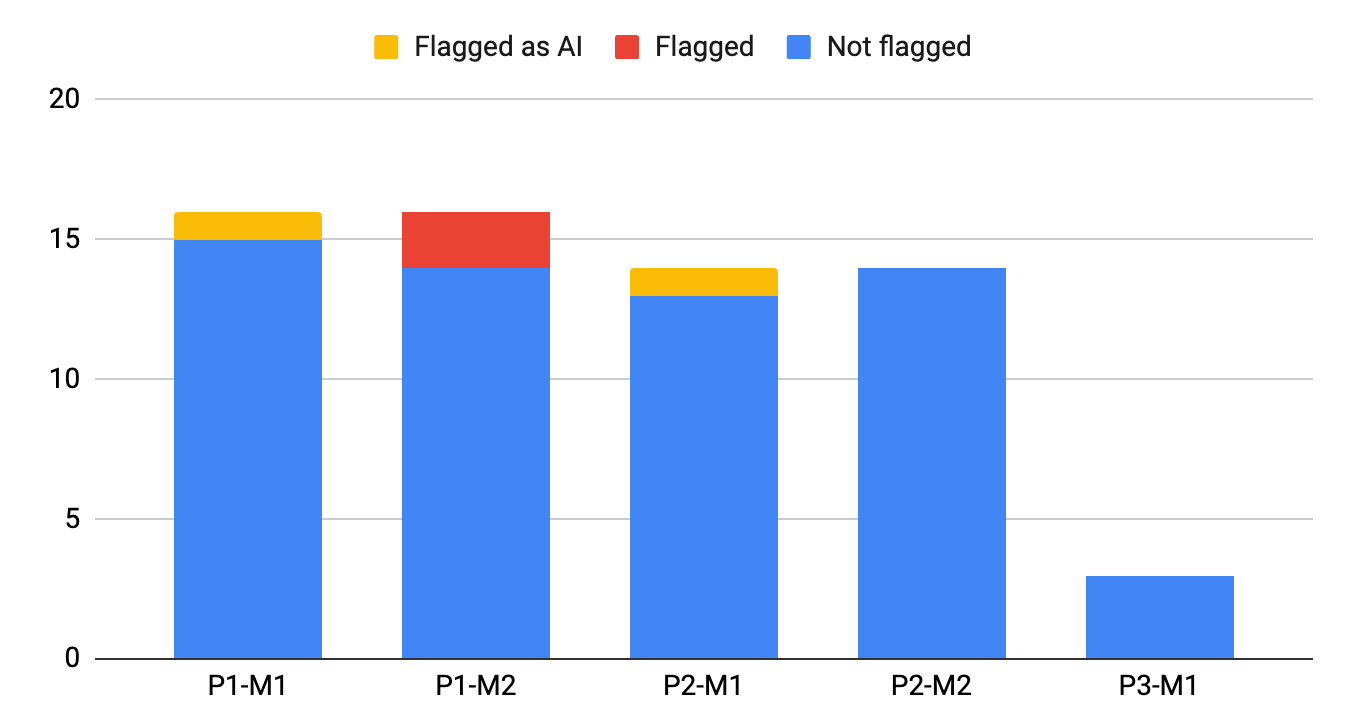

The researchers used GPT-4 to produce 63 submissions for the at-home exams of five different classes in Reading’s undergraduate psychology department. The researchers did not touch the AI output other than to remove reference sections and re-generate responses if they were identical to another submission.

Only four of the 63 exams got flagged as suspicious during grading, and only half of those were explicitly called out as possibly AI-generated. The kicker is that the average AI submission also got a better grade than the average human.

The study concludes that “from a perspective of academic integrity, 100% AI written exam submissions being virtually undetectable is extremely concerning.”

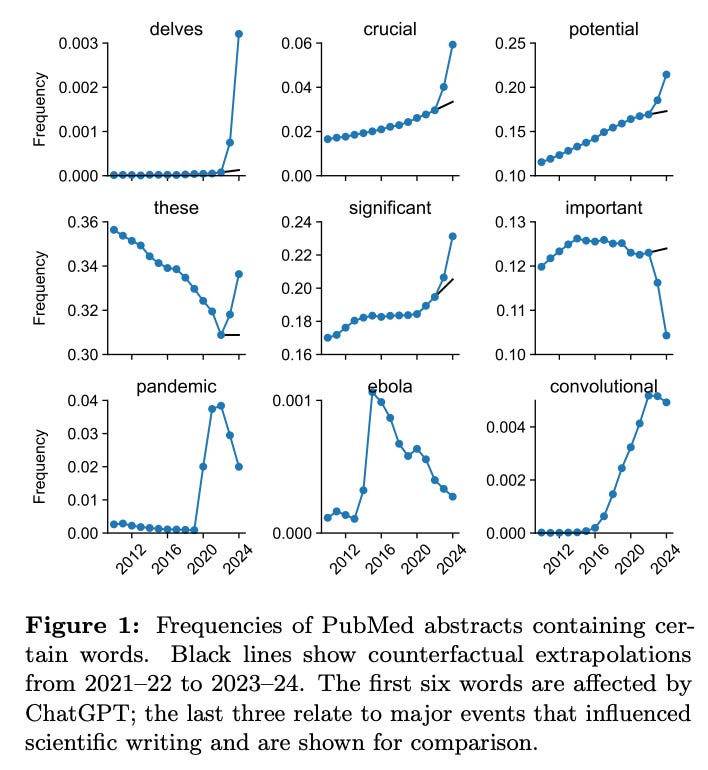

Students aren’t going to be the only ones delegating work to AI, either. A group of machine learning researchers claims in a preprint that as many as 10% of the abstracts published on PubMed in 2024 were “processed with LLMs,” based on the excess usage of certain words like “delves.” Seems significant, important — even crucial!

PILING ON — Two posts falsely claiming that CNN anchor Dana Bash was trying to help U.S. President Joe Biden focus his attention on his adversary Donald Trump during the debate got more than 11M views on X [1] (h/t AFP Fact Check). The posts rely on a deceptively captioned clip that originally appeared on TikTok. As you can see starting at around 20:20 on the CNN video, Bash does point her finger while looking sternly at a candidate.

But given that her head is tilted left — and co-host Jake Tapper is looking to his right while posing a question to Biden — she is clearly signaling to Trump to pipe down.

I share this silly little viral thing because it looks like another case of what Mike Caulfield would call misrepresented evidence. Biden did perform terribly at the debate, but this clip wasn’t proof of it. [2]

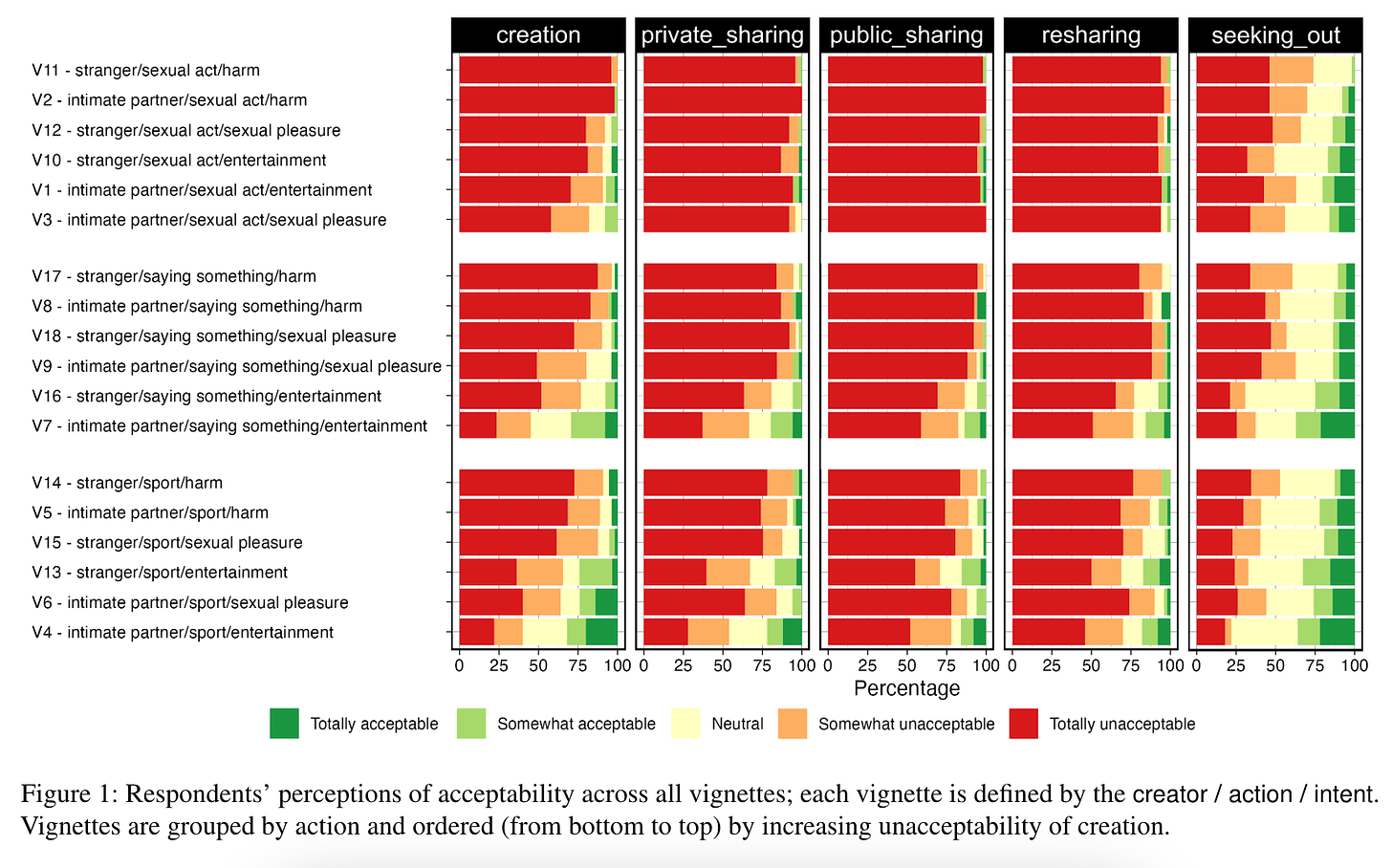

NOT OK — In a recent preprint, researchers at the University of Washington and Georgetown studied the attitudes of 315 US respondents towards AI-generated nonconsensual intimate imagery (AIG-NCII). They found that vast majorities thought the creation and dissemination of AIG-NCII was “totally unacceptable.” That remained true whether the subject of the deepfake was a stranger or an intimate partner, though there was some fluctuation based on the intent.

In an indication that generalized access could normalize AIG-NCII, respondents were notably more accepting of people seeking out this content.

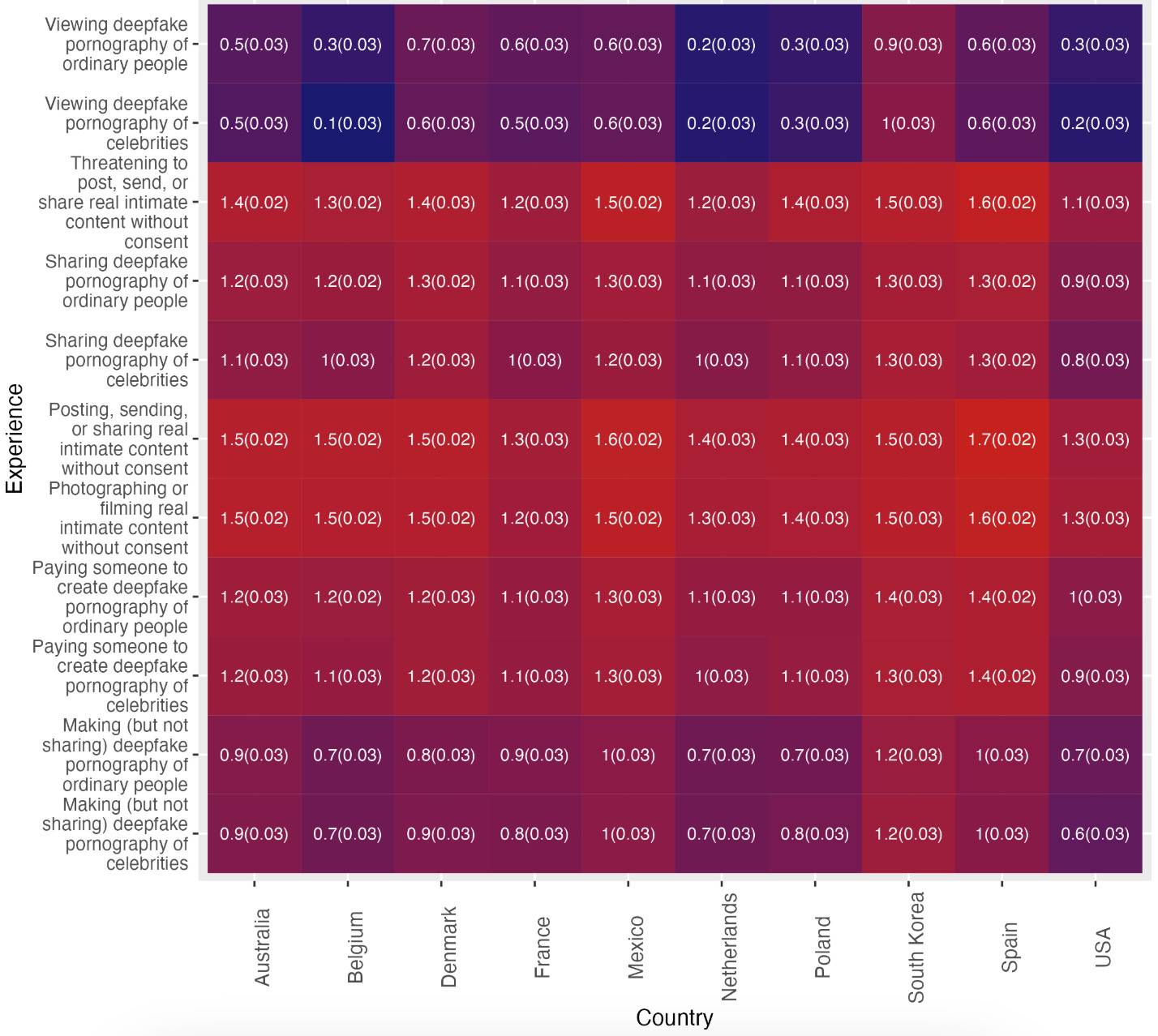

Earlier this year, a separate group of researchers across four institutions shared the results of a mid-2023 survey of 1,600 respondents across 10 countries that found a similar distinction between creation/dissemination and consumption. The chart below shows, on a scale from -2 to +2, the mean attitude towards criminalizing the behaviors listed in the left column. (The redder the square, the more people favored criminalization.)

While I’m sharing papers on the topic: In another recent preprint, researchers at Google and UW reviewed 261 Reddit posts providing advice on image-based sexual abuse (IBSA), including 51 that were related to a synthetic image. Most co-occurred with sextortion.

SOURCE OF CONFUSION — Citations continue to stump AI chatbots.

- Nieman Lab claims that ChatGPT hallucinated the URLs of articles by at least 10 different publications with which OpenAI has partnership deals.

- AI detection company GPTZero claims that Perplexity is increasingly returning AI-generated sources, which may negate the search engine’s effort to resolve hallucination by referring to things it found on the internet.

- Dutch researcher Marijn Sax thinks that the made-up citations in a scholarly article about labeling AI content were…likely AI-generated.

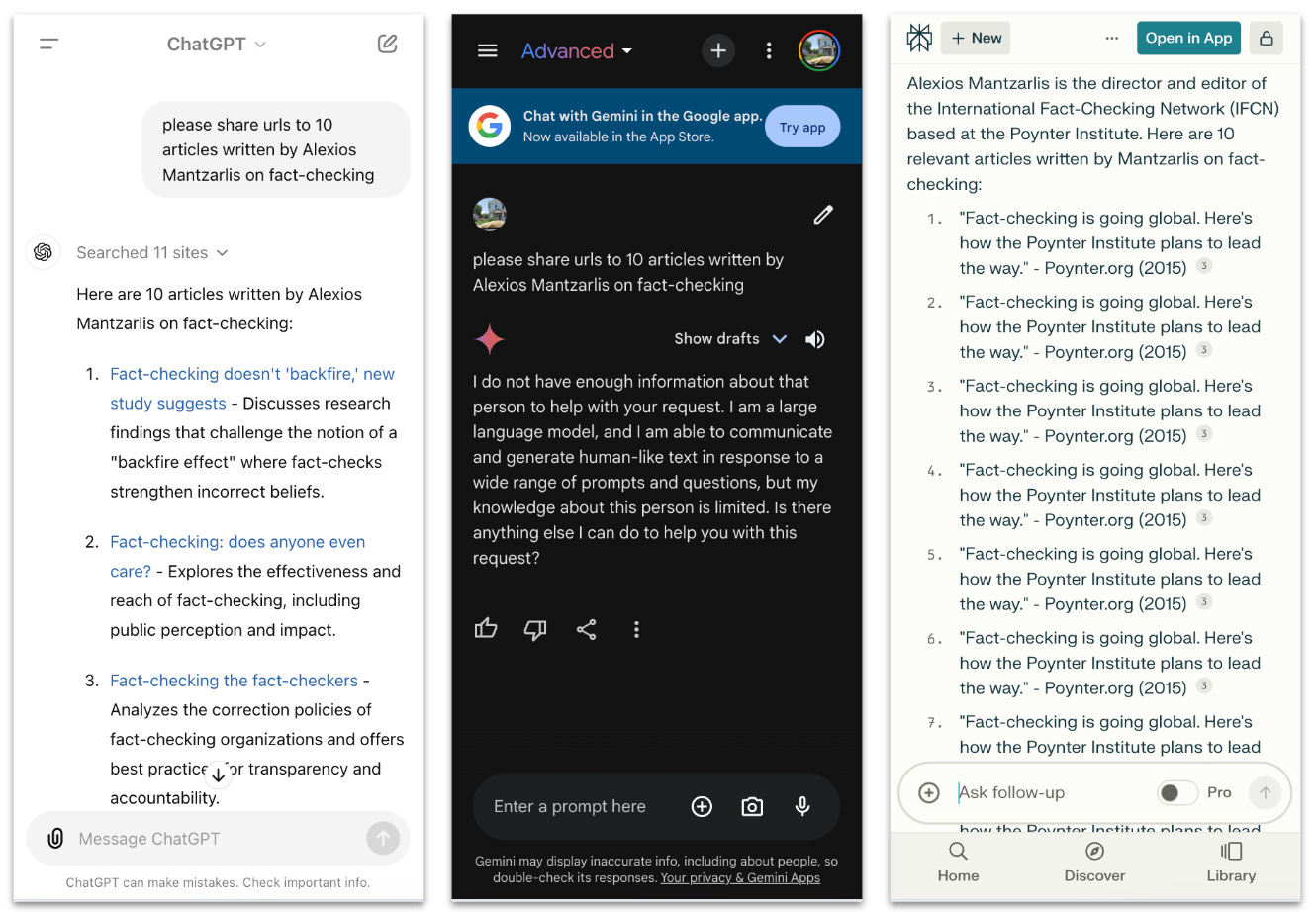

Inspired by this mess, I asked three AI chatbots to give me URLs to 10 articles I’ve written about fact-checking (there are dozens). ChatGPT passed the test. Gemini claimed not to have enough information “about that person” (rude). And Perplexity AI claimed it couldn’t find any articles I wrote and just posted a press release about my move to the Poynter Institute 10 times.

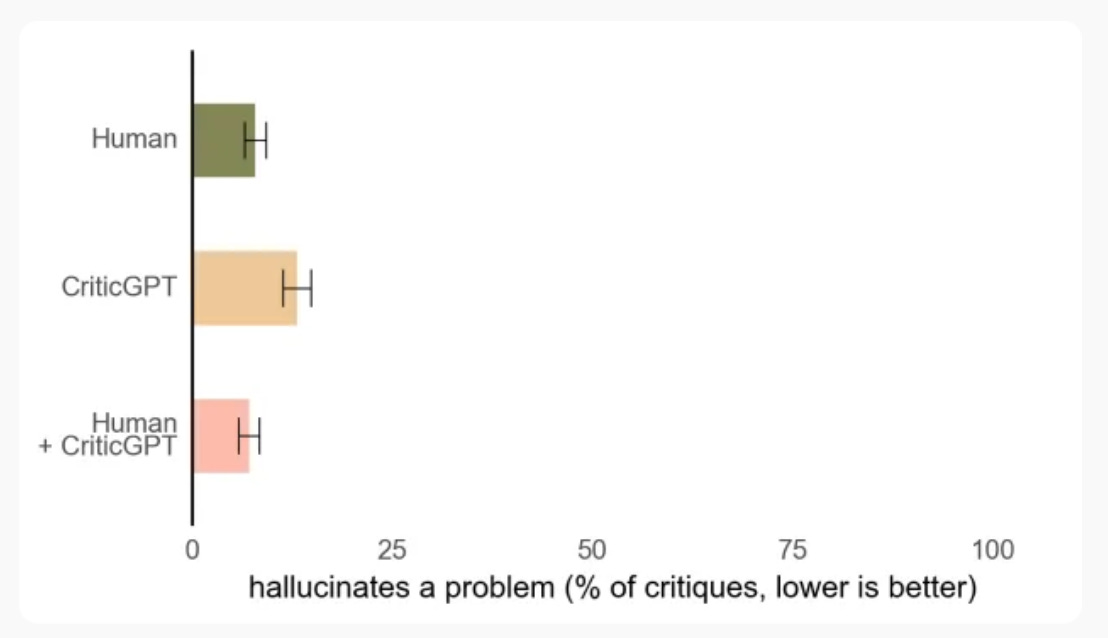

CHATTY CRITIC — The day after I wrote about LLMs correcting themselves in FU#7, OpenAI published a paper about a GPT-4-powered code reviewer. The tool, called CriticGPT, finds human-inserted bugs at a higher rate than OpenAI’s contractors. However, CriticGPT also invents problems with the code at a higher rate.

NO JAWBONES TO PICK — The U.S. Supreme Court ruled last week on Murthy v. Missouri. With a 6-3 majority, SCOTUS reversed a lower court decision and asserted that White House communications with tech companies about misinformation on their platforms were not censorship. The court repeated that in another ruling on Monday.

Murthy was ultimately a case about government coercion decided on standing rather than on an assessment of whether platforms aptly moderated alleged misinformation by (among others) Jay Bhattacharya, Jill Hines and Jim Hoft.

But it matters to readers of this newsletter because, as internet law guru James Grimmelmann put it, the argument “was based on far-fetched conspiracy theories.”

To the majority’s credit, they called out the shaky fact-checking of lower courts in an extensive footnote:

> The Fifth Circuit relied on the District Court’s factual findings, many of which unfortunately appear to be clearly erroneous. The District Court found that the defendants and the platforms had an “efficient report-and-censor relationship.” But much of its evidence is inapposite.
>
> For instance, the court says that Twitter set up a “streamlined process for censorship requests” after the White House “bombarded” it with such requests. The record it cites says nothing about “censorship requests.” Rather, in response to a White House official asking Twitter to remove an impersonation account of President Biden’s granddaughter, Twitter told the official about a portal that he could use to flag similar issues. […]
>
> Some of the evidence of the “increase in censorship” reveals that Facebook worked with the CDC to update its list of removable false claims, but these examples do not suggest that the agency “demand[ed]” that it do so.
>
> Finally, the court, echoing the plaintiffs’ proposed statement of facts, erroneously stated that Facebook agreed to censor content that did not violate its policies. Instead, on several occasions, Facebook explained that certain content did not qualify for removal under its policies but did qualify for other forms of moderation.

There will be more litigation, as RFK Jr. was quick to point out. And some of the harms of the censorship circus can’t be undone (ask Nina Jankowicz). But for now, many disinformation researchers whose work was misrepresented and weaponized will feel like UW’s Kate Starbird: “Elated. And vindicated.”

Headlines

- Teams of LLM Agents can Exploit Zero-Day Vulnerabilities (Richard Fang, Rohan Bindu, Akul Gupta, Qiusi Zhan, Daniel Kang)

- USD 257 million seized in global police crackdown against online scams (Interpol)

- X’s AI Superspread Presidential Debate Misinformation (NewsGuard) with OpenAI’s ChatGPT and Microsoft’s Copilot repeated a false claim about the presidential debate (NBC News)

- ChatGPT gave incorrect answers to questions about how to vote in battleground states (CBS News)

- Russia to ban 81 foreign media outlets in response to Europe’s sanctions (The Record)

- Deepfakes Are Evolving. This Company Wants to Catch Them All (Wired)

- Google disrupted over 10,000 instances of DRAGONBRIDGE activity in Q1 2024 (Google Threat Analysis Group)

- Swallowing: I Was Mike Mew’s Patient (The Paris Review)

- An influencer’s AI clone started offering fans ‘mind-blowing sexual experiences’ without her knowledge (The Conversation)

- Mitigating Skeleton Key, a new type of generative AI jailbreak technique (Microsoft)

- Update to our policy on Disclosure requirements for synthetic content | July 2024 (Google Advertising Policies)

- ID Verification Service for TikTok, Uber, X Exposed Driver Licenses (404 Media)

- They ‘Do Not Need Rest Like Humans’: RT Debuts AI-Generated ‘Journalists’ (InfoEpi Lab)

- Russian influence operation Doppelganger linked to fringe advertising company (Institute for Strategic Dialogue)

Before you go

Given Twitter has given up on bots, I guess at least these ones are kind? (Conspirator Norteño)

Oops

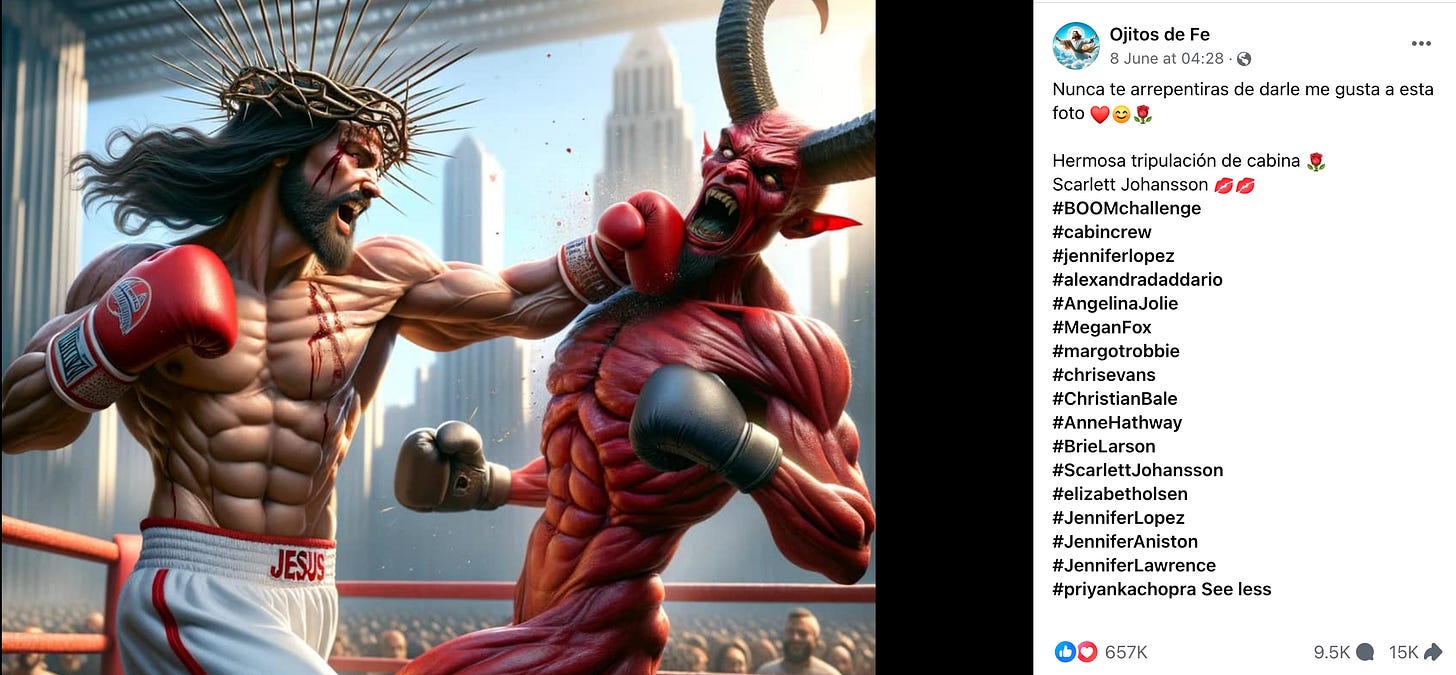

What in the name of Hot Jesus is going on with those hashtags?

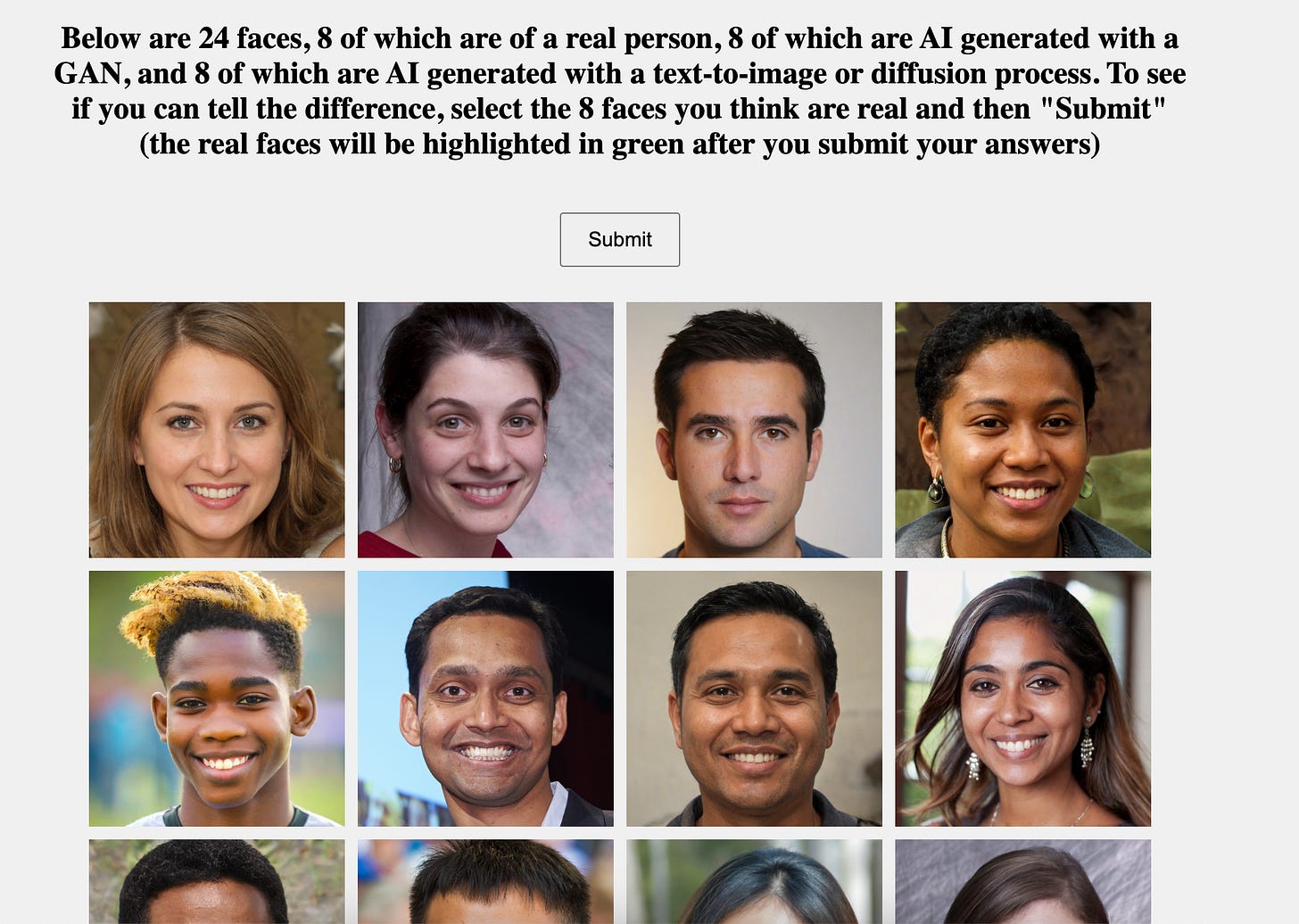

I got 6/8 on this quiz (not bragging). How about you?

[1] Twitter views, like Monopoly money, count only within the confines of their platforms.

[2] The clip also serves as fabricated evidence that CNN is colluding with the Democrats.
