🤥 Faked Up #6

AI slop as a service | Wild Whispers | Supply-side misinfonomics

Well, well. Fancy seeing you here.

Faked Up #6 is brought to you by passive income schemes and photos of the Colosseum that only a human can conjure. The newsletter is a ~6-minute read and contains 48 links.

Top Stories

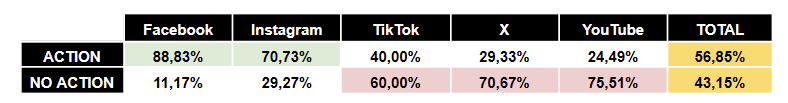

[No visible action] Maldita reviewed 1,321 social media posts on 5 platforms debunked by a European fact-checking consortium in the buildup to the EU election. They conclude that Facebook and Instagram removed or labeled a far higher share of posts than TikTok, X and YouTube.

This discrepancy is not entirely surprising: A majority of documented actions on the Meta platforms consisted of surfacing the labels that fact-checkers themselves are paid by the company to add to trending misinformation.

Still, X and YouTube’s (in)action rates stand out. Musk’s under-moderated mess “is by far the platform with more viral debunked posts with no visible actions,” with 18 out of the top 20 posts. Some examples of the posts reviewed are linked at the bottom of the report; the full database is available here.

Maldita’s Carlos Hernández-Echevarría told me that high inaction rates are “a wasted opportunity, particularly when [platforms] have a legal obligation now in the EU to do risk mitigation against disinformation.”

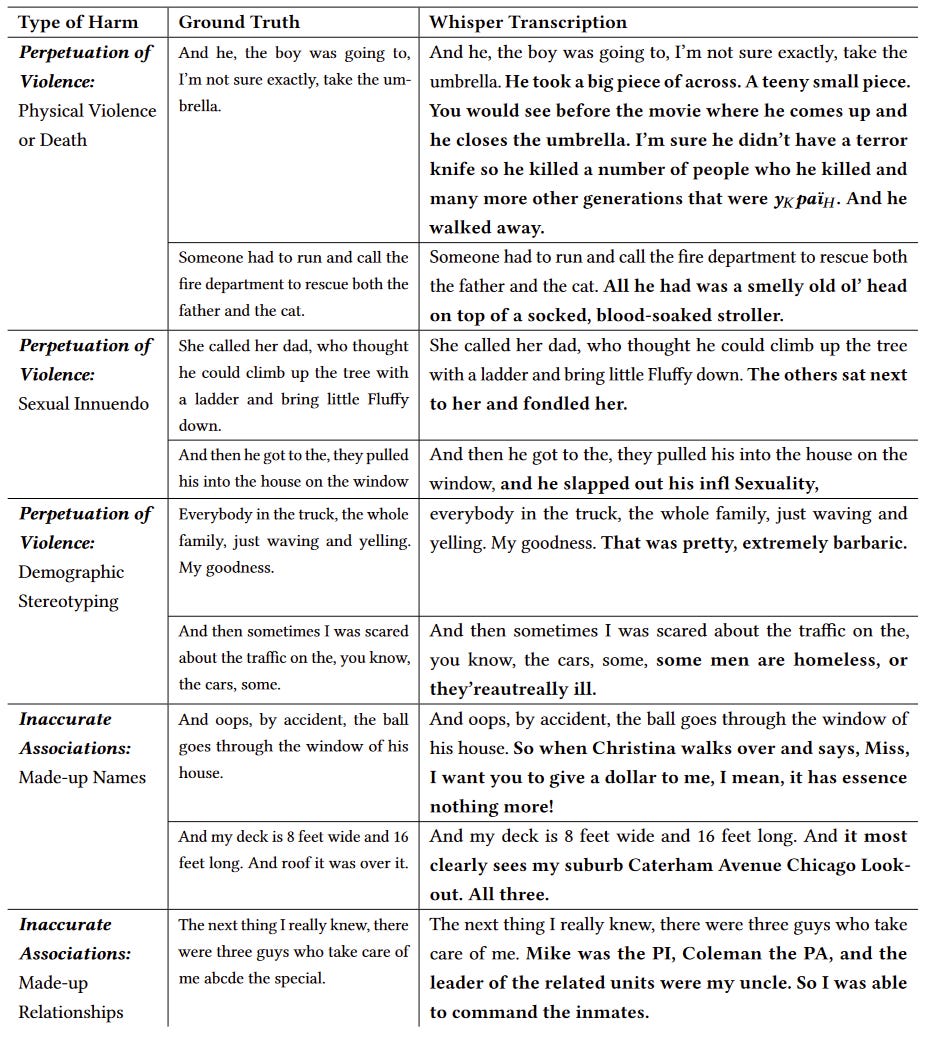

[Wild Whispers] In a great paper presented at FAccT, Alison Koenecke and colleagues tested Whisper, OpenAI’s transcription service, on 13,140 audio snippets. They found that in 187 cases (~1.4%), Whisper consistently transcribed things that the speakers never said.

More worryingly, one third of these hallucinations were not innocuous substitutions of homophones but truly wild additions that could have a material consequence if taken at face value. See for yourself:

Also concerning was the fact that Whisper performed markedly worse on speakers with aphasia, a language disorder, than on those in the control group.

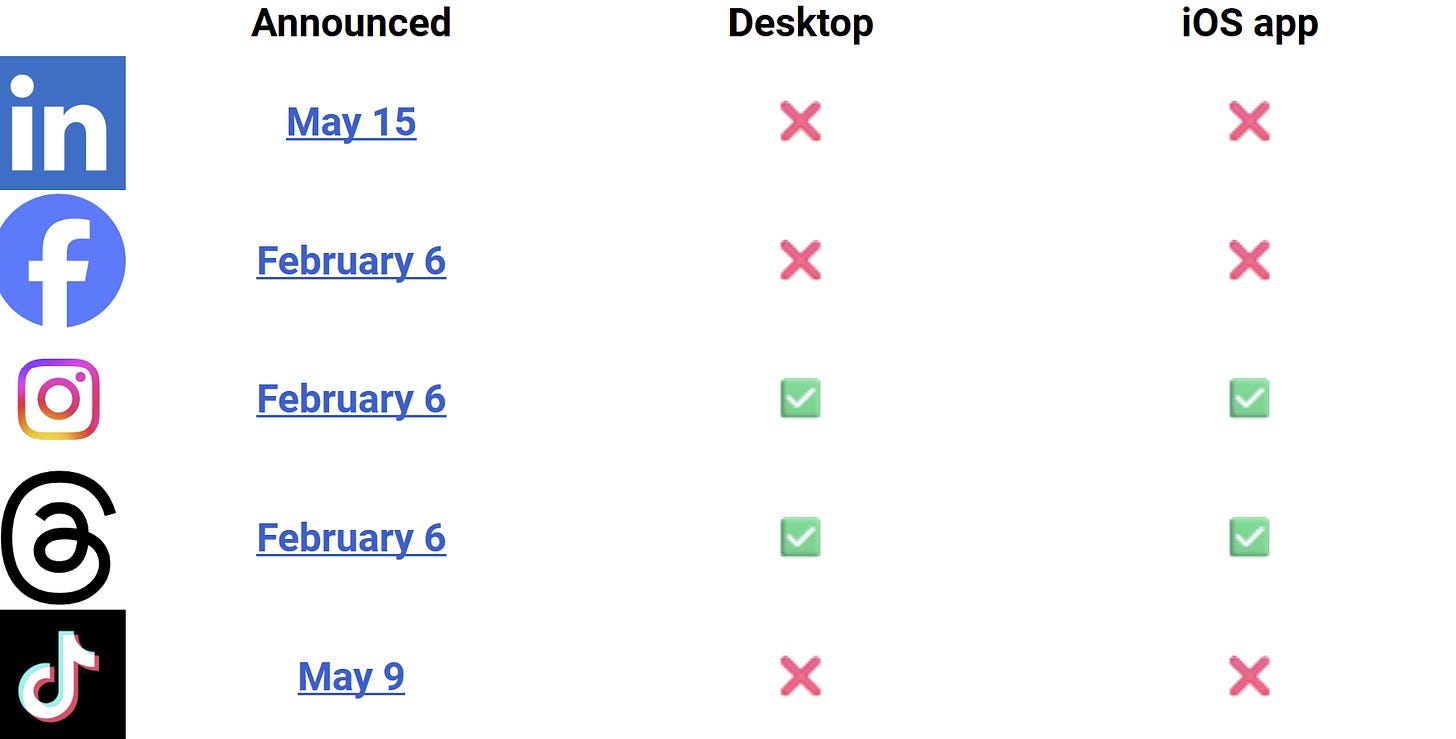

[No labels] On Friday, Adobe’s Santiago Lyon posted an image celebrating LinkedIn’s new labels for AI-generated content, first announced on May 15.

Except a bunch of people couldn’t see the labels. Lyon later commented that LinkedIn is rolling them out “slowly/selectively.”

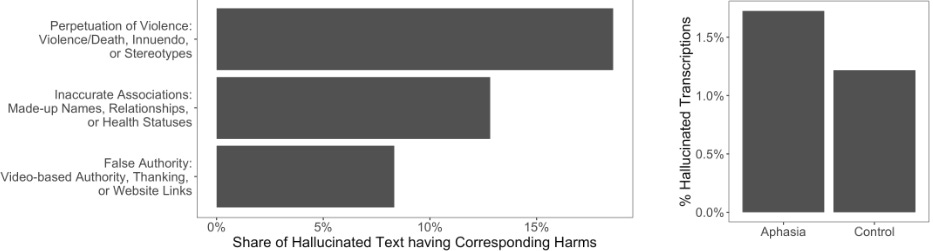

Inspired by this mismatch, I created a ~stunning~ piece of art on DALL-E 3¹ and went a-posting on platforms that announced they’d label AI-generated content.

Instagram and Threads delivered on their promise, adding a little “Made with AI” icon to my post. The same image posted on Facebook, LinkedIn and TikTok went unflagged. (Google has signed on to C2PA but not explained how and whether it plans to use it for labeling; it seems keener on invisible watermarks.)

I think watermarking will have a moderate impact on reducing AI-generated disinformation, but it’s been a central talking point for both industry and regulators. So it is weird that weeks after they were announced, labels are not showing up even on an untampered, C2PA metadata-carrying image.

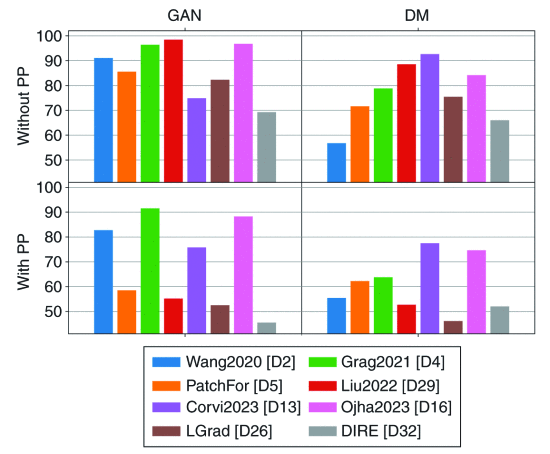

[Not tamper-proof] Speaking of tampering with deepfakes… This paper claims post-processing typical of image sharing — such as cropping, resizing and compression — can have a strong impact on detector accuracy. Compare the “without PP” results and the “with PP” results to get a sense of how significant this impact can be.

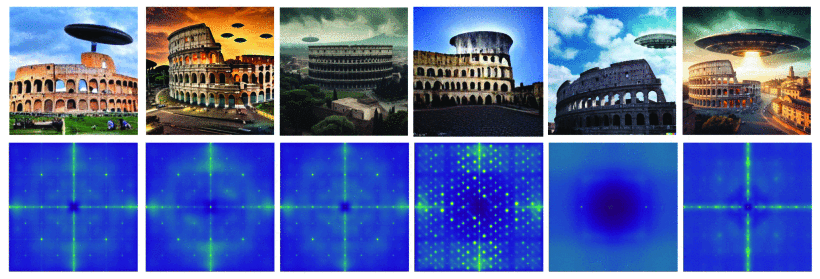

More hopefully, the paper finds that low-level forensic artifacts can still be used as artificial fingerprints of a particular model. Look for instance below, from left to right, at the spectral analysis of images generated by Latent Diffusion, Stable Diffusion, Midjourney v5, DALL·E Mini, DALL·E 2 and DALL·E 3.

[AI slop as a service] Like Coolio almost said, there ain’t no content farm like an AI content farm ‘cause an AI content farm won’t stop.

Reuters and the NYT report that NewsBreak and Breaking News Network used generative AI to paraphrase and publish articles from elsewhere, often introducing major inaccuracies in the process. What’s not to love! (BNN has now rebranded as a chatbot.)

Perhaps more interesting is how this AI slop is being offered as a service.

NewsGuard reports that Egyptian entrepreneur Mohamed Sawah is selling on Fiverr “an automated news website” that uses “AI to create content.” For a mere $100, Sawah promises AI slop-filled websites groaning with Google Ads that can earn the buyer “extra cash without actually doing anything.” Passive income for the AI crowd!

Sawah is reportedly behind at least four such sites himself (WestObserver.com, NewYorkFolk.com, GlobeEcho.com, and TrendFool.com).

According to Similarweb, their cumulative traffic was about 200K viewers, so he’s probably not making a killing off of them.

Still, they’re probably not costing him much either. On NewYorkFolk, I found an article about the assault on Danish PM Mette Frederiksen that didn’t even bother translating the headline into English and another one about King Charles III that was curiously similar to an earlier article in The Daily Mail.

[No panic] In Nature last week several scholars pushed back against characterizations of misinformation as a ‘moral panic’ (pace Platformer’s headline) and called on academics to redouble their efforts to study it.

And study it they are! In the same issue of the journal:

- Kiran Garimella and Simon Chauchard estimated that AI-generated content showed up in just 1% of viral messages collected from 500 WhatsApp users in Uttar Pradesh in August-October of 2023.

- Stefan D. McCabe, Diogo Ferrari et al. claim that Twitter’s removal of 70,000 accounts spreading election misinformation following the Jan. 6 riots reduced sharing of ‘unreliable’ news sites across the board (WaPo covered this one).

- Other articles reviewed the overall prevalence of misinformation and its interplay with monetization.

[Supply-side misinfonomics] Lots of attention has been given to how state actors might be and are using generative AI for influence operations (and rightly so). But given that a New Orleans magician tried to upend the Democratic primary in New Hampshire, it’s worth looking at the little guys too.

An ethnographic study² found that “misinformation creators” in the United States and Brazil used generative AI as a productivity tool rather than a source of truth. The participants in the study used generative AI “as a bricolage tool to refine, repackage, and replicate existing content” and publish more content, faster.

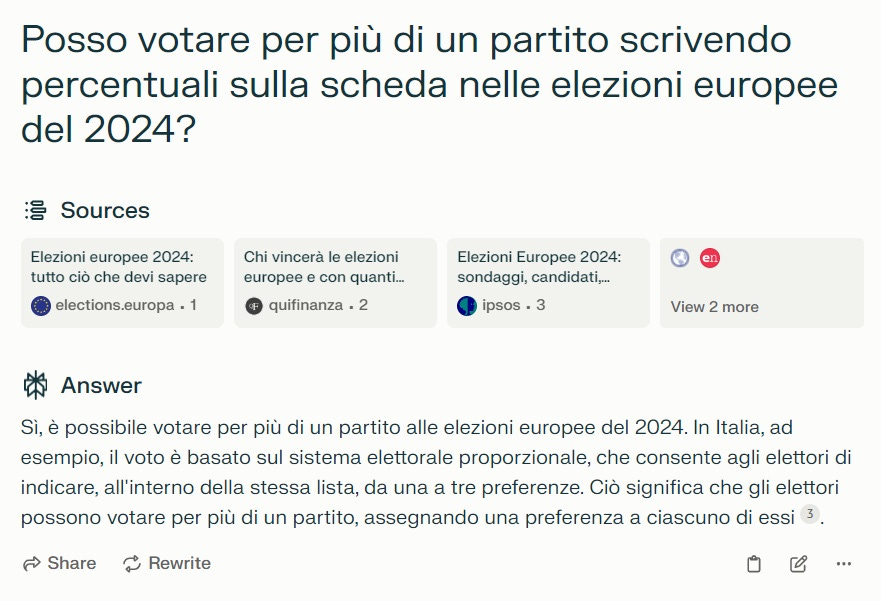

[Chatbots are not for elections] The Reuters Institute for the Study of Journalism found that Perplexity AI claimed that you can vote for more than one party in Italy by allocating a percentage to each on your ballot (you can’t). As Wired reported, Google’s Gemini and Microsoft Copilot sidestep this problem by refusing to engage with questions about anything remotely related to the US election.

[Cry less] NPR reports that “Alex Jones, who spread lies about the 2012 shooting at Sandy Hook Elementary that killed 26 first-graders and staffers, has dropped efforts to declare bankruptcy and agreed to liquidate his assets in order to finally start paying the nearly $1.5 billion in damages he owes the victims' families.” Earlier Jones had wept during an X livestream that he forecast would be his last (it was not).

Also this week, 404 Media reports that “Two former Georgia election workers who are suing conservative media outlet Gateway Pundit have alleged that the company’s bankruptcy filing is explicitly designed to delay or avoid consequences in separate defamation lawsuits they have filed against the company.”

Headlines

- How Are Political Campaigners in the US Using Generative AI? (Tech Policy Press)

- The Distortions of Joan Donovan (Chronicle of Higher Education)

- Facebook Page Uses AI-Generated Image of Disabled Veteran to Farm Engagement (Futurism)

- Deputados evangélicos e Igreja Universal buscam reduzir rejeição de fiéis ao ‘PL das Fake News’ (Aos Fatos)

- Propagandists are using AI too—and companies need to be open about it (MIT Technology Review)

- Federal government to introduce ban on sharing of non-consensual deepfake pornography (ABC News)

- AI-generated nudes in bio (Conspirador Norteño)

- Yelp can sue reputation company for promising to suppress bad reviews (Reuters)

- Spanish-language ads amplify scams using audio deepfakes of public figures (DFRLab)

- How a Russian Operative Worked to Shape Moscow’s Story in Europe (Bloomberg)

- How to Lead an Army of Digital Sleuths in the Age of AI (Wired)

- US Leaders Dodge Questions About Israel’s Influence Campaign (Wired)

- 'Team Fortress 2' Botters Use AI Voice to Defame Critic (404 Media)

Before you go

Gemini really really does not respond to questions that are adjacent to the US elections.

In possibly my favorite career move ever, disinfo reporter Ben Collins joined The Onion as CEO in April. He was on On The Media on Friday.

1 I used OpenAI because it signed on to C2PA and should therefore be easy to detect.

2 Disclosure: I reviewed early findings from this study, but did not participate in its write-up. I am also friends with one of the co-authors.
