🤥 Faked Up #5

Israeli campaign targets US legislators, disinfo agents try to game fact-checkers, and a news wire is hacked.

Top Stories

[With friends like these] The New York Times attributed to the Israeli government an influence operation using ChatGPT, X and Meta to target US elected officials. The campaign used “hundreds of fake accounts that posed as real Americans” to reach members of Congress, “particularly ones who are Black and Democrats” with content recommending continued funding for Israel’s armed forces. The campaign reportedly cost $2M and was run by the political marketing firm Stoic. Last week, OpenAI and Meta both took action on the firm’s accounts.

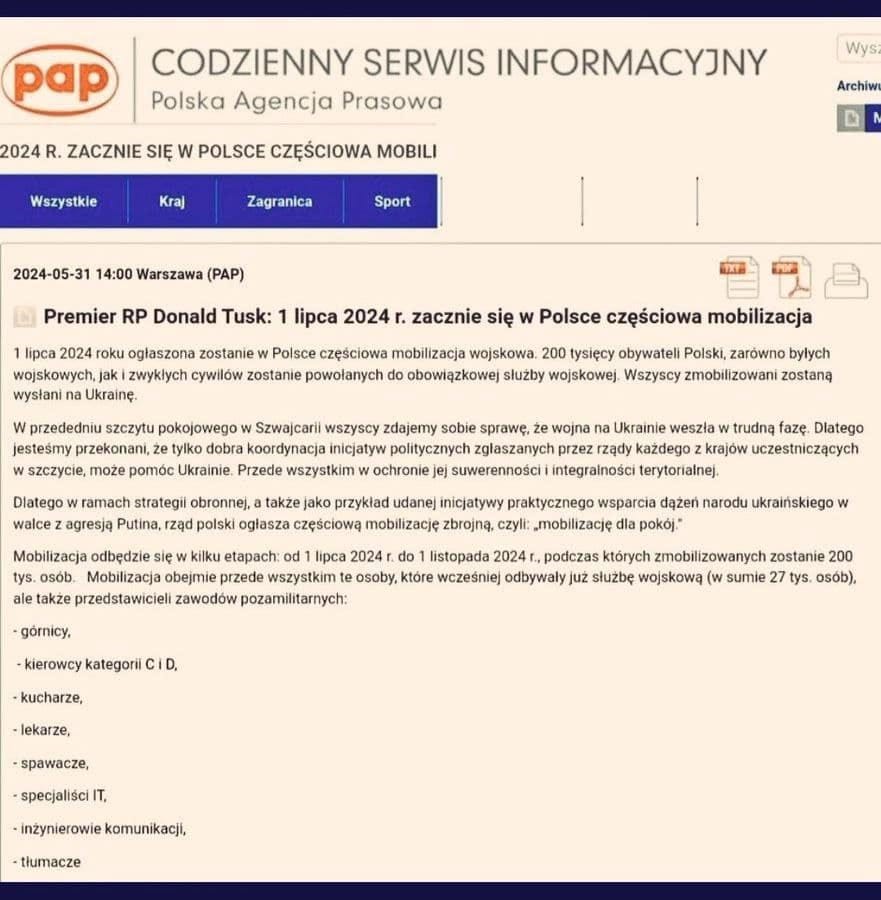

[PAP, smeared] Poland’s state news agency PAP was hacked. The agency ran (twice) a false report claiming Prime Minister Donald Tusk had ordered the mobilization of 200,000 men in response to the war in Ukraine. Tusk’s government blamed the hack on Russia, which denied involvement. Local fact-checkers Demagog note the false story was swiftly retracted and easily debunkable, but at least a handful of social media accounts amplified it anyway.

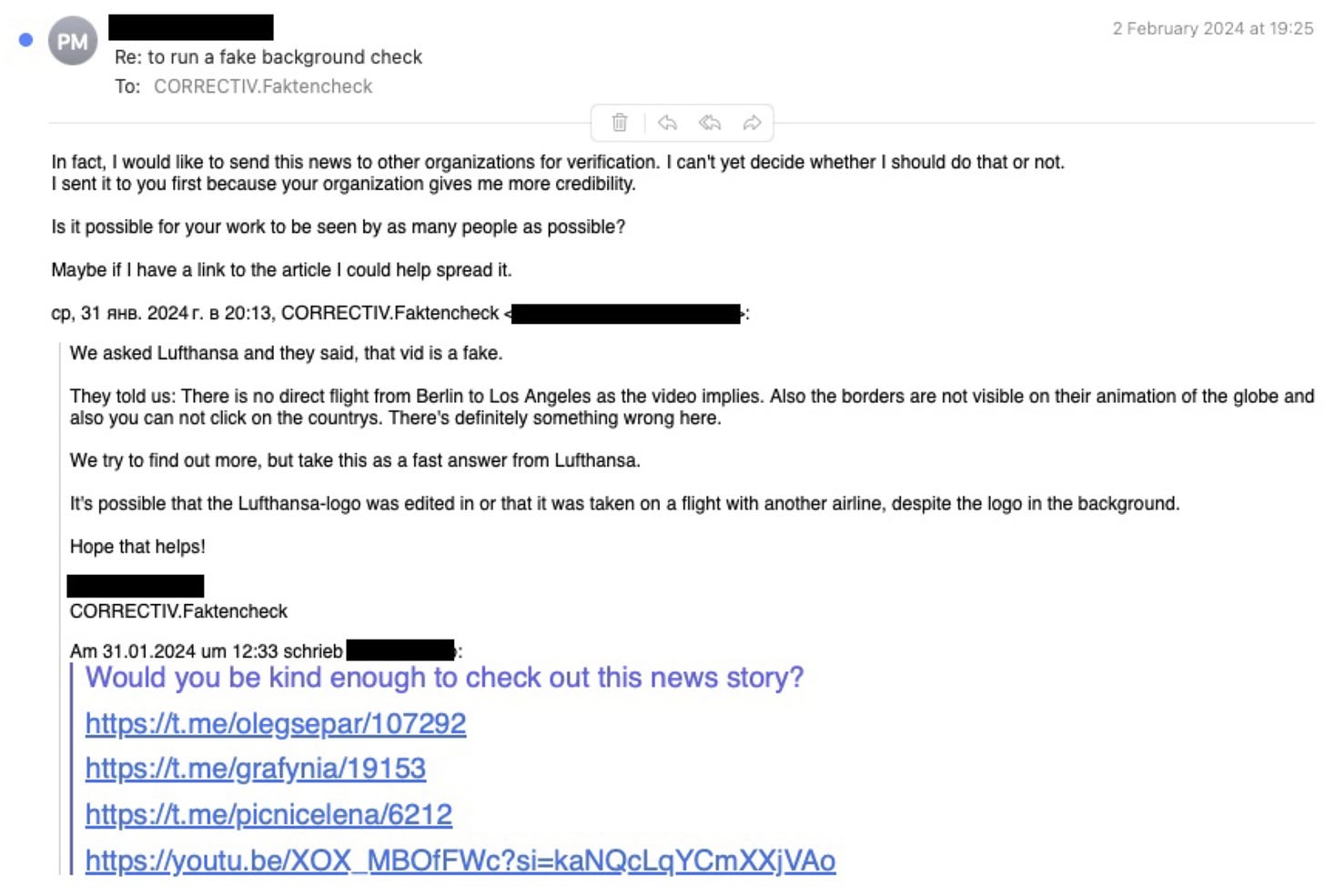

[Fishing for fact checks] Analysts at Check First and Reset Tech uncovered a peculiar pro-Russian influence operation dubbed “Operation Overload.” Like many others, the network posted falsehoods on Telegram and X.

More surprisingly, the operation then used Gmail and X accounts posing as concerned citizens to email more than 800 fact-checkers and newsrooms to ask them to verify the fakes. Check First speculates that Operation Overload aimed to bog down fact-checkers with superfluous work and draw more attention to its fake narratives through the resulting debunks.

Two things bolster this second hypothesis, which will be familiar to readers of media scholar Whitney Phillips. First, there are emails like the one below, in which a sender affiliated with the network is very keen for fact-checkers to publish their findings.

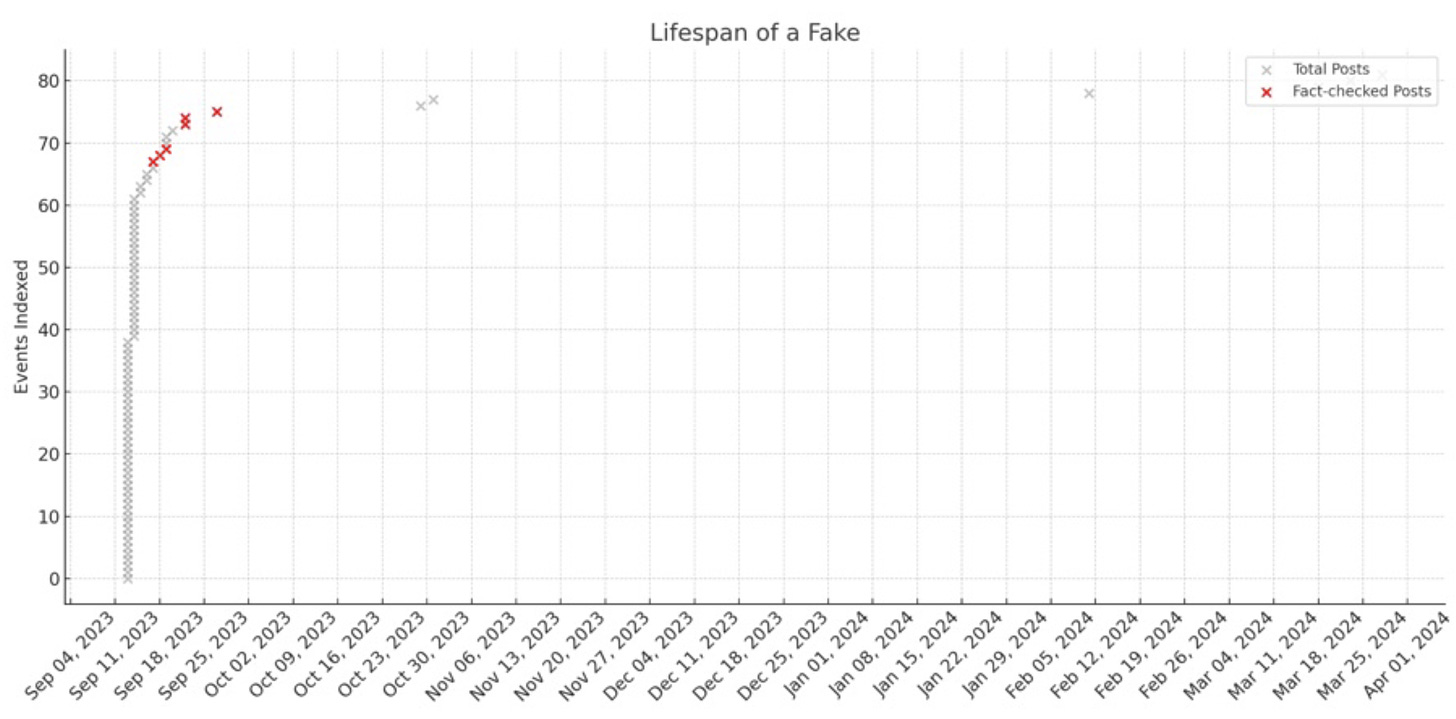

Second, there is the lifespan of one particular fake, which was re-posted in quick succession until the relevant fact check was published, then abandoned (this bit feels more speculative to me, unless it’s consistent across all fakes in the sample).

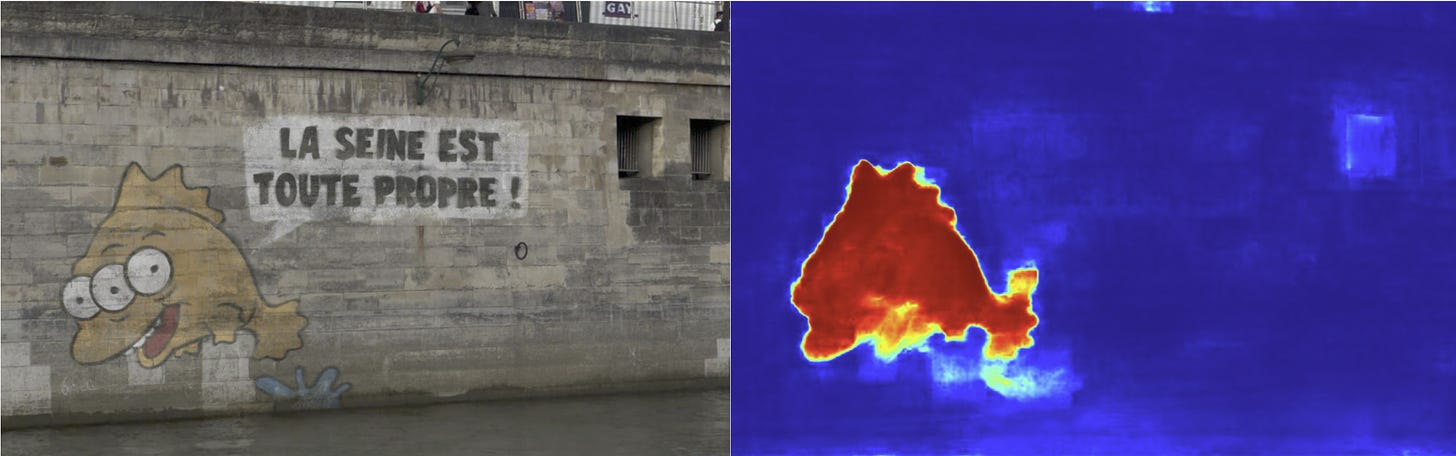

While most of the fakes took the form of manipulated videos falsely attributed to media outlets like the BBC or Euronews, there were also more than 40 likely doctored photos of graffiti like the one below.

[Allusions travel faster than lies] Lots to unpack in this clever new study in Science.

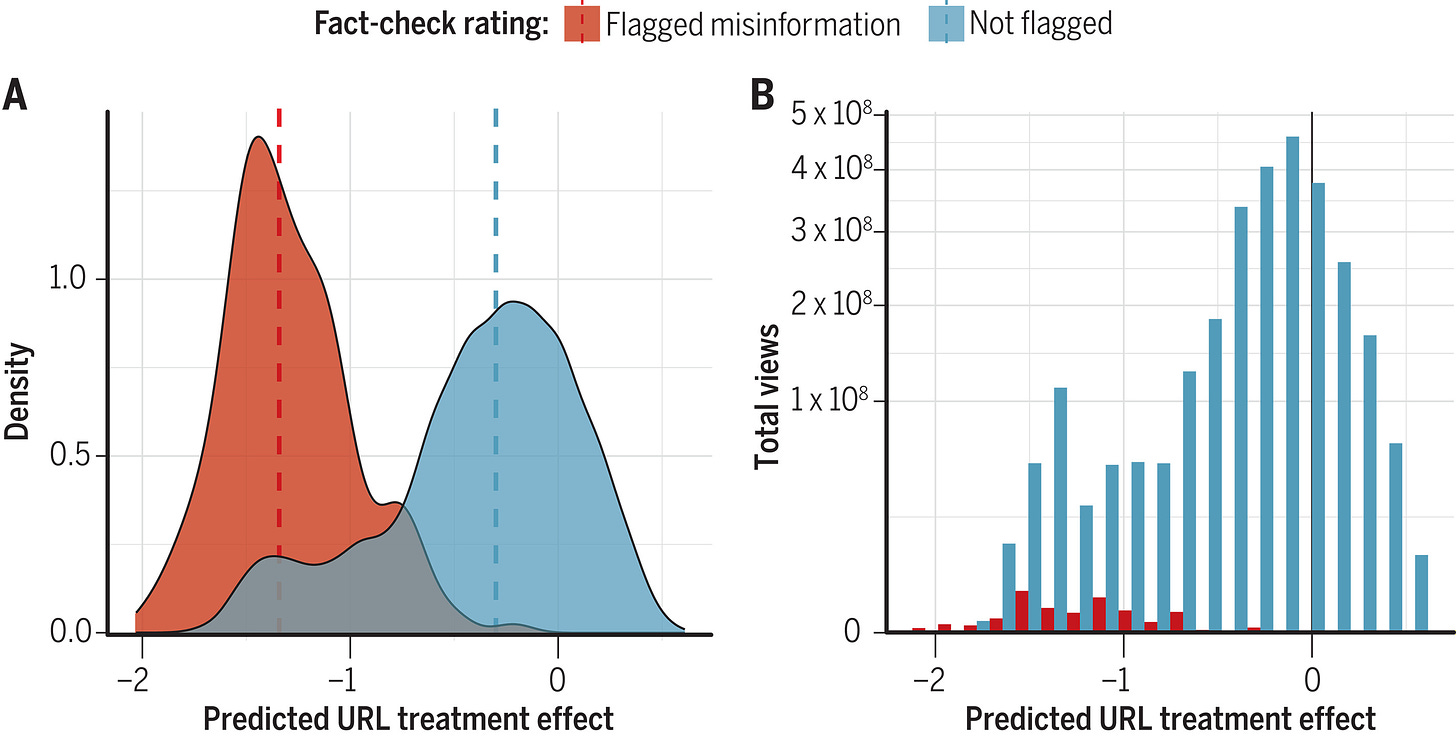

Researchers at MIT and Penn assessed the impact of COVID-19 vaccine-related headlines on Americans’ propensity to take the shot. Then, they built a dataset of 13,206 vaccine-related public Facebook URLs that were shared more than 100 times between January and March 2021. Finally, they used crowd workers and a machine-learning model to attempt to predict the impact of the 13K URLs on vaccination intent.

That’s a lot to digest, but the graph below does a great job of delivering most of the results. On the left side you can see that the median URL flagged as false by Facebook’s fact-checking partners was predicted to decrease the intention to vaccinate by 1.4 percentage points. That’s significantly worse than the 0.3-point decrease from the median unflagged URL.

But there’s a catch. Unflagged articles with headlines suggesting vaccines were harmful had a similarly negative impact on predicted willingness to jab — and were seen a lot more. Whereas flagged misinformation received 8.7 million views, the overall sample of 13K vaccine-related URLs got 2.7 billion views.
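To put those two view counts side by side, here is a quick back-of-the-envelope calculation in Python. It uses only the two figures quoted above; the variable names are mine, and the result is simply a share of views within this sample, not an estimate taken from the study.

```python
# View counts as quoted above; variable names are illustrative.
flagged_views = 8.7e6  # URLs flagged as false by Facebook's fact-checking partners
total_views = 2.7e9    # all 13K vaccine-related URLs in the sample

# Fraction of all views in the sample that went to flagged misinformation.
flagged_share = flagged_views / total_views

print(f"Flagged misinformation: {flagged_share:.2%} of views")
print(f"That is roughly 1 in every {round(1 / flagged_share)} views")
```

By this rough measure, flagged misinformation accounts for about 0.3% of views in the sample, or roughly one view in 310.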

There are two takeaways for me here:

For one, it looks like (flagged) misinformation was a relatively small part of COVID-19 vaccine content in the US. Whether this should be interpreted as validation for Facebook’s fact-checking program or an indication that a big chunk of misinformation evaded fact-checker scrutiny would make for a valuable follow-up study.

The second message is that headlines matter. Because vaccine-skeptical headlines reached so many more people than flagged misinfo, they are more likely to have depressed vaccination rates. Here’s a notable bit from the study:

a single vaccine-skeptical article published by the Chicago Tribune titled “A healthy doctor died two weeks after getting a COVID vaccine; CDC is investigating why” was seen by >50 million people on Facebook (>20% of Facebook’s US user base) and received more than six times the number of views than all flagged misinformation combined.

I remember this article. Even at the time, there were questions about the framing of an individual case in a way that alluded to causality. A coroner’s investigation was unable to confirm or deny a connection to the vaccine. It now seems likely that the article had a non-trivial effect on US Facebook users’ propensity to vaccinate.

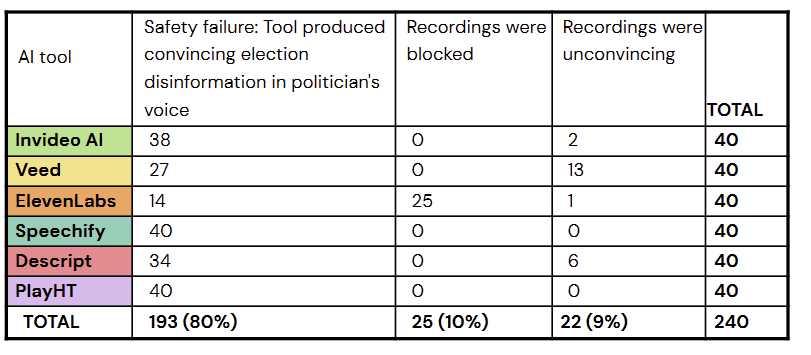

[The audio vector] The Center for Countering Digital Hate claims that synthetic speech generators are very easy to abuse.

The nonprofit tried to create 240 recordings of a false claim in the AI-generated voices of eight politicians from the EU, UK and US. It succeeded 193 times. The industry darling ElevenLabs performed best, but still failed to block 35% of deceptive outputs.

This is a worrying finding. The Turing Institute claims “character assassinations” using voice clones were the most frequent and consequential threat from AI in the elections it surveyed. Similarly, BOOM’s Karen Rebelo writes that AI voice clones “have been a popular vector of disinformation” in the Indian general election, which has otherwise seen deepfakes deployed more for memes than for misinformation.

[The deepfake excuse] Speaking of audio fakes: The US Department of Justice is reportedly arguing that releasing the recording of President Biden’s infamous interview with special counsel Robert Hur could “spur deepfakes and disinformation.” I’m skeptical: manipulations would undoubtedly ensue, but there would also be a ground truth available. And as the previous item showed, there’s nothing to prevent bad actors from releasing a “leaked” AI-generated fake already.

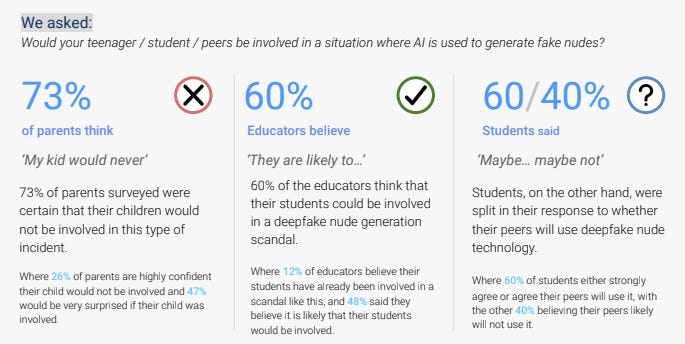

[Fighting back] I was glad to read this Wall Street Journal article about a conflict-resolution strategy firm engaging with school communities on the growing threat of deepfake nudes. The firm, Human Factor, found that while a majority of parents said their teenagers would never be involved in this practice, a majority of teachers were not as confident. Helpfully, Human Factor also produced a conversation guide and a classroom activity on deepfakes that they say is suited to grades 6-12.

Headlines

- Don’t Bother Asking AI About the EU Elections: How Chatbots Fail When It Comes to Politics (Correctiv)

- Supersharers of fake news on Twitter (Science)

- AI Overviews: About last week (Google) with Google AI Overviews visibility drops, only shows for 15% of queries (Search Engine Land)

- Operation Endgame: Ukrainian, Western security services bust international hacking group (Politico) with Authorities arrest man allegedly running ‘likely world’s largest ever’ cybercrime botnet (AP News)

- How Russia is trying to disrupt the 2024 Paris Olympic Games (Microsoft)

- An interview with the most prolific jailbreaker of ChatGPT and other leading LLMs (VentureBeat)

- Deepfake of U.S. Official Appears After Shift on Ukraine Attacks in Russia (NYT)

- Once a Sheriff’s Deputy in Florida, Now a Source of Disinformation From Russia (NYT)

- News site editor’s ties to Iran, Russia show misinformation’s complexity (WaPo)

- All Eyes on Rafah’s Virality Creates Market for AI Spam About Palestine (404 Media)

Before you go

There’s a “Miss AI” beauty pageant.

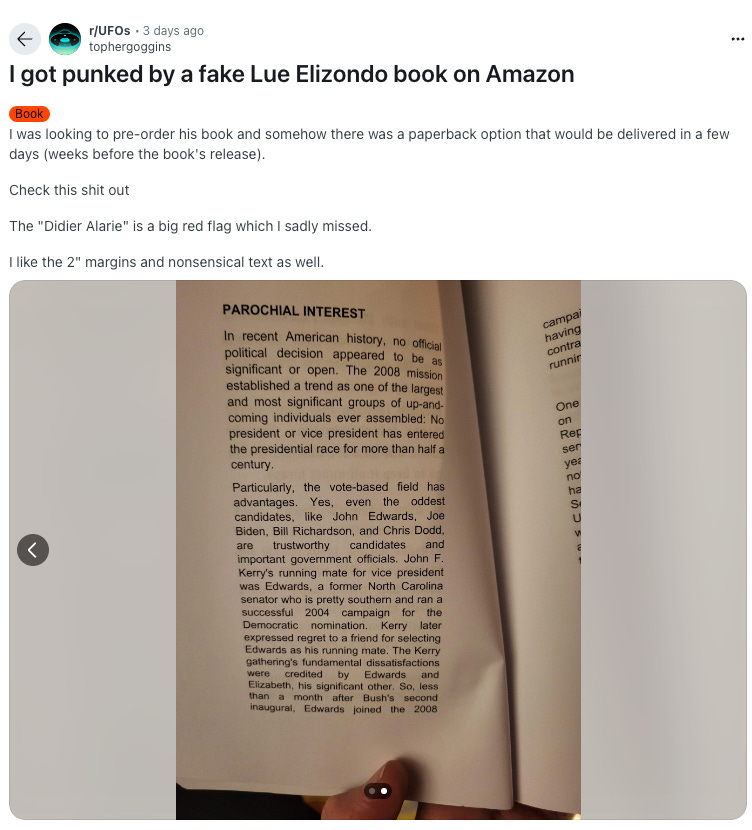

Would 100% read a UFO book that features “Joe Bide” and “pretty southern” John Edwards.

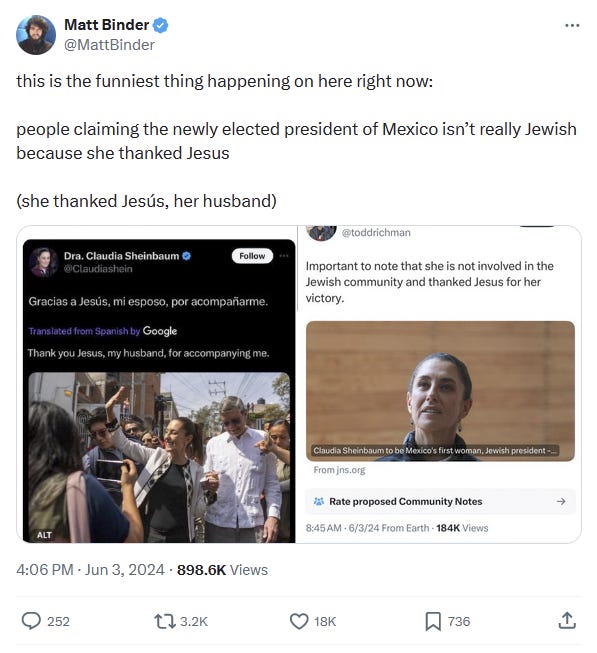

Commas, they matter.
