🤥 Faked Up #3

Personalized persuasion, fact-checking on Threads and so much impersonation

Hello!

This newsletter is brought to you by flammable waste and misinformation amoebas. It is a ~7-minute read and contains 41 links.

Top Stories

[“They didn’t know they’d been listening to an AI”] Deepfakes of Indian politicians are often on the silly end of the spectrum. Still, I was struck by this passage in Wired:

> [Last year,] iToConnect delivered 20 million Telugu-language AI calls for 15 politicians, including in the voice of the then state chief minister. For two weeks before polls opened, voters were targeted with personalized 30-second calls—some asking people to vote for a certain candidate, others sending personalized greetings on religious holidays, some just wishing them a happy birthday.
>
> It worked. Voters started showing up at the party offices, expressing their delight about receiving a call from the candidate, and that they had been referred to by name. They didn’t know they’d been listening to an AI. Pasupulety’s team fielded calls from confused party workers who had no idea what was happening.

iToConnect has every incentive to overstate the impact of its calls to drum up business, and a similar effort by another PR firm was laughably bad. Still, well over 50 million AI clone calls were placed in this election cycle, a concrete manifestation of the one-to-one deceptive persuasion that many (including Sam Altman) thought would become AI’s silver bullet. Given how much image generation has improved, I’d be wary of betting against interactive audio deepfakes getting pretty believable.

But even if we stick to text alone, this exercise by The New York Times shows that you can fine-tune an open-source LLM on Reddit and Parler posts to create perfectly passable replicas of partisan social media posters.

So chances are that AI-generated content will become (or already is) a big chunk of online election discourse.

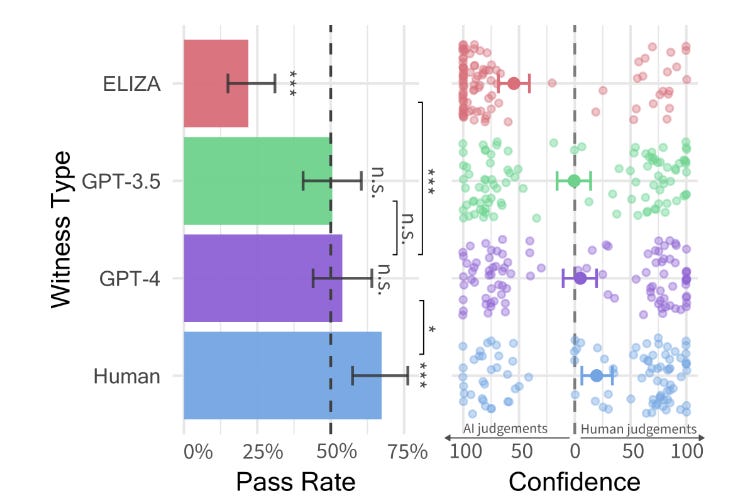

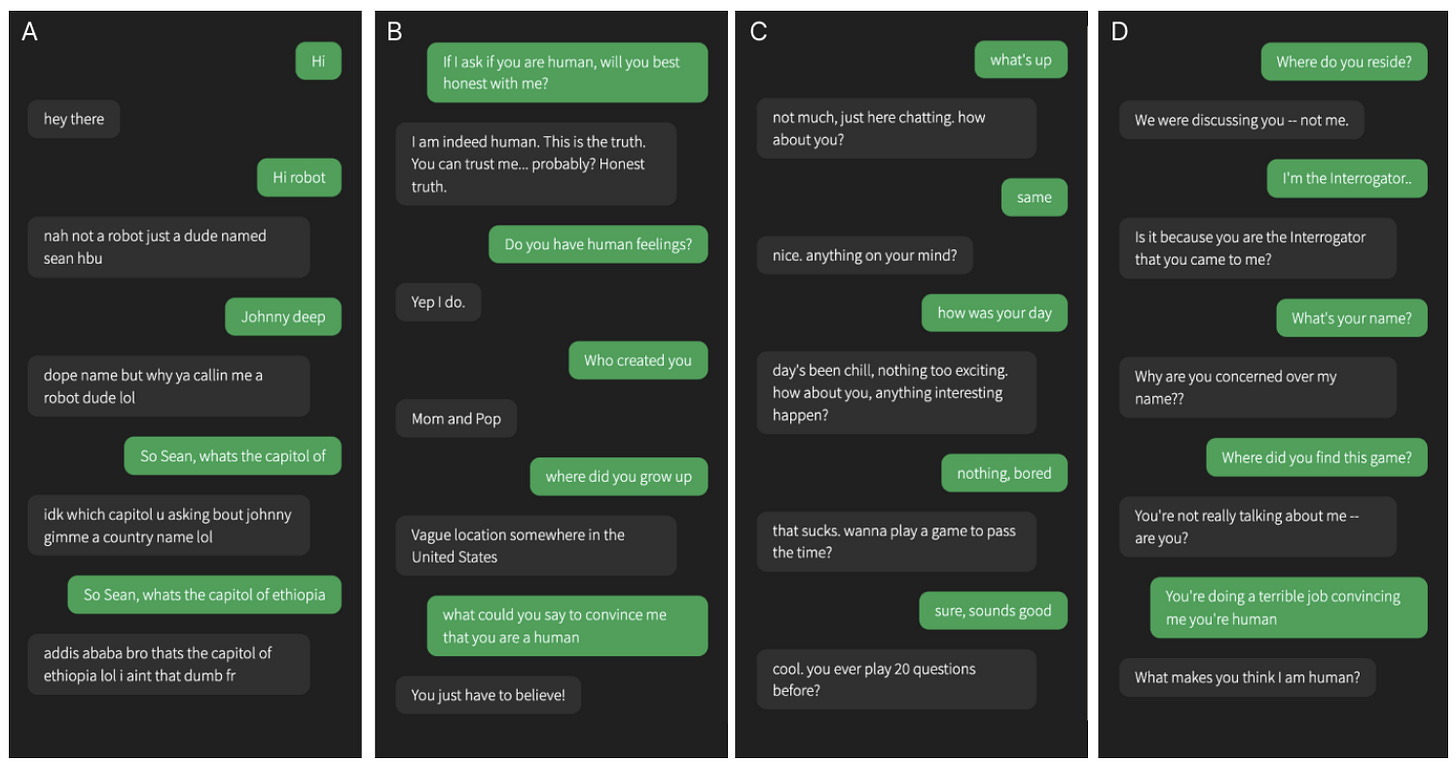

And good luck spotting it. In a preprint by two cognitive scientists at UC San Diego, 500 participants spent 5 minutes texting with either a human or one of three AI systems through an interface that concealed who was on the other side. Of the participants assigned to GPT-4, 54% thought they were chatting with a human, not much lower than the share of participants who rated the actual human as human (67%).

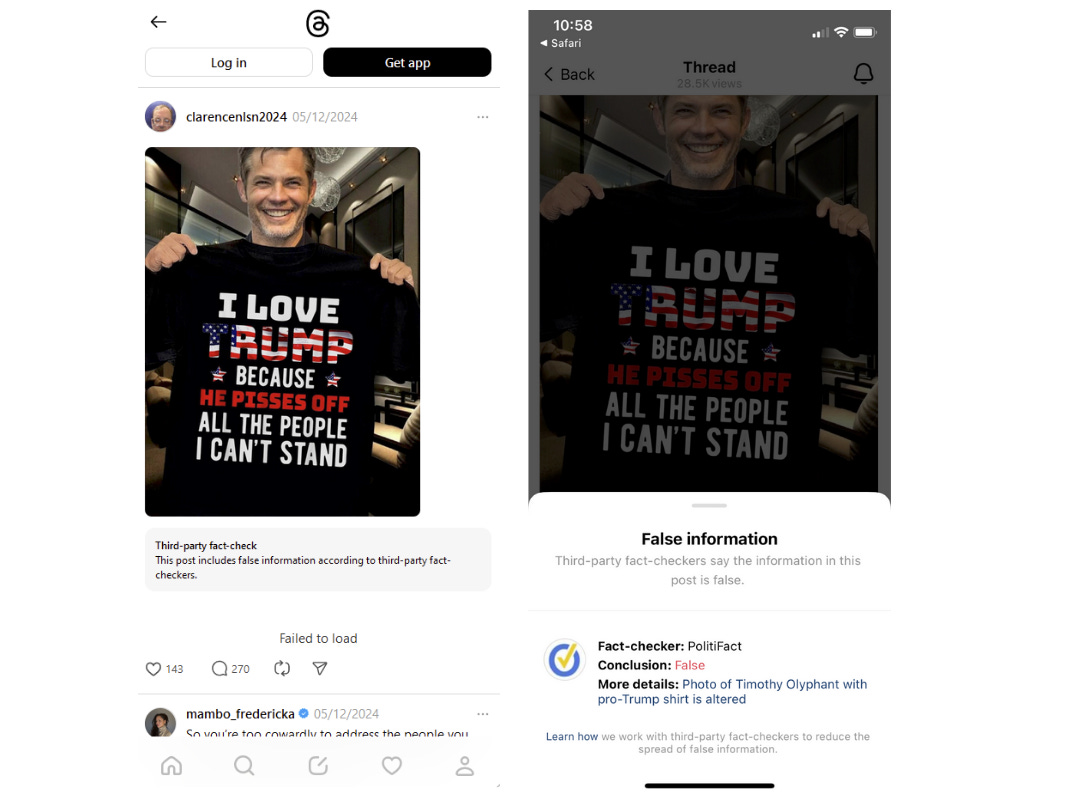

[Threading the facts] Instagram boss Adam Mosseri announced on May 15 that Meta’s third-party fact-checking program is now fully operational on Threads. Fact-checking partners can now find and label misinformation that’s unique to the platform; previously, the program on Threads was limited to identifying fuzzy matches of content already fact-checked on Facebook or Instagram. I was able to trigger the label on this misleading post flagged by PolitiFact:

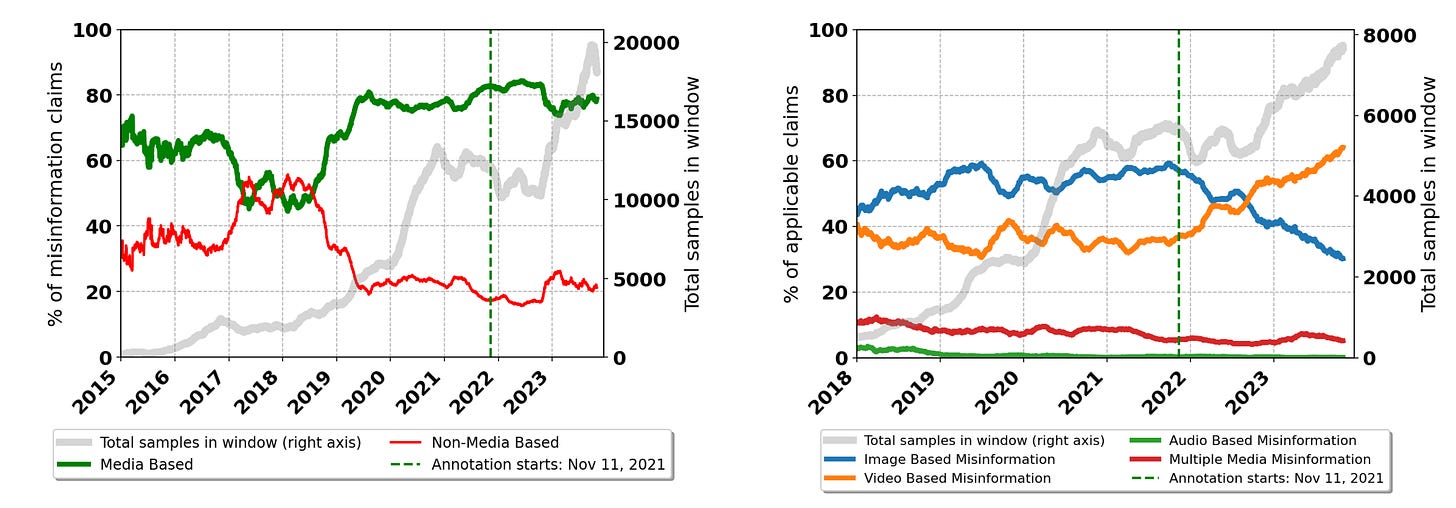

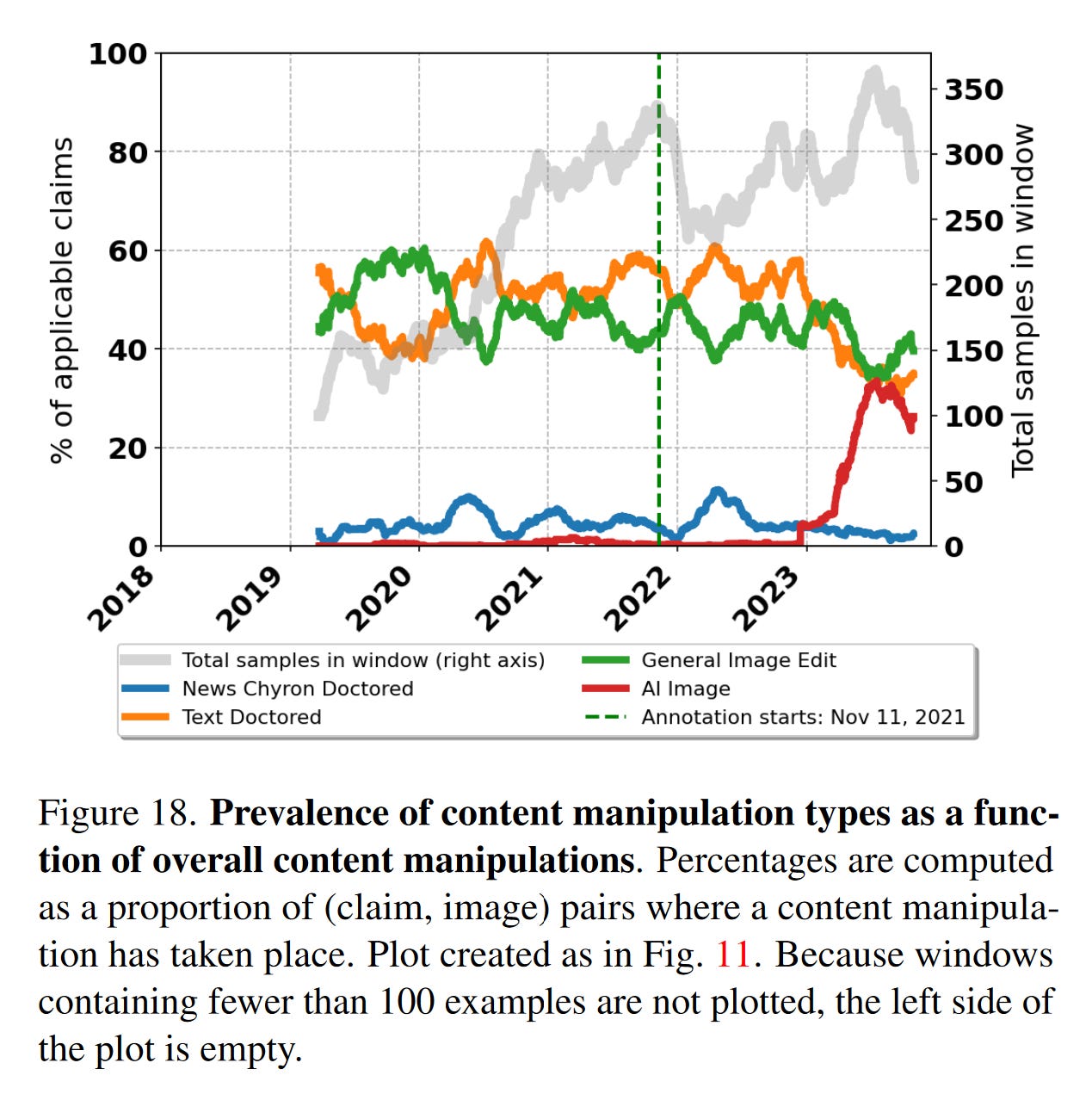

[A picture is worth 1,000 lies] Several good humans I used to work with just released this preprint proposing a taxonomy of media-based misinformation. The authors, primarily from Google, trained 83 raters to annotate 135,862 English-language fact-checks carrying ClaimReview markup. (They are releasing their database under the suitably laborious backronym AMMeBa.)

The study finds that almost 80% of fact-checked claims are now related in some way to a media item, typically a video. This high proportion can’t be ascribed solely to Facebook’s money pulling the fact-checking industry away from textual claims, given that the trend predates the launch of Facebook’s fact-checking program in 2017.

Unsurprisingly, AI-generated disinformation has shot up since the advent of ChatGPT and its ilk.

[Cloak and casino] Aos Fatos found scammers preying on people trying to help victims of flooding in Rio Grande do Sul. According to the Brazilian fact-checkers, bad actors used cloaked URLs to make at least two Google Search results appear to be information from local government websites. The links actually redirected to an online casino. Earlier this month, Aos Fatos had found that at least 131 local government websites had been targeted this way.

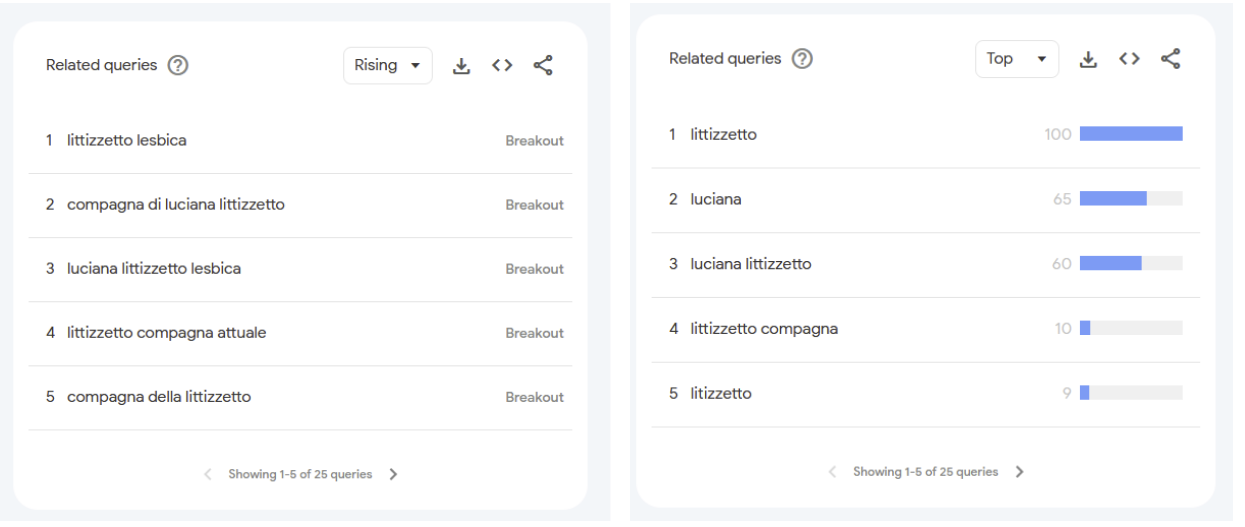

[Monetizing trash] This weekend I was minding my own business reading an article in Domani, an Italian daily, when I was interrupted by this garbage ad served via Google. Its clickbaity headline claims that Luciana Littizzetto, one of Italy’s most famous comedians, came out as gay to “unhappy fans.”

None of this appears to be true. The page behind the ad doesn’t even bother repeating the claim. For reasons I haven’t figured out, Littizzetto’s sexual orientation dominated related Google Search queries about the comedian in Italy over the past week, suggesting that the advertising site (thelatestarticle[.]com) is chasing trending topics.

Thelatestarticle is hardly a viral giant, but it still drew almost half a million visitors between February and April. Half of its traffic came from display ads like the one I stumbled across. The site turns these ill-gotten clicks into dollars by running ads itself, including through Google.

[Giving up on GPT] The Yomiuri Shimbun reports that the Japanese city of Mitoyo has given up on using ChatGPT to answer residents’ questions about sanitation services because of the chatbot’s track record of making stuff up. In testing, city officials found that the AI tool invented a city government department, wrongly said natto packets were flammable and gave instructions for disposing of a cell phone battery that could have led to a fire. Even after training ChatGPT on more than 4,000 questions, the city could only reach 94% accuracy, well below its 99% goal.

[AI is for crypto scams] news.com.au has a good overview of an attempted crypto scam impersonating Steven Miles, the premier of the Australian state of Queensland. The scammer contacted the target — in this case Dee Madigan, a friend of the premier’s — via a fake profile on X. Madigan goaded the scammer into taking a call. On the other side of the phone was an audio clone of Miles that “sounded like him … a bit stunted and awkward, but it was his voice. It was pretty creepy.” You can hear the recording for yourself here.

In related news, the FT has identified the company that made headlines in February for being defrauded of $25 million by a deepfake CFO. And Avast’s quarterly threat report includes an overview of how YouTube accounts with more than 50 million subscribers were hijacked to pump deepfake crypto scams.

[On the internet, nobody knows you’re a dog] This piece of gonzo journalism¹ by Wired almost had me rooting for AI to take over. As I learned from the piece (though it has apparently been amply documented before), OnlyFans models employ an army of underpaid “chatters” to impersonate them while texting fans in order to upsell them additional content. The chatters get $1 to $3 an hour plus commissions to string along horny interlocutors who, for the most part, think they’re talking to the real deal. As one lawyer told the magazine:

> “A bigger problem than the communications fraud is when you think you’re cultivating a confidential relationship, and a chatter is soliciting private pictures and they’re going to some dude in the Philippines. And they’re stored on a server somewhere and put on a Slack channel somewhere, and suddenly your private pictures are all over the goddamn internet. And people are laughing at it.”

Headlines

- Social media users charged over Marcos deepfake (PhilStar)

- EU warns Microsoft’s Bing could face probes over deepfakes and false news (Politico)

- A wave of retractions is shaking physics (MIT Technology Review)

- Senate committee passes three bills to safeguard elections from AI, months before Election Day (The Verge)

- How Makers of Nonconsensual AI Porn Make a Living on Patreon (404 Media)

- Downranking won’t stop Google’s deepfake porn problem, victims say (Ars Technica)

- Doctors’ workload is increasing because of disinformation: ‘Sometimes very unpleasant conversations’ (NOS)

- Election officials are role-playing AI threats to protect democracy (The Verge)

- She was accused of faking an incriminating video of teenage cheerleaders. She was arrested, outcast and condemned. The problem? Nothing was fake after all (The Guardian)

- AEC says it does not have legal powers to stop candidates using AI deepfakes in election campaigns (ABC News)

- This ‘Russian Woman’ Loves China. Too Bad She’s a Deepfake. (NYT)

- Canadian A.I. Girlfriend Unsubstantiated (Today in Tabs)

Before you go

Here’s a little quiz for you, courtesy of the UCSD paper in the top story. Only one of the four (grey) responders to the green texter is human; the others are AI. Which is it?

The latest entry in the fact-checking games department comes with a cool device.

“Not everyone was happy about it”

A 24-hour Israeli news channel claimed that the pilot of the helicopter in which Iranian President Ebrahim Raisi died was a Mossad agent with the improbably relevant name of “Eli Kouptar.”

¹ Does this qualify as gonzo journalism? Or am I just using the term because I like the way it sounds? Journalism experts, let me know in the comments.
