🤥 Faked Up #24

What I'll be watching for on America's election night, two more studies find that corrections work, and a Lebanese newspaper ran an AI fake on its front page

HEADLINES

Google is adding a modicum of transparency to photos edited with Pixel’s AI tools. The company also described how its SynthID-Text watermarks work. A woman was arrested in China for lying about snowflakes online. A network of 1,800 X bots is promoting the upcoming UN climate change conference in Azerbaijan. U.S. Senator Mark Warner called on domain registrars to do more against disinformation. LinkedIn claims to have verified the identity of 55 million of its 1 billion users.

TOP STORIES

WATCH OUT PARTY

This is the last issue of Faked Up before the main event in America’s quadrennial election misinformation festival. So much has been written about efforts to discredit the validity of the democratic process in this country through falsehoods and xenophobic innuendos that it’s hard to know where to begin.

Here are a handful of articles that set the stage for November 5th:

- NBC News and The New York Times looked at the network of organizations tied to Cleta Mitchell, the lawyer behind the falsehood-ridden pressure campaign to overturn the result of the 2020 presidential election in Georgia. They found that this year’s voter fraud narratives center heavily on noncitizens.

- And finally, Wired reported on a memo by the Department of Homeland Security warning law enforcement officials about domestic violent extremists “reacting to the 2024 election season […] by engaging in illegal preparatory or violent activity that they link to the narrative of an impending civil war, raising the risk of violence against government targets and ideological opponents.”

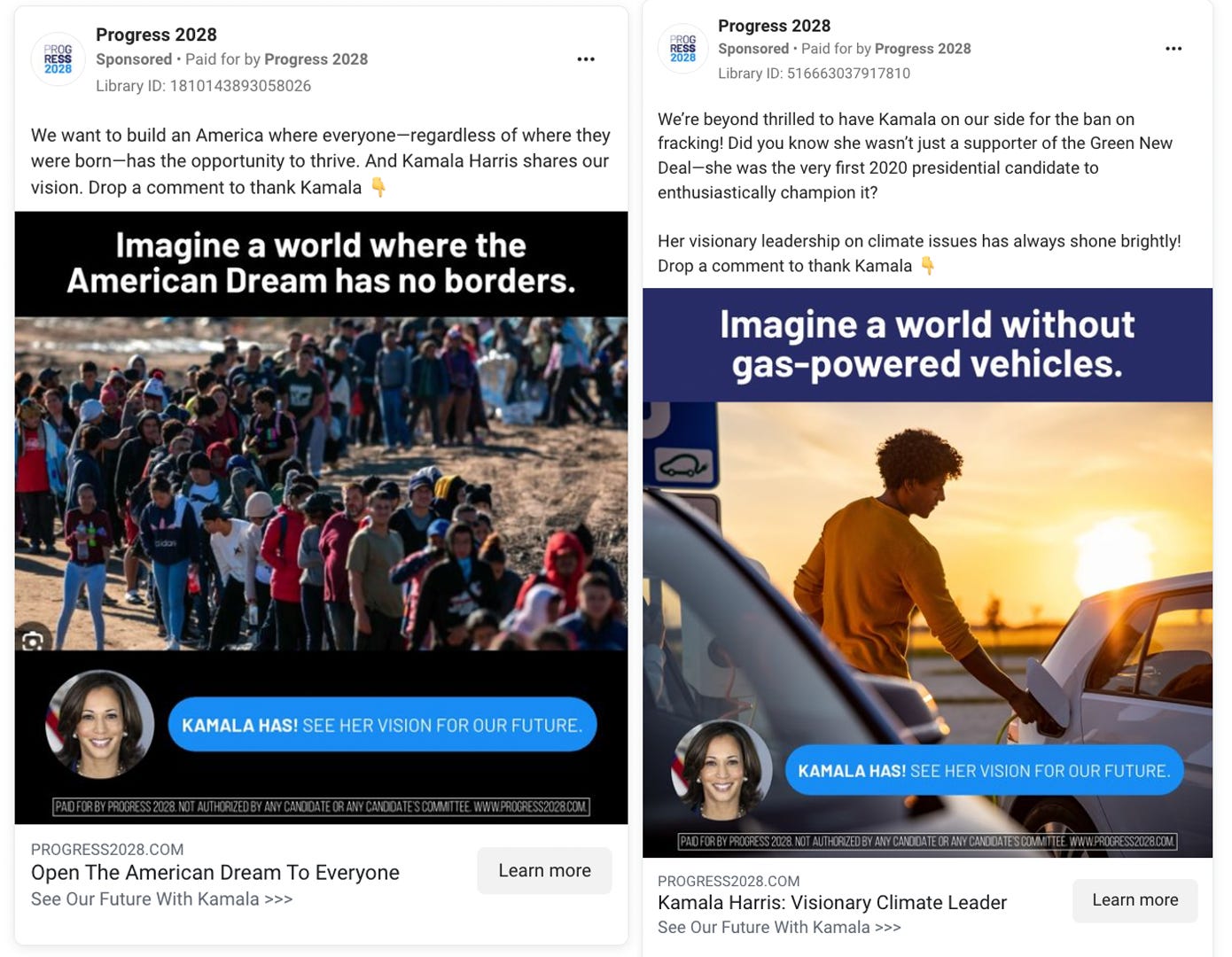

Musk’s PAC has also been paying for a fake “Progress 2028” campaign that pretends to be aligned with the Democratic Party. According to 404 Media, the operation has spent more than $500,000 on Meta ads touting made-up Kamala Harris policy stances likely to be unpopular with conservative voters.

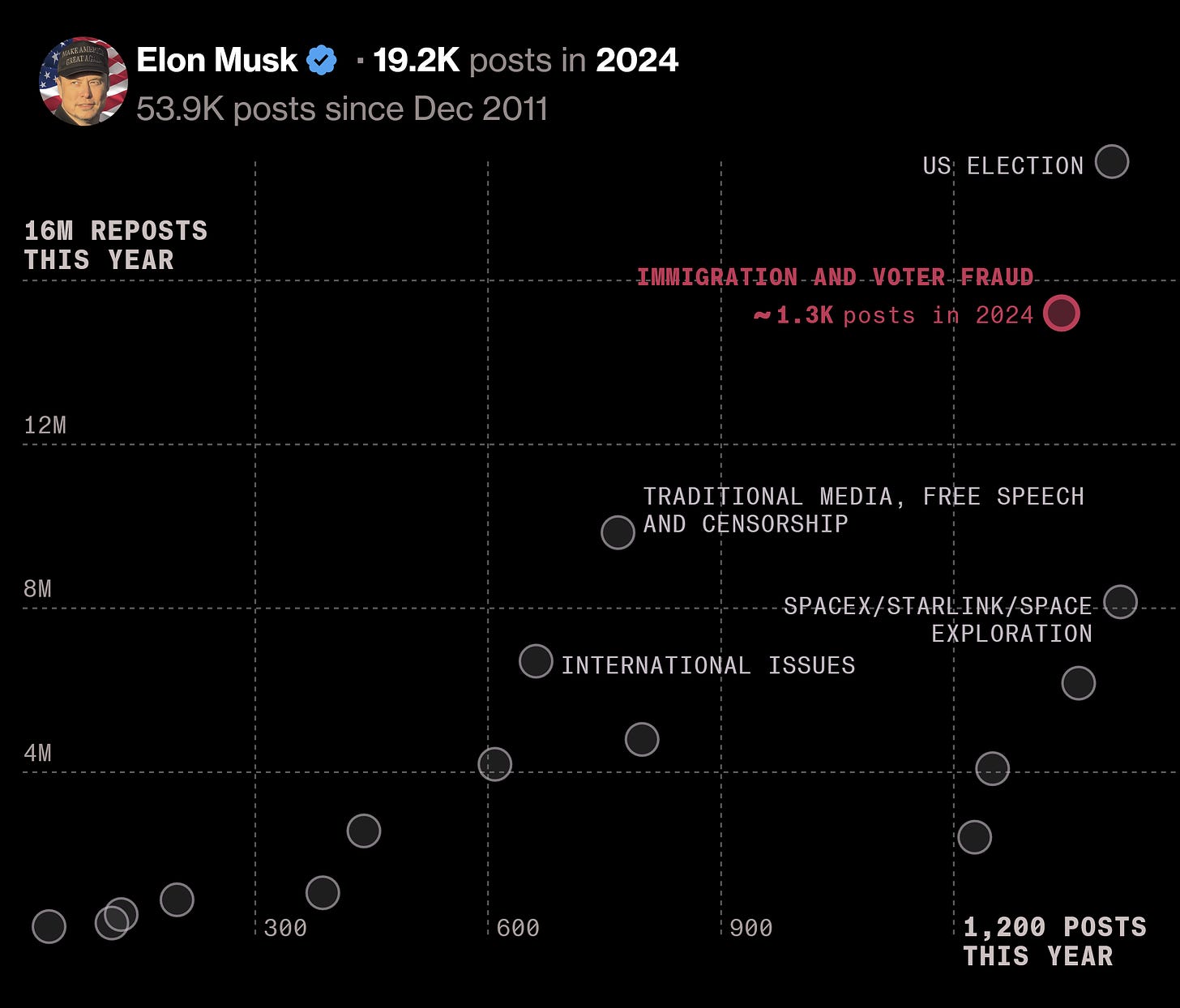

Elon Musk got the memo. Bloomberg’s analysis of the billionaire’s tweets found 1,300 posts like this one about “importing voters,” which together collected 10 billion views.

If polls are correct, election night will wrap up without a clear winner. Still, many of the main conspiracy theories that will dominate the following months will emerge during and immediately after November 5th. Here’s what I’ll be doing to keep track:

- Monitoring my conspiracy theory burner accounts on Instagram and TikTok, which I’ve been priming by searching for the election rumors tracked by the University of Washington’s Center for an Informed Public.

- Tracking whether content on Truth Social (where Donald Trump posts most frequently) hops over to more mainstream social networks.

- Looking at rumors in the “election integrity community” set up by Musk’s Super PAC on X. I’m especially interested in the interplay between claims in this group and Community Notes, the crowd-checking tool that the platform has just “re-architected” to enable quicker turnaround times.

- Searching for rumors about the 13 bellwether counties identified by the Cook Political Report, given that closer contests will inevitably spark closer scrutiny (and conspiracy theorizing).

- Looking for relevant context about all of the rumors above on Google’s Fact Check Explorer, the website of the National Association of Secretaries of State, and lists of disinfo beat reporters, election lawyers, and election analysts.

What’s your plan? Share in the comments or via email.

I’ll see you all on the other side, no doubt with oodles of contested claims of voter fraud to wade through.

DETECTION DISTRACTION

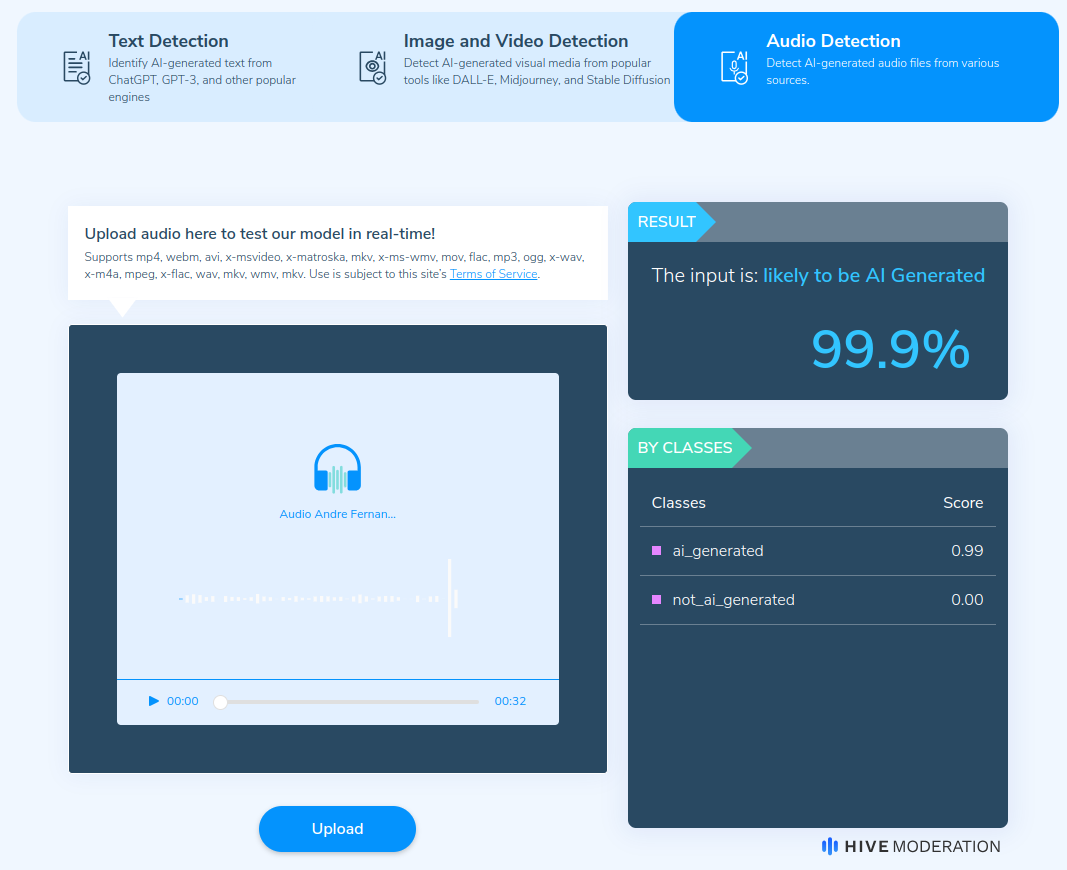

Lupa sought to verify a controversial clip purporting to capture Fortaleza mayoral candidate André Fernandes discussing a scheme to purchase votes. Two of the three tools that the Brazilian fact-checkers used claimed the audio was AI-generated. The third said it was real. As with last week’s case of “Matt Metro,” detectors proved insufficient to definitively resolve an authenticity quandary.

Separately, employees at buzzy cybersecurity startup Wiz were targeted by a deepfaked voice message, purportedly from CEO Assaf Rappaport, that tried to obtain their credentials. The attack appears to have failed because the replica was trained on Rappaport’s public speaking, which is reportedly more anxious-sounding than his tone with staff. (For what it’s worth, I think we should abolish all audio notes.)

Overall, though, humans don’t appear all that well equipped to detect faked voices.

In a preprint, Sarah Barrington and Hany Farid at UC Berkeley used ElevenLabs to clone 220 speakers. They then had survey respondents judge whether two clips came from the same person and whether either of them was deepfaked. In almost 80% of cases, a real voice and its audio clone were deemed to be from the same speaker (real clips of the same voice were correctly attributed to the same person 92% of the time).

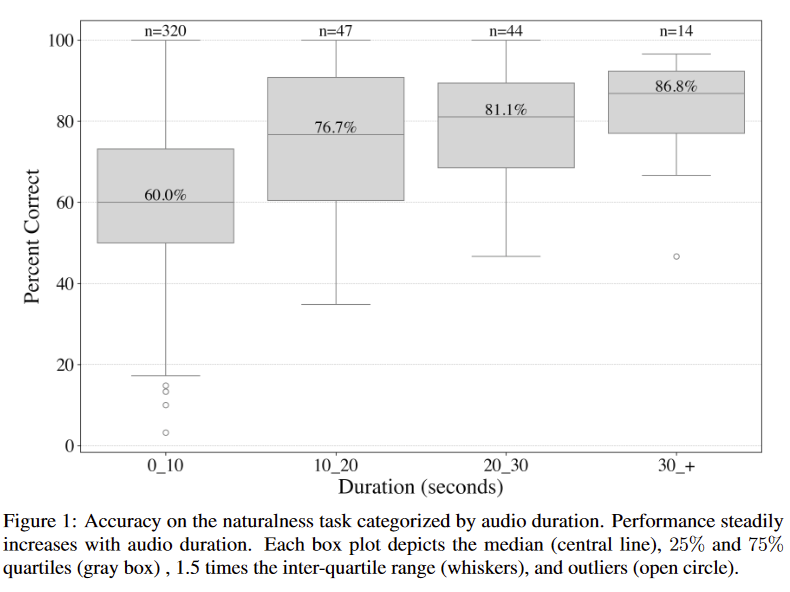

Slicing the data another way, Barrington and Farid found that respondents correctly flagged an audio clip as synthetic 66.3% of the time. That is not much better than flipping a coin. The silver lining is that accuracy appears to increase with clip duration, though longer clips were relatively rare in the study, so we can’t tell for sure.

FACTS MATTER

Add two more studies to the pile showing that, at least in a lab setting, individuals are open to corrections.

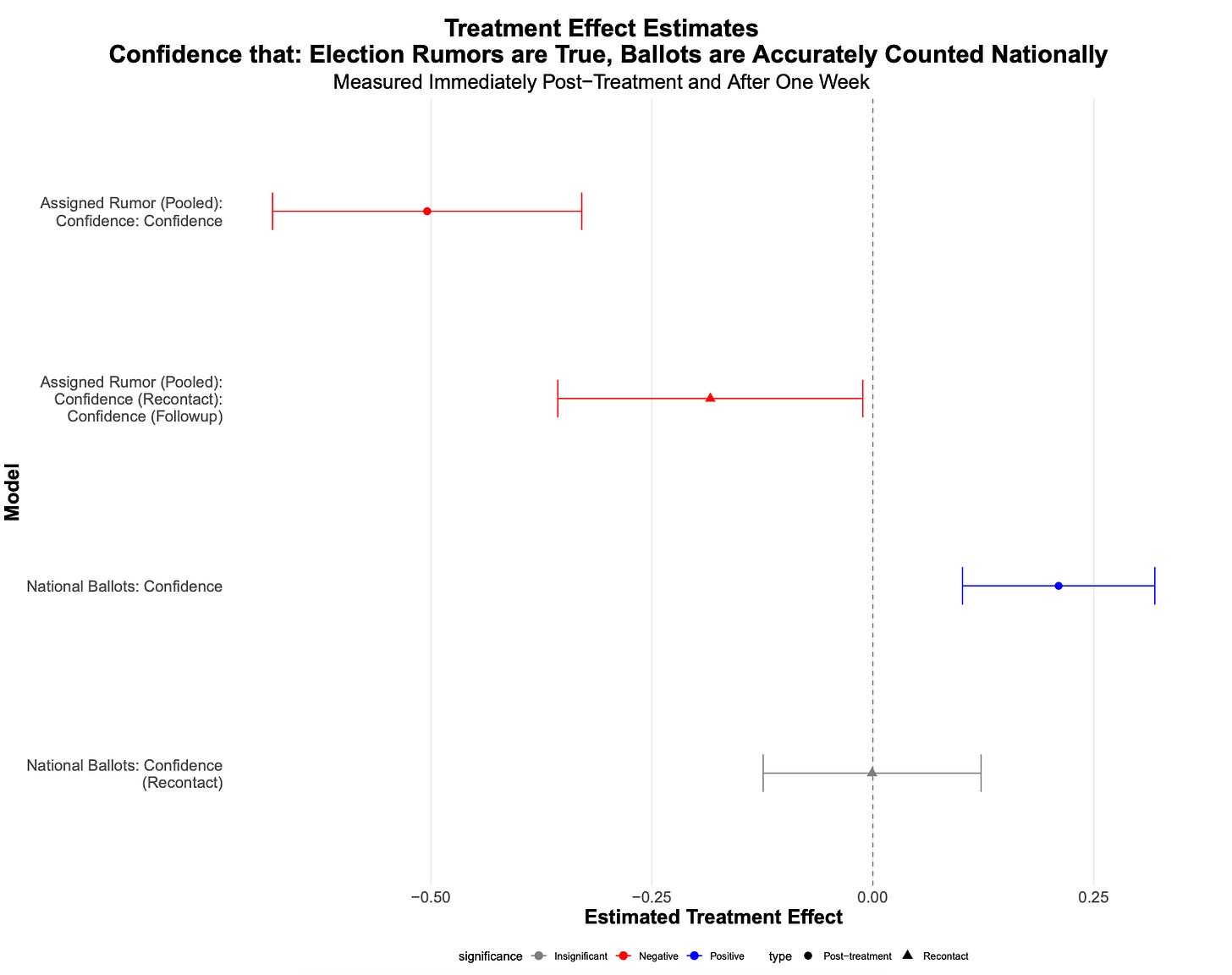

In a preprint, Linegar et al. test the efficacy of an AI-generated “prebunking” article¹ on five common US election falsehoods. On average, prebunks reduced belief in these myths by 0.5 points on a 10-point scale, while also improving confidence in election integrity. Democrats and Republicans did hold these false beliefs to differing degrees, but preemptive corrections worked regardless of party affiliation.

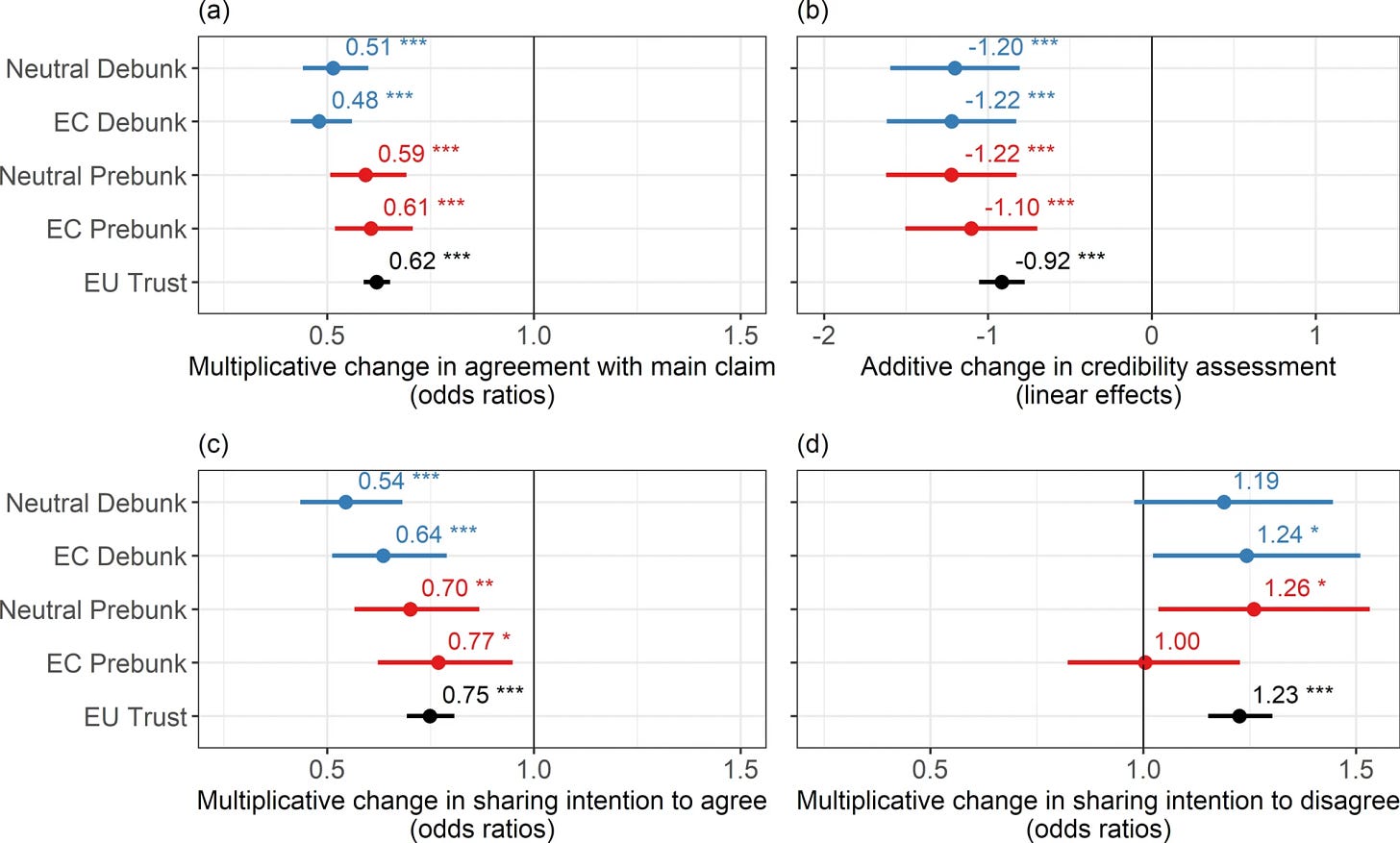

An earlier peer-reviewed paper by Bruns et al. looked at corrections of misinformation about COVID-19 or climate change in Germany, Greece, Ireland, and Poland. The paper tested two variables: the timing of the correction (before or after the user was served an article making false claims on one of these topics) and the correction’s source (either absent or attributed to the European Commission).

The authors conclude that for most conditions tested and in most locales, corrections worked (see the aggregate results in the chart). Overall, debunking had a slightly bigger effect than prebunking. The effect of corrections was pretty large, too, reducing strong agreement with the main false claims by almost half. Greater trust in the EU was also associated with greater acceptance of the correction attributed to the Commission, though the authors caution that the study design may have affected this finding.

FRONT PAGE FAKES

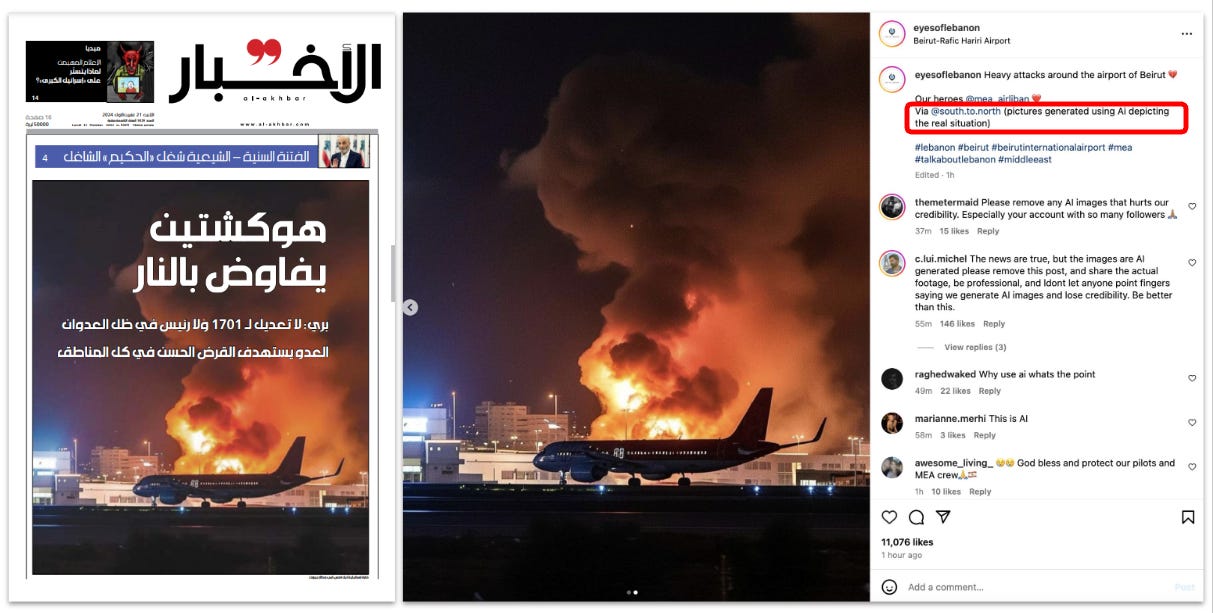

The Lebanese tabloid Al Akhbar ran an unlabeled, likely AI-generated image of Israeli blasts near the Beirut airport on the front page of its October 21st edition. The picture had previously been shared by an Instagram account alongside other images it described as “pictures generated using AI depicting the real situation.”

Breaking news events have always given media outlets opportunities to pick up unverified viral imagery. Nine years ago, a Spanish newspaper put an edited selfie of a Canadian gaming critic on its front page, presenting him as a supposed perpetrator of the terrorist attacks in Paris that included the Bataclan theatre.

Obviously, Al Akhbar’s error is less serious. For one, it didn’t frame an innocent man. Still, after last year’s Adobe Stock fiasco, this is another vivid example of AI being used to depict violent events in the Middle East, cheapening true tragedies with fake images.

HOW MUCH SLOP IS TOO MUCH SLOP?

Wired published a pretty thoughtful analysis of the prevalence of AI-generated content on Medium. The headline finding is that somewhere between 40 and 48 percent of posts on the publishing platform may have been written by AI.

Now, as every discerning reader of this newsletter knows only too well, AI detectors are flawed instruments. Still, both of the detection companies Wired worked with tried to control for this by running their detectors on Medium content published before ChatGPT was a thing and on other news sites. The prevalence in those cases was below 10 percent. So something does appear to be going on here.

Medium CEO Tony Stubblebine, who in several ways has charted an AI-skeptical path, pushed back on the idea that these findings are either novel or significant. Stubblebine says Medium’s own metrics show that AI-generated content has increased tenfold this year, but that it mostly gets no views.

“I think you could, if you're being pedantic, say we're filtering out AI—but there's a goal above that, which is, we're just trying to filter out the stuff that's not very good,” he told Wired.

This origin-agnostic, quality-first position is one that Google decided to take with Search as well. (Hell, it’s probably the position I’ll be taking when grading my students’ assignments.)

At the end of the day, it doesn’t really matter whether a post is AI-generated if its overall value add is zero because it’s some form of affiliate marketing scheme.

But I can’t see how scale isn’t going to play a role here. Perhaps platforms can beat AI slop when it comes to the top 1% of content they recommend and rank highly. But we all also go online to explore on our own, not just to be fed content. If doing so increasingly strands us on continent-sized AI garbage patches, I worry it will sap our capacity to discover worthwhile new things that aren’t popular or vetted.

NOTED

- American creating deepfakes targeting Harris works with Russian intel, documents show (WaPo)

- Coordinated Reply Attacks in Influence Operations: Characterization and Detection (arXiv)

- Deceptive Delight: Jailbreak LLMs Through Camouflage and Distraction (Unit 42)

- Researchers say an AI-powered transcription tool used in hospitals invents things no one ever said (AP News)

- Medical large language models are susceptible to targeted misinformation attacks (Nature)

- Data Void Exploits: Tracking & Mitigation Strategies (CIKM), read alongside Google, Microsoft, and Perplexity Are Promoting Scientific Racism in Search Results (Wired)

- Generative AI Will Increase Misinformation About Disinformation (Lawfare)

BEFORE YOU GO

C2PA should get on this.

¹ Prebunking articles expose manipulation techniques common to many kinds of misinformation and are a popular intervention for building resilience against falsehoods.
