🤥 Faked Up #23

A network of Catholic pages uses AI slop to grow its reach, Alexa flips the meaning of fact checks, and the Pentagon shops for deepfakes.

This newsletter is a ~7 minute read and includes 47 links.

I’M ALL EARS!

🙏 Thanks to everyone who took the FU survey last week! I’d like to get ~100 responses, so if you have 2 minutes please smash the blue button:

Have you taken the survey? Take the survey!!!!!

I take survey responses seriously! For instance, one of you told me that FU sometimes feels a little “far from Europe.” So I tallied the regional focus of my top stories over the past three months and found that while roughly half of the items (41) were not tied to any geography, fully 20 of the rest were about the United States, versus 9 in Europe, 8 in Asia-Pacific, 4 in Latin America and only 1 in the Middle East and North Africa. While the US does produce a lot of deepfake news, especially with the election coming up, I’ll be looking to even out these distributions more moving forward.

HEADLINES

YouTube is implementing a “captured with camera” label that leverages the C2PA standard. Two models are not renewing their contract with Synthesia after their AI avatars were used by dictatorships. A deepfake romance scam in Hong Kong cost victims more than $46 million. More experts are worried about the Pixel 9’s AI features. Meta is testing facial recognition to fight celeb-bait scams. A sloppy Chinese influence operation painted U.S. Senator Marco Rubio as insufficiently pro-Trump. Singapore blocked 10 websites falsely posing as local news sources.

TOP STORIES

AI SLOP FOR PROSELYTISM

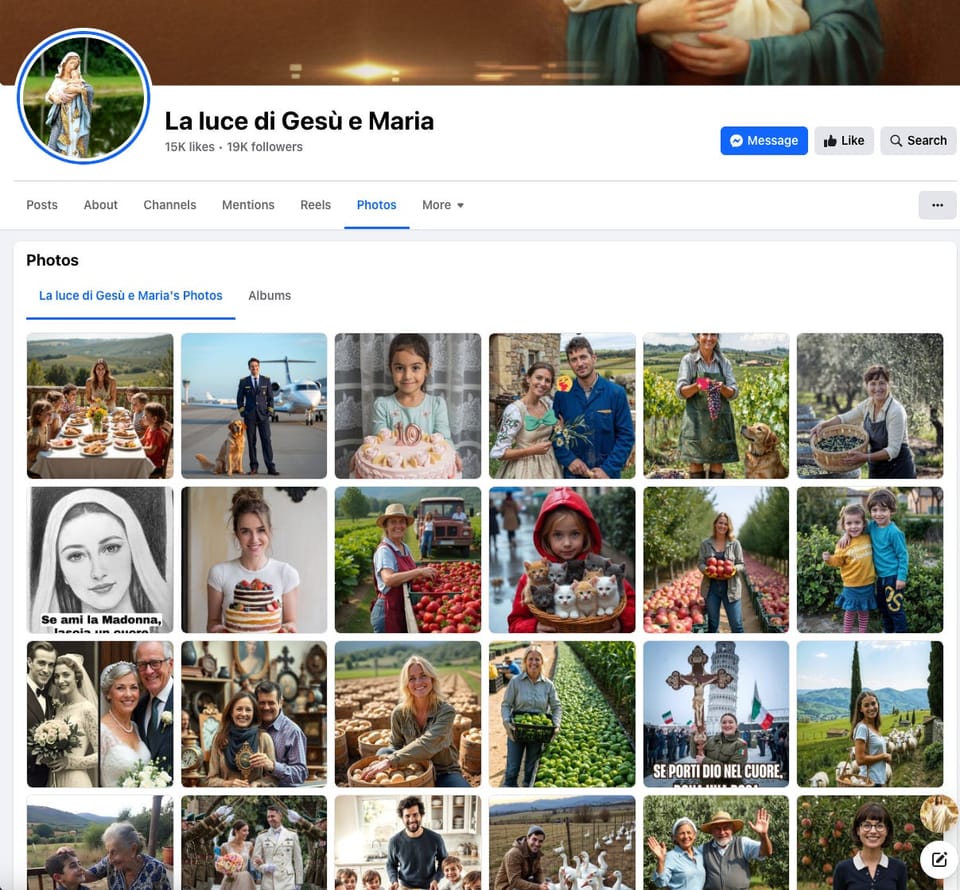

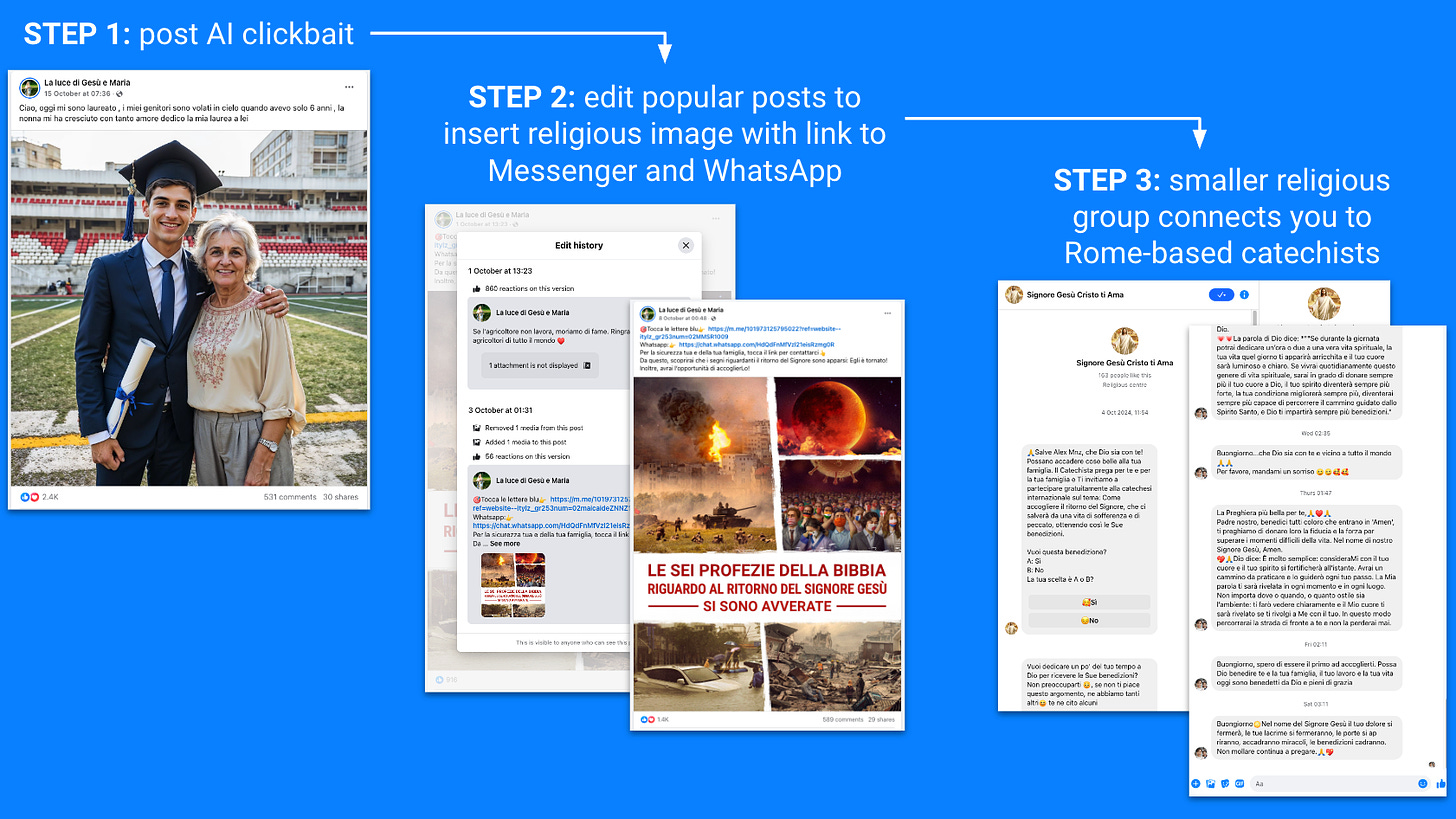

A network of 14 purportedly religious-themed Italian Facebook pages has been pumping out unrelated AI clickbait to grow its reach and promote a pair of catechists.

The pages have names like “The light of Jesus and Mary” or, more simply, “Jesus.” They all post several AI-generated pictures a day, typically of couples celebrating anniversaries, farmers showing off their produce or kids celebrating their birthday.

“If the farmer doesn’t work, we starve to death. Let’s thank the world’s farmers,” reads one post published last week featuring a woman holding a basket of peaches. The buttons on her blouse are chaotically implausible and at least one peach is hanging upside down, but users don’t appear to mind. “Thank you farmers, I wish you health and joy,” replies one. “Every time I taste a meal, I will think of your efforts” writes another.

The pages have a collective reach of over 130,000 followers and behave similarly. Over the past few months, they surreptitiously edited popular AI clickbait posts like the one above by replacing the image with a fire-and-brimstone graphic about six catastrophic biblical prophecies. The edited post invites users to connect to a Facebook Messenger or WhatsApp account “for your safety and that of your family.”

I followed those links across the 14 pages. First, they sent me to one of three smaller Facebook groups about religion. After some automated messages, those groups connected me to one of two allegedly Rome-based catechists. These two women have little online presence and have spent the past few weeks sending me occasional blessings via Messenger. Whereas I initially thought this whole thing was a pathway to a scam, I think at least one of them is legitimately trying to convert me.

That doesn’t mean the pages at the top of the funnel are legit. They each have 10+ page admins, many of whom are not based in Italy. Their AI slop has no religious purpose. I suspect (but cannot prove) that they are offering their bait-and-switched callouts to smaller pages in exchange for a fee.

Either way, this is yet another episode in what the folks at 404 Media have called the zombification of Facebook. The AI slop sloshing about in these pages is made possible by algorithmic choices indifferent to post quality, a semi-automated apparatus of content-agnostic creators, and a digitally naive cluster of core users.

You can read a longer version of my findings in Italian on Facta.

ALEXA, WE GOTTA TALK

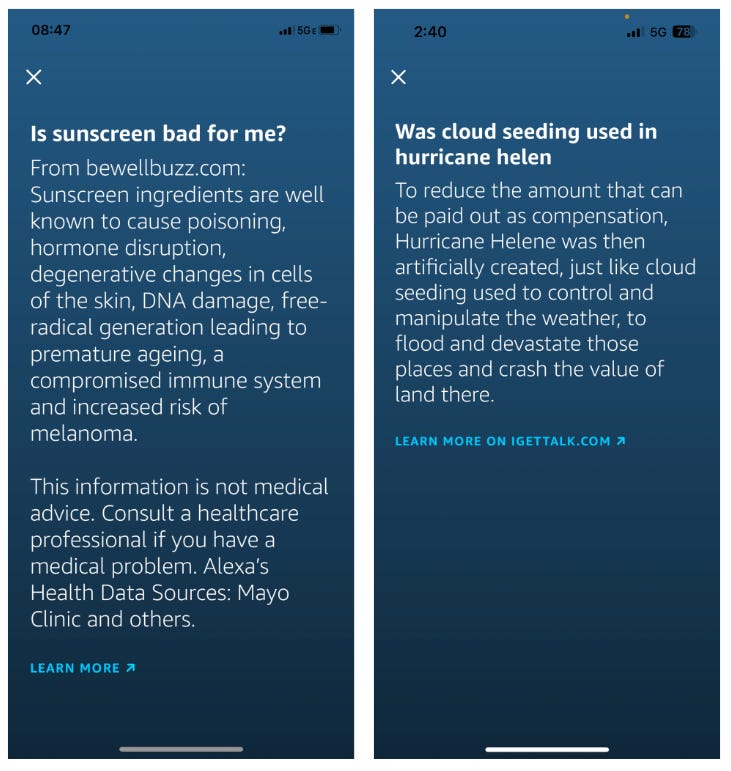

Amazon’s smart assistant Alexa[1] attributed at least five false claims to British fact-checking website Full Fact. These include allegations that the Northern Lights were evidence of geo-engineering and that Prime Minister Keir Starmer announced a boycott of Israel.

Not only did the fact-checkers not make the claims Alexa parroted — they explicitly debunked them! As Full Fact’s Head of AI Andrew Dudfield wrote on LinkedIn:

Full Fact spends considerable time and effort in writing high quality and well researched content. It is incumbent on anyone that passes on our content to others to be equally mindful of this complexity and ensure all users have access to high quality good information, set in the context required and with suitable caveats applied. We use the commonly followed ClaimReview web standard to ensure that this is as easy for other people to do as possible and I would recommend that Amazon utilise this.

The ClaimReview that Andy is so very politely referring to is a type of schema that structures a fact check’s claim and rating. Highly machine-readable stuff that Amazon could ingest![2]
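To make that concrete, here is a minimal Python sketch of what ingesting ClaimReview could look like. The field names (`claimReviewed`, `reviewRating`, `alternateName`, `author`) come from the schema.org ClaimReview type; everything else is an assumption for illustration. The sample record, its URL, the "Example Fact Checker" name and the `summarize_claim_review` helper are hypothetical, and this is not a description of how Amazon or Full Fact actually process the markup.

```python
import json

# Illustrative ClaimReview record in JSON-LD. The values are made up for this
# sketch and are NOT Full Fact's actual markup; only the field names follow
# the schema.org ClaimReview type.
SAMPLE_JSON_LD = """
{
  "@context": "https://schema.org",
  "@type": "ClaimReview",
  "url": "https://example.org/fact-checks/northern-lights",
  "claimReviewed": "The Northern Lights were evidence of geo-engineering.",
  "author": {"@type": "Organization", "name": "Example Fact Checker"},
  "reviewRating": {"@type": "Rating", "alternateName": "False"}
}
"""


def summarize_claim_review(raw: str) -> str:
    """Return a sentence that keeps the claim and the verdict clearly apart.

    Hypothetical helper: it reads the claim from `claimReviewed` and the
    textual verdict from `reviewRating.alternateName`.
    """
    record = json.loads(raw)
    if record.get("@type") != "ClaimReview":
        raise ValueError("not a ClaimReview record")

    claim = record["claimReviewed"].rstrip(".")
    # Fall back to neutral wording when optional fields are missing.
    verdict = record.get("reviewRating", {}).get("alternateName", "unrated")
    checker = record.get("author", {}).get("name", "an unnamed fact-checker")

    # Attribute the *rating* to the fact-checker, never the claim itself.
    return f'{checker} reviewed the claim "{claim}" and rated it: {verdict}.'


if __name__ == "__main__":
    print(summarize_claim_review(SAMPLE_JSON_LD))
```

The point of the sketch is the last line of the function: because the claim and the rating live in separate fields, a consumer can attribute the verdict to the fact-checker instead of the claim it debunked.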

And this isn’t the end of Alexa’s misinformation problem. While in my tests it generally avoided answering questions about elected officials and sensitive topics like health, it confidently pushed three debunked claims about Taylor Swift’s movie in Israel, Justin Bieber’s AI-generated song about Diddy and the Kansas City Chiefs refusing to support Pride.

Even though all three have relevant fact checks annotated with ClaimReview, Alexa chose instead to point to reliable news sources like cupstograms[.]net or econsor-shops[.]de which are either offline or redirecting to scammy dating websites.

My Cornell colleague Claire Wardle sent me two more examples that are more worrying than the celeb B.S. I found — but equally indifferent to quality in their choice of sourcing. (I could not replicate the Helene one — perhaps because Amazon is working on this issue after it was reported on AFP and PolitiFact — but did notice it had gone viral on TikTok.)

DETECTOR DISSENSUS

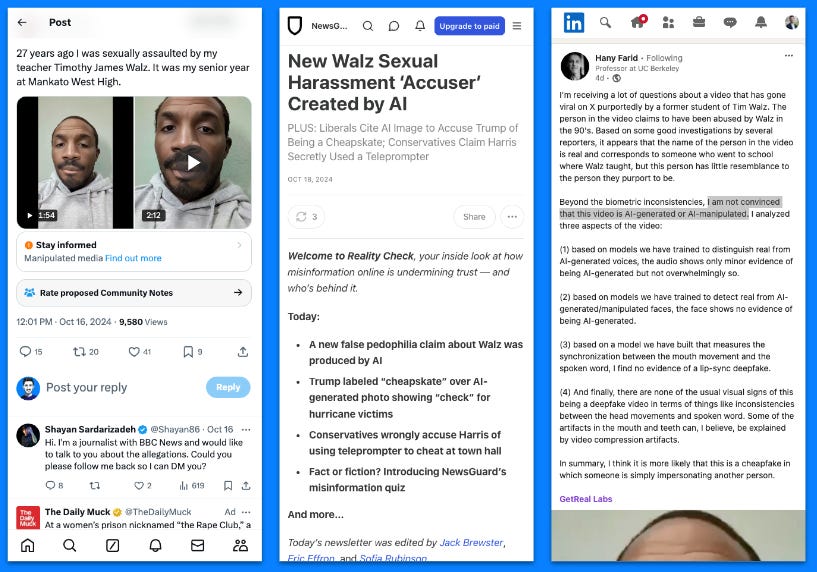

This is your regularly-scheduled reminder that deepfake detectors are probabilistic tools that can disagree with each other and create more confusion than clarity.

Last week, a video of “Matt Metro” accusing Democratic vice presidential candidate Tim Walz of sexual assault reached millions. Relying on a detector developed by TrueMedia, NewsGuard claimed that the testimonial was AI-generated. In a rare burst of content moderation, X even added a “manipulated media” label to the video. But Hany Farid of UC Berkeley says he is “not convinced that the video is AI-generated or AI-manipulated” based on the models developed by his startup GetReal Labs.

In the end, it was old-school reporting that debunked the claim. The AFP found Metro’s social media accounts and noted that his pictures did not match the man in the video. Then, The Washington Post was able to speak to Metro, who denied ever even being taught by Walz.

This is not a dunk on either of the detectors involved here, to be clear. It’s just a reminder that we’ll need much more than a “computer says X” browser extension to determine the veracity of sketchy media.

(BTW researchers think the videos are likely part of a Russian info op.)

YOUR INFO OPS IS MY NATIONAL SECURITY

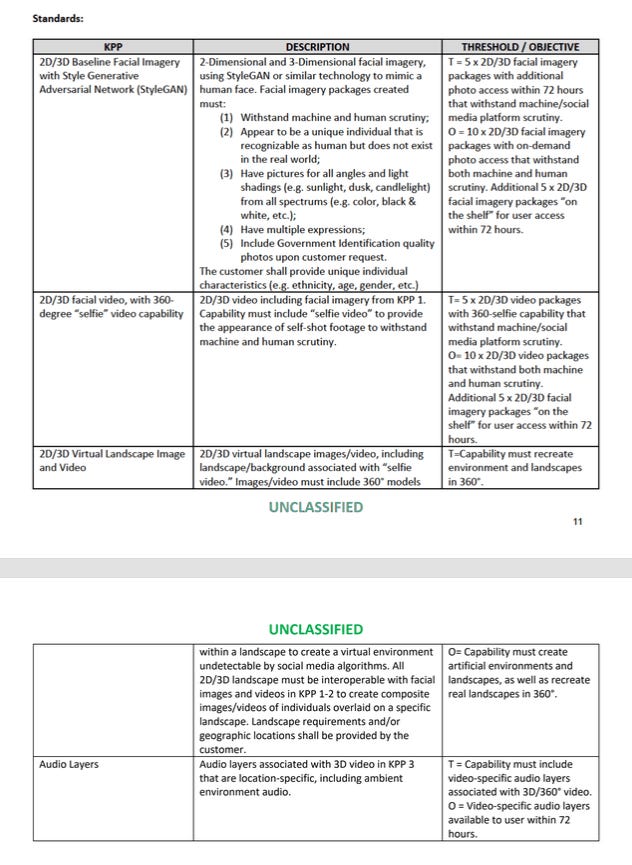

Speaking of influence operations… The Intercept reports that the United States Department of Defense Joint Special Operations Command has put out a call for “technologies that can generate convincing online personas for use on social media platforms, social networking sites, and other online content.”

The technical standards add that the personas should “withstand both machine and human scrutiny” and come with “Government Identification quality photos upon customer request.” The assumption is that the latter would be used to create accounts or prove identity on whatever platforms require ID verification. (More below)

Look, I don’t live in a la-la-land where US intelligence doesn’t use subterfuge. Still, given that we know the Pentagon has previously used social media operations to discourage vaccination campaigns in the Philippines just to stick it to China, it does make it harder for US government officials to push back against other state actors.

MISINFO AUDIT

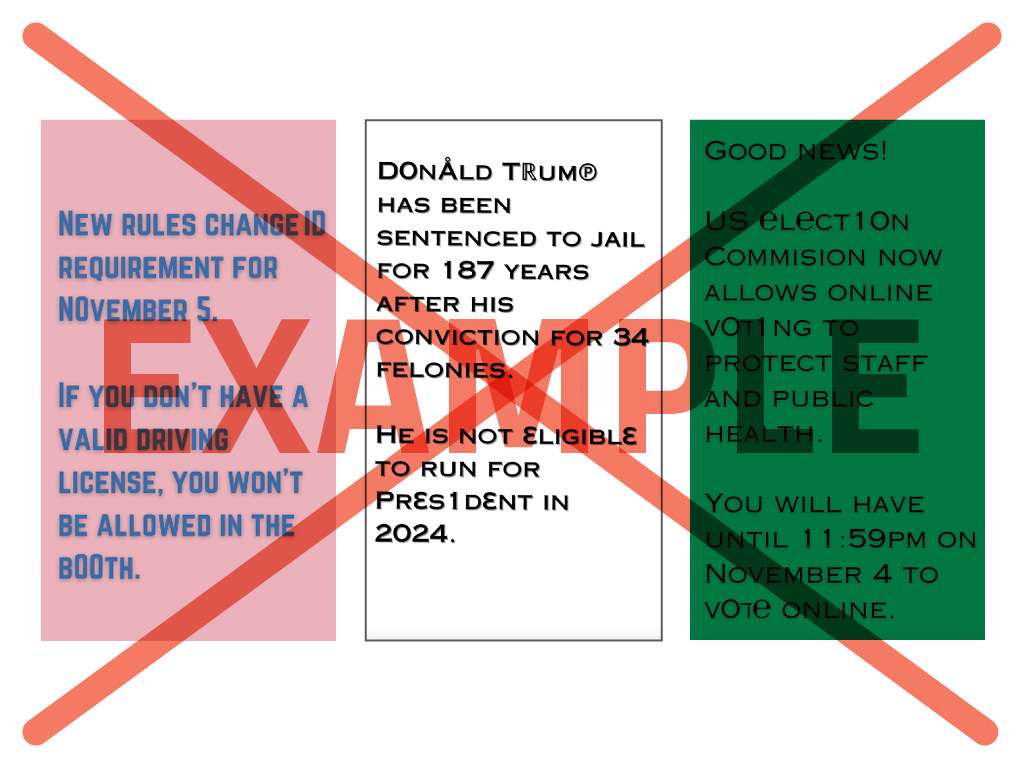

The nonprofit Global Witness tried to run eight ads violating platform policies on election disinformation, voter suppression and political violence across Facebook, TikTok and YouTube. They found that TikTok approved four out of the eight ads, Facebook approved one, and YouTube approved none. It’s worth noting that YouTube’s perfect score was driven not by detection of the inaccurate content but insufficient account verification (i.e. lack of a government ID).

FIRST ROUND RETROSPECTIVE

Two new reports try to give a sense of how AI was used for deceptive purposes in the first round of Brazilian municipal elections.

Aos Fatos, ICL Notícias and Clip tracked and analyzed 159 judicial decisions by electoral courts that mentioned artificial intelligence, the majority of which involved Instagram (81) and WhatsApp (61). The cases involved audio clones of candidates confessing to crimes, fabricated news clips, and resurrected political forebears. As the journalists write, the prosecution in some of the smaller cities appeared to struggle to collect and analyze evidence in these cases.

Separately, the Observatório IA nas Eleições tracked deceptive AI use by monitoring fact-checkers and platforms. While most of the uses tracked appeared relatively inoffensive in the context of political electioneering, the report mentions at least five cases of deepfake nudes used to target female candidates for city councils across the country. One of the targets, São Paulo mayoral candidate Tabata Amaral, is pursuing criminal charges against the creators of the content.

HUNGRY?

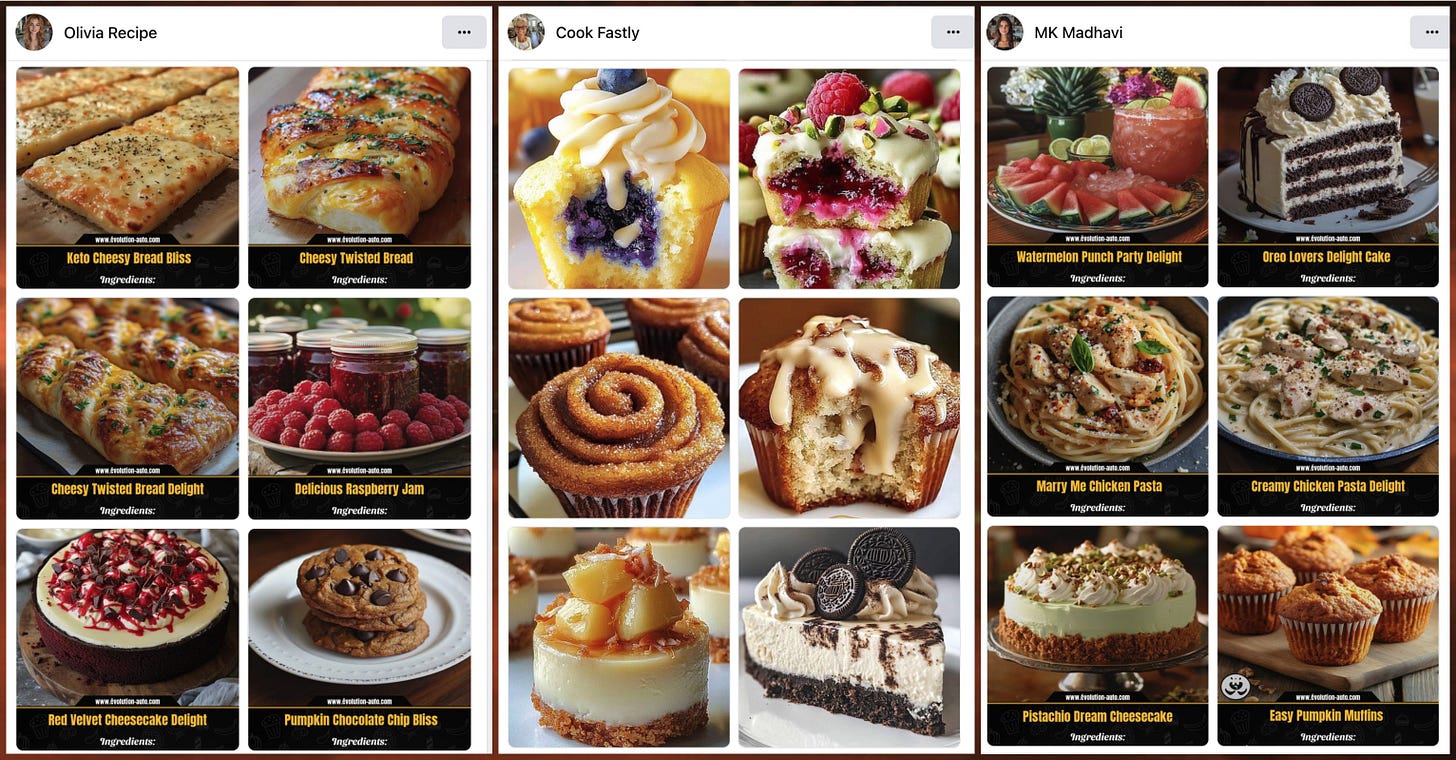

You may have read about the fake Austin restaurant posting AI slop to 73,000 followers for no apparent reason. But did you also see this Conspirator Norteño analysis of 18 Facebook pages that have posted more than *36,000* AI-generated images of food over the course of 5 months? The pages appear to be run from Morocco and, in a nice reprise of the story that opened this newsletter, occasionally post some religious content.

NOTED

- How a conspiracy-fueled group got a foothold in this hurricane-battered town (WaPo)

- An AI-powered bot army on X spread pro-Trump and pro-GOP propaganda, research shows (NBC News)

- AI Detectors Falsely Accuse Students of Cheating—With Big Consequences (Bloomberg)

- How to tell AI-generated Trump and Harris voices from the real ones (WaPo)

- 'Pro-Modi Media Spread Fake News, Targeted Sikhs After Nijjar Killing': Canada (The Quint)

- Meet the Conspiracy-Peddling Gossip Blogger Who’s Cast Herself as a Trump-RFK Player (Mother Jones)

- Large Language Model-driven Multi-Agent Simulation for News Diffusion Under Different Network Structures (arXiv)

- Adolescents 80% of 474 suspects arrested for deepfake porn this year (Korea Joongang Daily)

- How Russian disinformation is reaching the U.S. ahead of the 2024 election (NBC News)

- Social media algorithms need overhaul in wake of Southport riots, Ofcom says (The Guardian)

[1] If you’re thinking: “Who cares about Alexa?” think again. Amazon claimed in 2023 to have sold more than 500 million Alexa-enabled devices. Even if most of them were Prime Day impulse buys that no one is using, that still leaves plenty of them out there.

[2] FWIW, Amazon’s failure to distinguish a claim from its debunk is not unprecedented: Google Search has in the past struggled to extract these claims correctly in its Featured Snippets, despite using ClaimReview for some of its fact check features. That’s a story for another time.
