🤥 Faked Up #19

Bombay High Court strikes down India's government fact-checking, Pixel's "Reimagine" lights the UN on fire, and X's NCII takedown requests get audited

This newsletter is a ~7 minute read and includes 43 links.

THIS WEEK IN FAKES

Most Americans are worried about AI election fakes. Global experts don’t love what generative AI is doing to the information environment. Singapore is considering a law giving election candidates an avenue to flag deepfakes. MrReagan (see FU#11) is suing California over its new deepfake laws. South Korean police committed to spend US$2 million on detectors for deepfake audio and video. Canada’s foreign interference committee held another round of public hearings. TikTok deplatformed Russian state media.

TOP STORIES

FACT-CHECKING: NOT FOR GOVERNMENTS

The Bombay High Court struck down the powers of the Indian government's Fact-Checking Unit (FCU). Casting the tie-breaking vote, Justice A.S. Chandurkar ruled that the unit (first established in 2019, but given the power to request the removal of false online content about the government earlier this year) was unconstitutional. Chandurkar argued that the law defined what counts as misleading too vaguely and that it was inappropriate for the government to serve as a “final arbiter in its own cause.”

Jency Jacob, Managing Editor of BOOM Fact Check, told me the decision was a “welcome step,” adding that government fact-checking units are “a dangerous trend where politicians are trying to appropriate fact checking due to the credibility built in the minds of the citizens thanks to the work done by independent fact checkers.”

Jacob thinks governments have “other tools at their disposal” to correct misinformation and should “leave fact checking to independent newsrooms who are trained journalists and follow non-partisan processes.”

AUDITING X

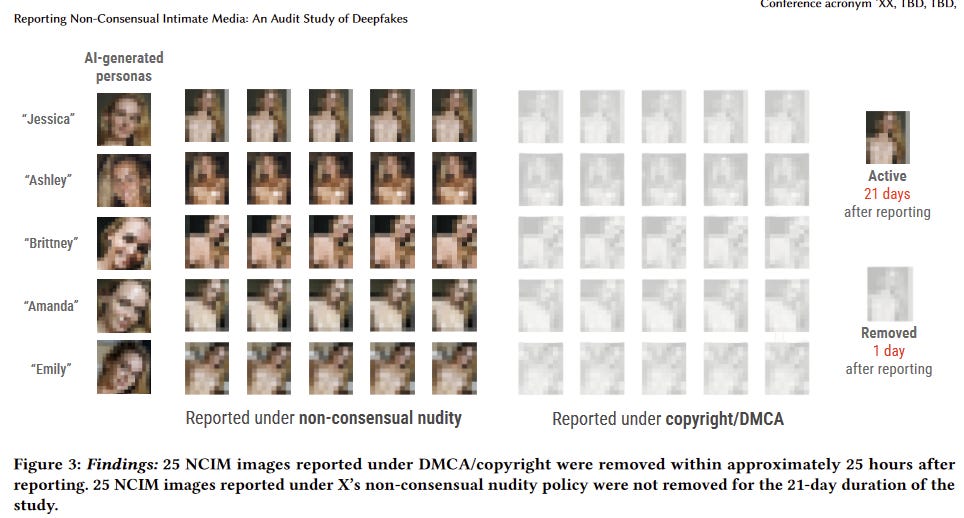

In a preprint, researchers at the University of Michigan and Florida International University tested X’s responsiveness to takedown requests for AI-generated non-consensual intimate imagery (NCII).

The researchers created 50 deepfake nudes of AI-generated personas and posted them from 10 different X accounts. They then flagged the images through the platform's own reporting mechanisms: half were reported to X as copyright violations and the other half as violations of the platform’s non-consensual nudity policy. While the images reported for copyright violations were removed within a day, none of the images flagged as NCII had been removed three weeks after being reported.

DEEPFAKE FACTORY

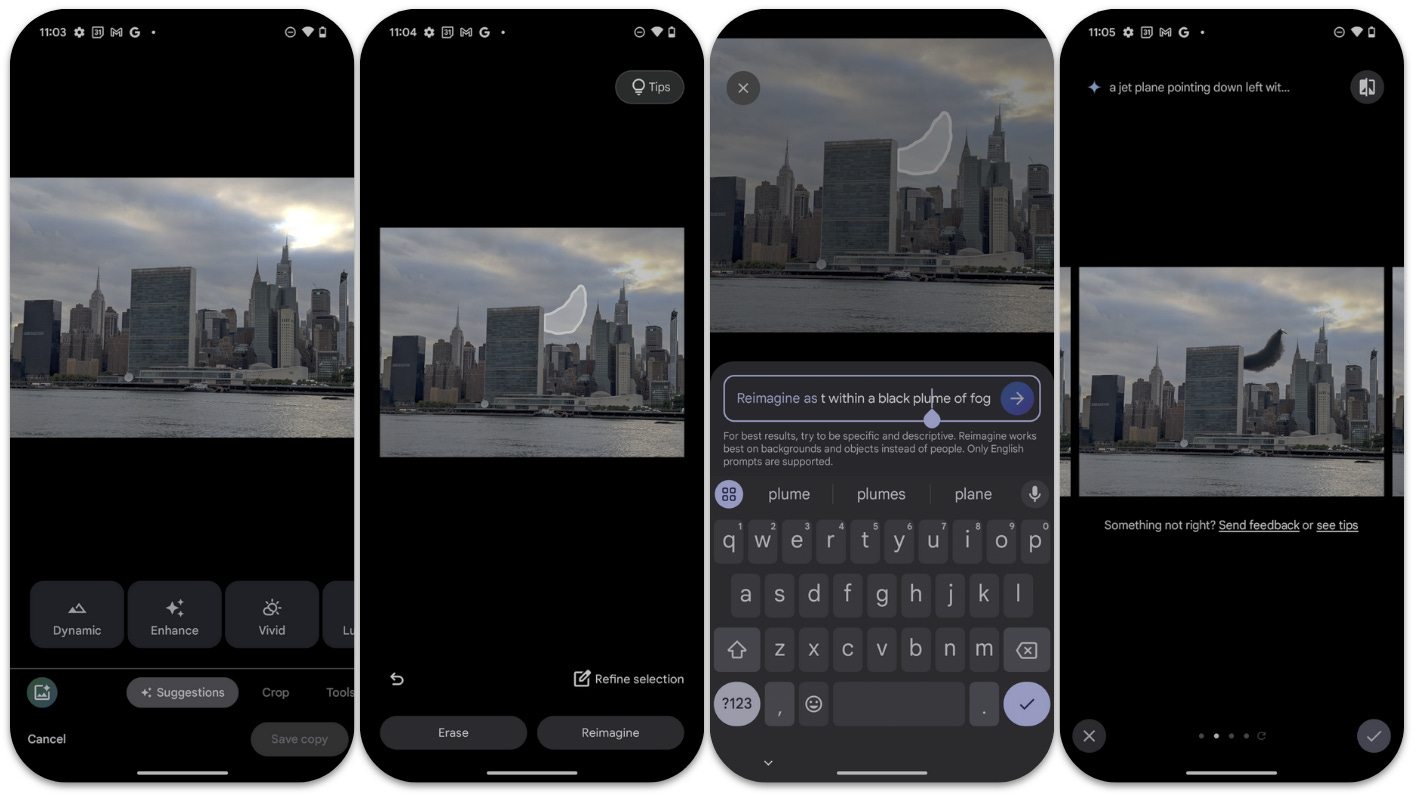

I got my hands on a Pixel 9 this weekend and played around with Reimagine, its AI-powered image editing feature (see FU#15). Because the United Nations General Assembly is meeting this week, I thought I’d try the feature on a picture I took of the UN building.

It was extremely easy, even for someone like me whose visual editing skills peak at Microsoft Paint, to add all kinds of problematic material to the photo. You simply circle the part of the photo you want to “reimagine” in the Photos app, type in a prompt, and then pick from four alternative versions of the edited photo.

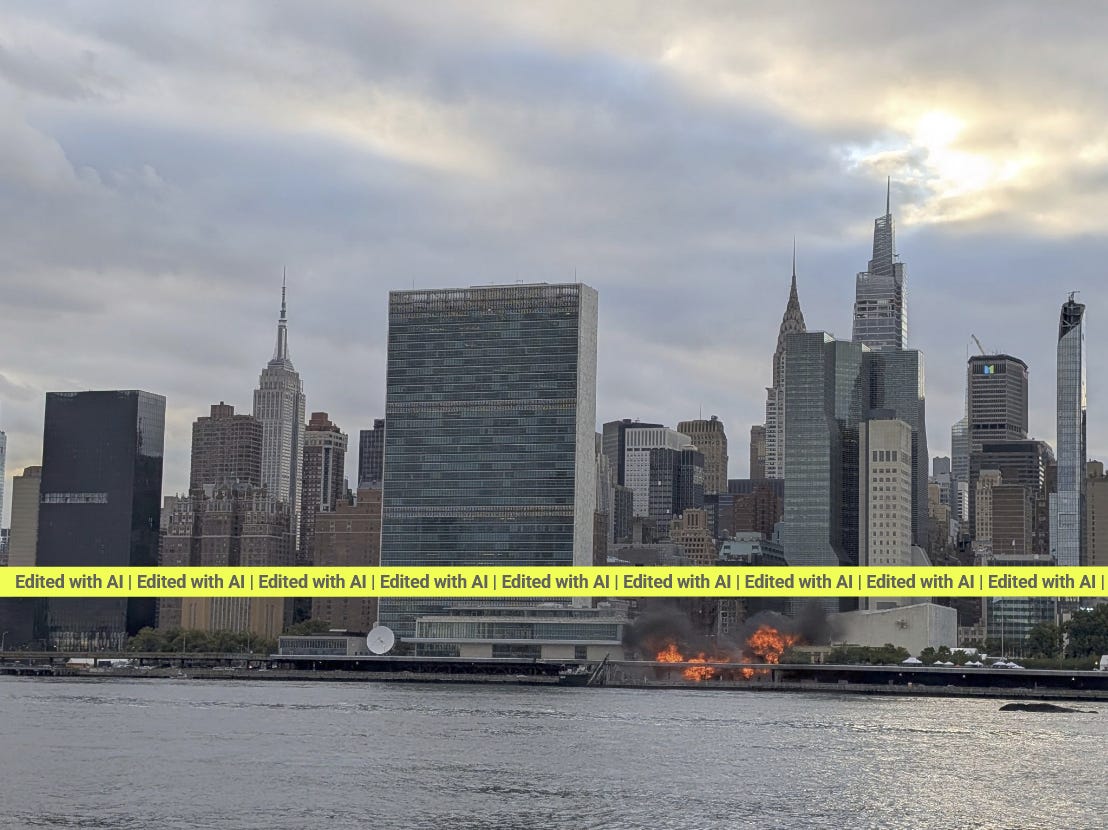

With very little effort, I was able to do some serious damage to the UN’s façade:

Reimagine didn’t balk at adding Bayraktar armed drones flying menacingly toward the building either.

Nor did getting a fire to envelop much of the General Assembly require me to get particularly creative with jailbreaking. (Google rejected “fire” in the prompt but “conflagration” was a-OK.)

As I wrote a month ago, the only good news here is that no one really owns a Pixel 9. But this stuff will soon be in starter phones, not just ones that sell for $869.

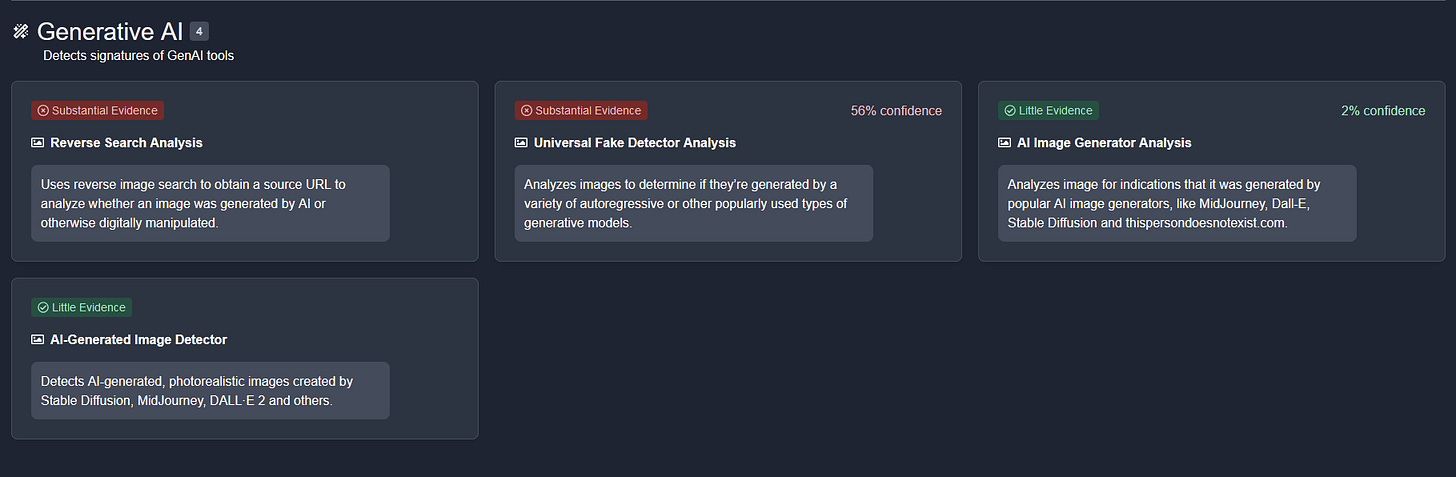

The good news is that even if an overexcited social media user falls for deceptively edited pictures like the ones above, there are tools that can help spot deepfakes, right? Eh… I tried TrueMedia’s free detector on the pictures above and the tool returned an “uncertain” verdict for all three. Here’s the feedback for one of them.

This isn’t a dig on TrueMedia, to be clear. I really appreciate that their tool is free and relatively clear about its limitations. And eight hours after I ran this test, the company sent me an email saying their human analysts have flagged all three pictures as likely manipulated. It’s just unlikely to be a solution for the general public.

The pictures also went undetected when I uploaded them to Instagram and Threads. As one “well, actually” reply to my post pointed out, this is working as intended: Meta hasn’t promised to label all AI content, only material that carries specific metadata.

But that’s exactly the point. Our current information ecosystem is such that it takes no time to create a compelling, potentially harmful deepfake on a personal device and spread it undetected on the world’s largest platforms.

In a piece for Tech Policy Press, Cornell Tech Associate Dean Mor Naaman and I wrote a bit more about what Google should do to de-risk the Pixel 9 ahead of the US elections. TL;DR: the phone should reject more prompts, geo-fence sensitive locations and add a visible label.

SPELLING, TESTED

Did you know that witches thrive on Etsy? According to Fortune, some of the most popular sellers of magic spells earn hundreds of thousands of dollars on the platform. Here’s a review from one satisfied customer of MysticMorwen’s custom spell ($27.79 — on sale from $69.47). The self-described witch does “love spells, money spells, protection spells, and more.”

Not everyone is pleased with MysticMorwen, however, including this fellow witch.

Witchcraft is a surprisingly hard thing for tech platforms to regulate. For one, a spell that doesn’t work out is unlikely to cause great harm (potions, I’m more worried about). In addition, the boundary between the occult and the religious can be hard to pin down in consistently enforceable policies. eBay bans the sale of intangible items “that buyers can't confirm that they've received,” including “a ghost in a jar, someone's soul and spells or haunted items.” Stripe, by contrast, reversed its stance on witches in 2021 and now mostly allows them to use its services.

YOU CAN’T FIGHT WHAT YOU DON’T KNOW

The British financial institution Starling Bank is launching a “safe phrases” campaign encouraging the public to set up a shibboleth with their loved ones to avoid falling for deepfake audio scams. A related survey of a representative sample of 3,010 UK adults found that 46% had never even heard of deepfake audio scams. There is a lot of digital literacy work to be done in this space.

UNITED IN CONSPIRACY

A standout piece from the BBC on two people who believe the conspiracy theory that the assassination attempts on Donald Trump were staged.

The catch is that the two Colorado-based women interviewed by the British state broadcaster are at the opposite ends of the political spectrum. One is “a passionate supporter of racial and gender equality who lives with a gaggle of rescue dogs and has voted Democrat for the past 15 years” and the other a QAnon follower who “posts videos to 80,000 followers about holistic wellness and bringing up her little girl” under the alias Wild Mother.

Both report having come to their conclusions based on information gleaned from social media.

WE NEED THOSE ACADEMIC RESEARCHERS

Two notable quotes from the US Senate Select Committee on Intelligence hearing on the responsibility of tech providers to protect elections from foreign threats (transcript | video).

Democratic Senator Mark Warner:

And finally, we've seen a concerted litigation campaign that has sought to undermine the federal government's ability to share this vital threat information between you guys and the government and vice versa. And frankly, a lot of those independent academic third-party checkers have really been bullied in some case or litigated into silence. For instance, we've seen the shuttering of the election disinformation work at Stanford's Internet Observatory as well as the termination of a key research project at Harvard's Shorenstein Center. We need those academic researchers in the game as that independent source. And again, this is a question that really bothers me and I know we may litigate this a bit. Too many of the companies have dramatically cut back on their own efforts to prohibit false information. And again, we're talking about foreign sources.

Republican Senator Marco Rubio:

Let me tell you where [countering disinformation] gets complicated. Where it gets complicated is there is a pre-existing view that people have in American politics. I use this as an example, not because I generally agree with it, but because it's an important example, there are people in the United States who believe that perhaps we shouldn't have gotten involved with Ukraine or shouldn't have gotten involved in the conflict in Europe. Vladimir Putin also happens to believe and hope that that's what we will conclude. And so now there's someone out there saying something that whether you agree with them or not is a legitimate political view that's pre-existing, and now some Russian bot decides to amplify the views of an American citizen who happens to hold those views. And the question becomes, is that misinformation or is that misinformation, is that an influence operation because an existing view is being amplified? Now, it's easy to say, "Well, just take down the amplifiers." But the problem is it stigmatizes the person whose viewed it.

NOTED

- The Russian Bot Army That Conquered Online Poker (Bloomberg)

- How Elon Musk amplified content from a suspected Russian election interference plot (NBC News); see also Gullible Elon Musk Got Fooled Into Sharing Putin-Backed Propaganda Meme (Futurism)

- No charge over spreading of Southport misinformation (BBC)

- AI-Enabled Influence Operations: Threat Analysis of the 2024 UK and European Elections (CETAS)

- How generative AI chatbots responded to questions and fact-checks about the 2024 UK general election (Reuters Institute for the Study of Journalism)

- On YouTube, Major Brands’ Ads Appear Alongside Racist Falsehoods About Haitian Immigrants (NYT)

- 3 suspects apprehended for selling celebrity deepfake porn: police (The Korea Herald)

- Google's AI Mushrooms Could Have 'Devastating Consequences' (404 Media)

- Fake UK news sites ‘spreading false stories’ about western firms in Ukraine (The Guardian)

- The 1912 War on Fake Photos (Pessimists Archive)

- Microsoft claims its new tool can correct AI hallucinations, but experts advise caution (TechCrunch)
