🤥 Faked Up #18

Americans turn to Search for debate fact-checking, Meta buries its AI labels, and Australia tries to regulate misinformation (again).

This newsletter is a ~6 minute read and includes 60 links.

THIS WEEK IN FAKES

The US Secretary of State accused Rossiya Segodnya and TV-Novosti of being a “de facto arm of Russia’s intelligence apparatus.” Meta decided this was a good time to deplatform related accounts (most have been off YouTube for years). Congress is unlikely to pass a deepfake election law before November, but California did. Google struggles with AI paintings (see also: Hopper and Vermeer). OpenAI claims its new model “hallucinates less.” A new app lets you build an entirely AI-generated following (at least it doesn’t do too poorly with conspiracy theories).

TOP STORIES

OZ TRIES AGAIN

The Australian government introduced three bills addressing harmful online behavior, including the Communications Legislation Amendment (Combatting Misinformation and Disinformation) Bill 2024 targeting digital deception on online platforms. This is the Labor government’s second shot at it and an outgrowth of a voluntary industry code spearheaded by the former Conservative government. Elon Musk called Australian legislators fascists (🤷‍♂️), but even someone I respect like Mike Masnick expressed skepticism, while the advocacy group Reset.Tech called it “worryingly poor.”

As I read it, the proposal struck me as relatively middle-of-the-road? The draft bill defines misinformation as false content that can create physical harm or serious harm to the electoral process, public health, protected groups and critical infrastructure.

It would boost the powers of ACMA so that the media regulator can require digital communications platforms1 to join or create a binding industry code on disinformation. Platforms would also be expected to keep records of disinformation countermeasures and publish risk assessments. And in case they fail to comply, there’s a list of remedial actions that range from formal warnings to financial penalties worth up to 5% of global turnover.

THEY’RE FACT-CHECKING THE PETS

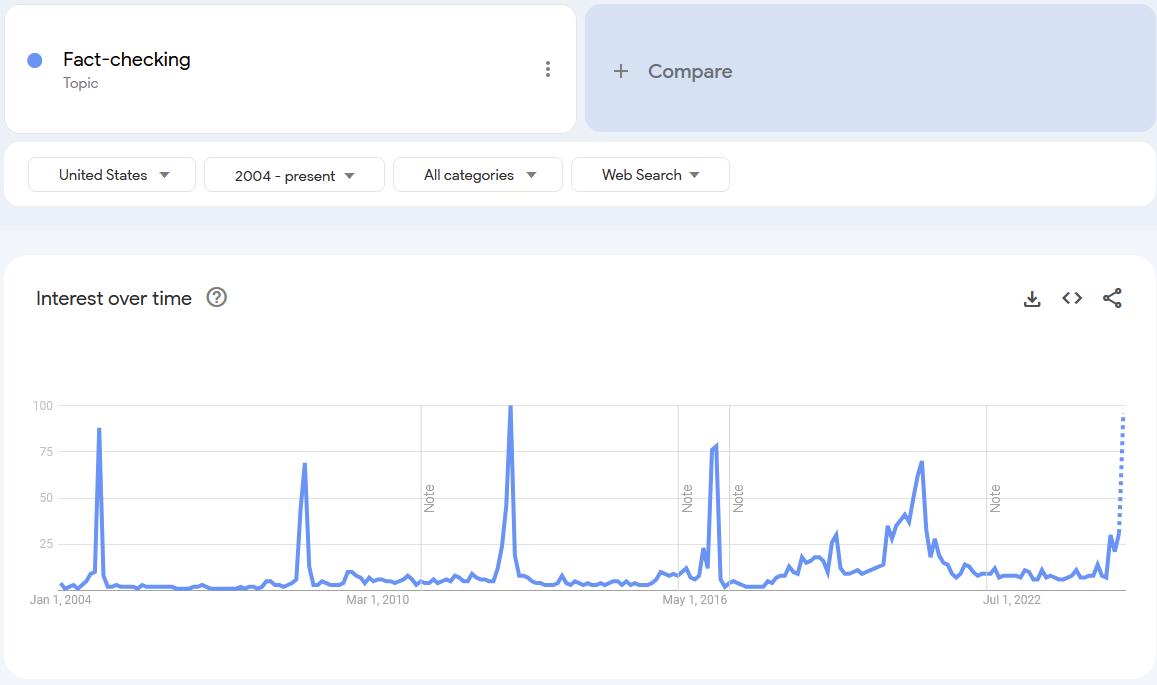

Last week, Google Search queries related to the term “fact-checking” were at a 10-year high both globally and in the US. In line with historical patterns, this peak was driven by an American political debate2.

Looking at the related topics adds some color to what people were eager to verify through Search. Besides the names of the two ABC moderators,3 Americans wanted to verify the baseless claims that Haitian immigrants in Ohio were eating cats, Harris’ claim that the Wharton School said Trump’s budget plan would “explode the deficit” (here’s what they said) and former Virginia Governor Ralph Northam’s oft-misquoted views on late-term abortions.

Even some conservative commentators were upset that pet-eating made it from the online fringes to the debate stage. Neither of the Springfield-based individuals behind the early Facebook chain that spread this falsehood could substantiate their claim.

THE CHAT-CHECKER

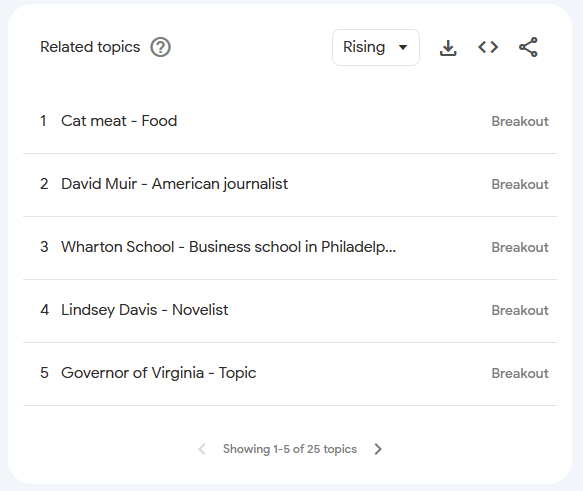

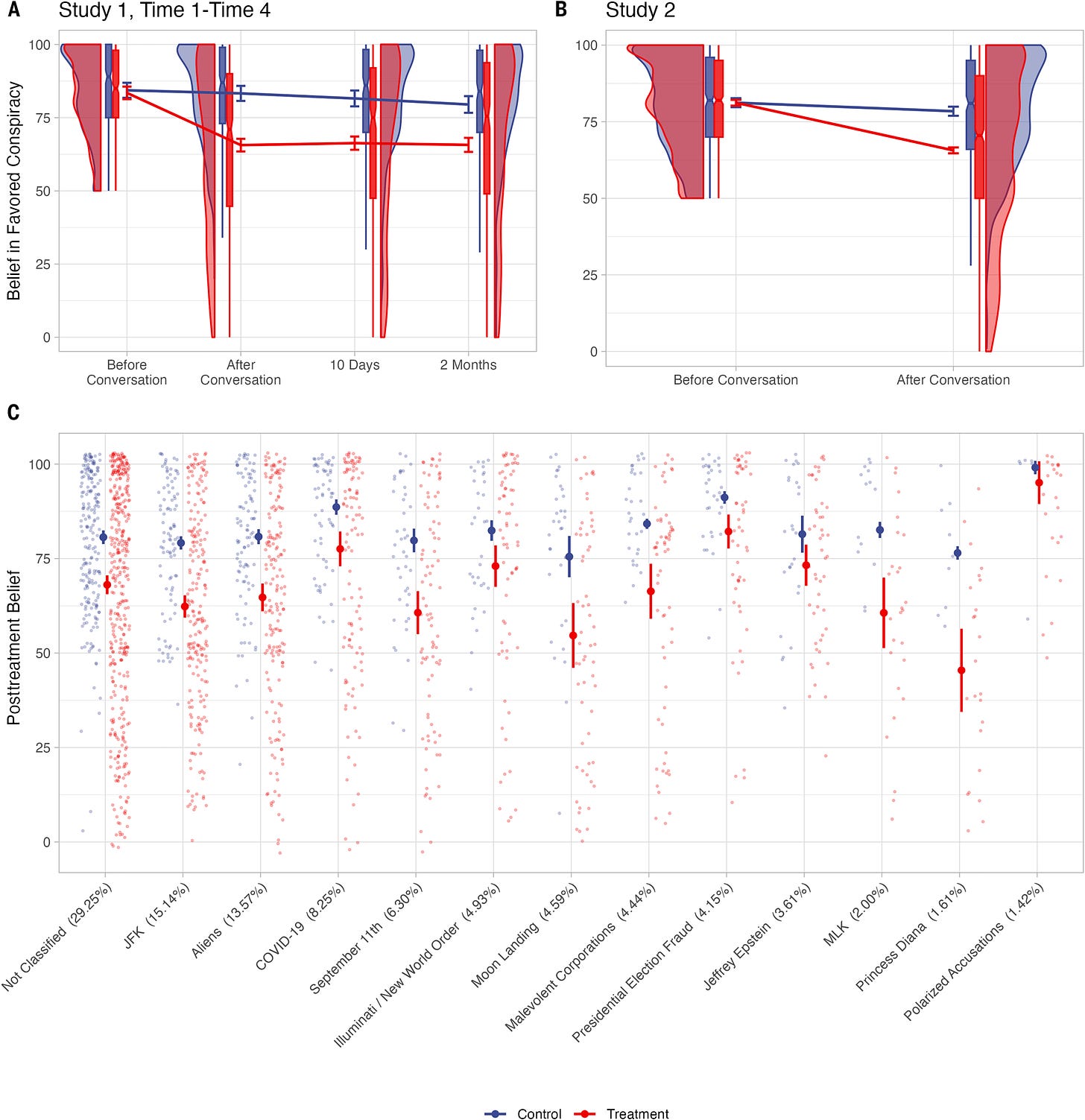

New on Science4: A three-round conversation with ChatGPT reduced belief in a conspiracy theory of choice by an average of 20%. That’s…pretty good! The effect was observed across a wide range of conspiracy theories and lasted even two months after the intervention.

You can get a good sense of the study design in the graphic above. I am inclined to agree with the authors’ assessment that the reason the intervention was so successful is that the LLM could tailor its responses to the unique reasons each participant had to believe in the conspiracy theory. You can also play around with their AI fact-checker at debunkbot.com.

WHITHER, FACT CHECK LABELS?

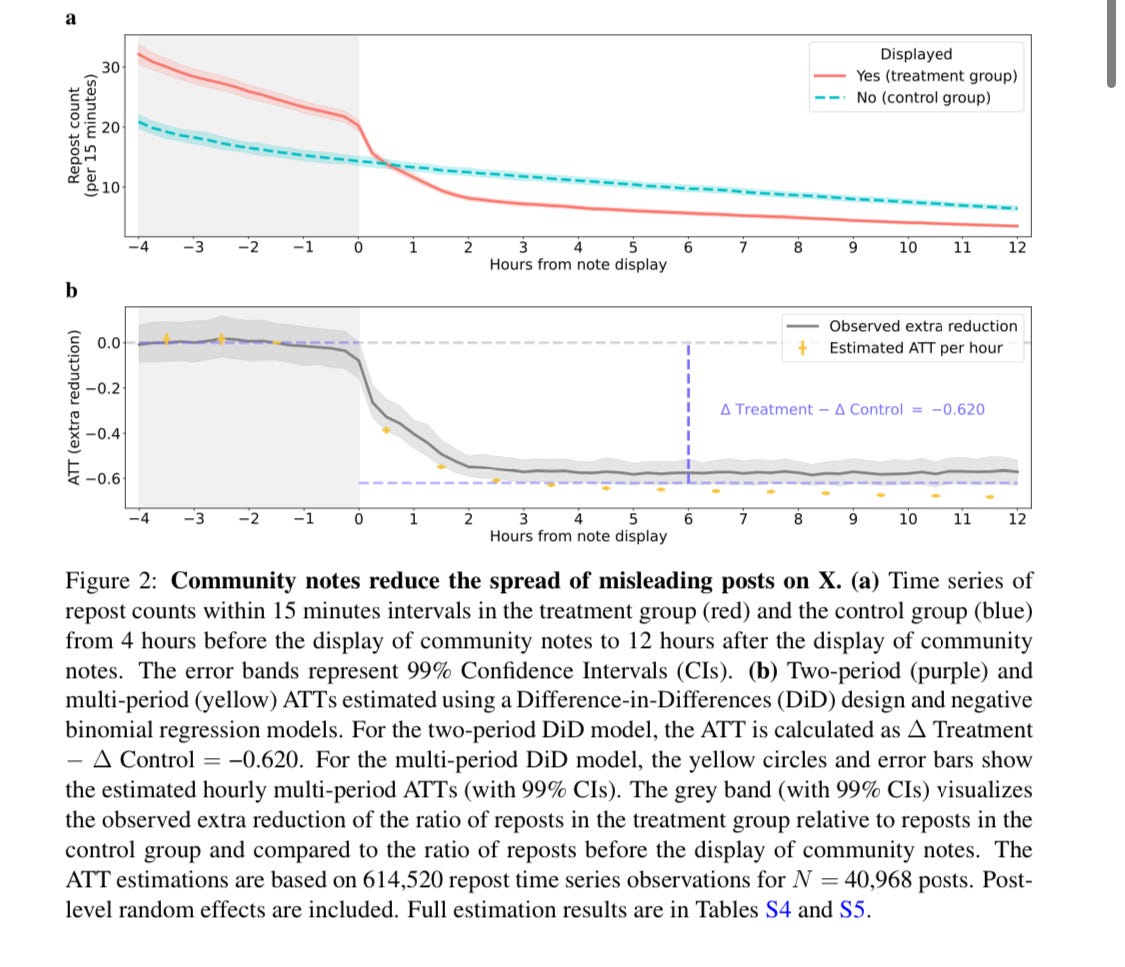

A preprint by researchers in Luxembourg, France and Germany claims community notes on X reduced the spread of tweets they were attached to by up to 62 percent and doubled their chance of being deleted. The study also found that the labels typically came too late to affect the overall virality of the post. (This is a bit of a chicken-and-egg problem where a viral fake is more likely to be seen by people who can debunk it.)

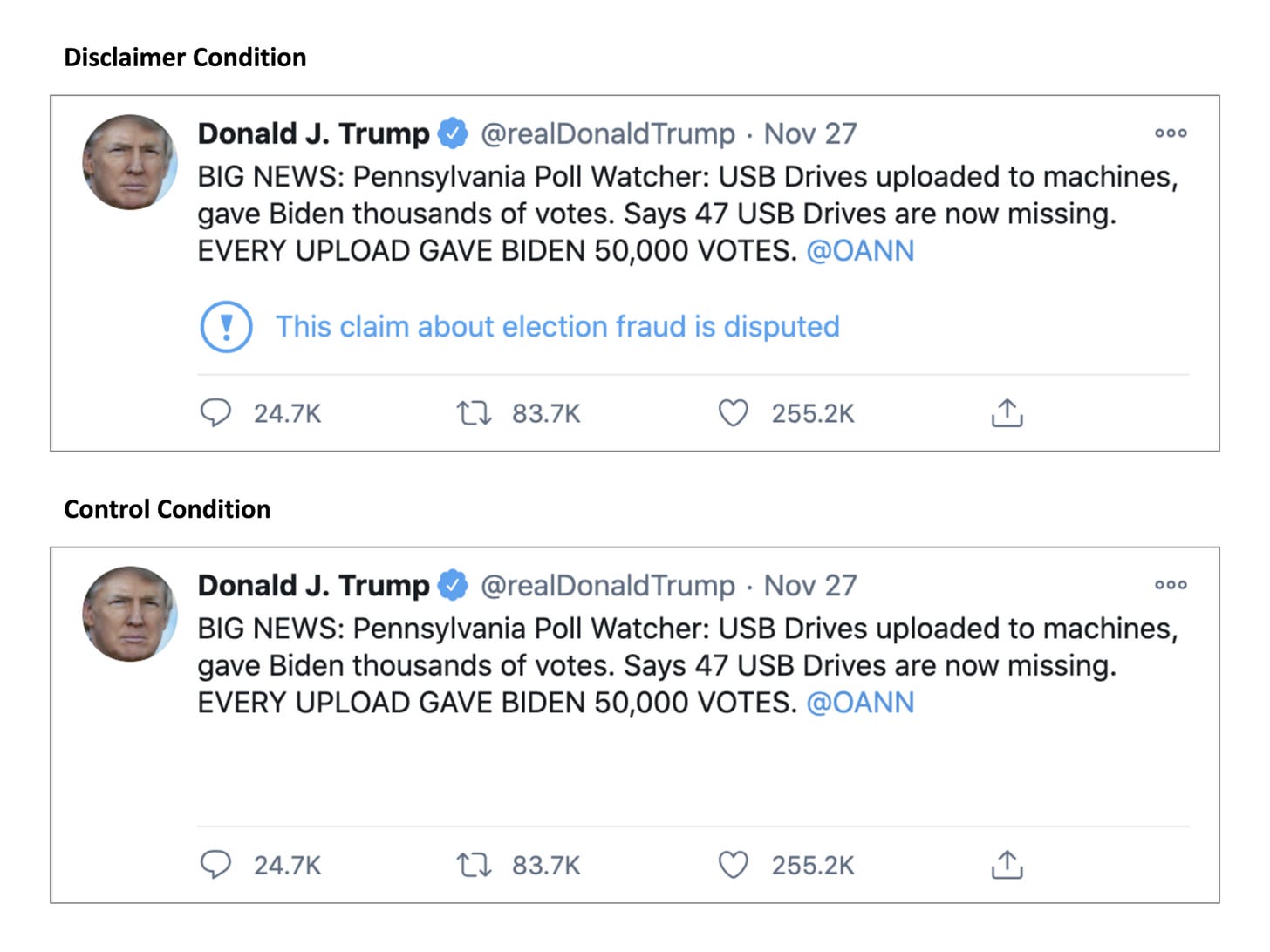

This peer-reviewed paper in Misinformation Review is less encouraging, finding that the “disputed” labels that (then) Twitter was appending to false claims of election fraud increased belief in those claims among Trump supporters. It’s worth noting that this was a survey, rather than an analysis of platform data, and that no information beyond the label was provided.

CONCEALED TRANSPARENCY

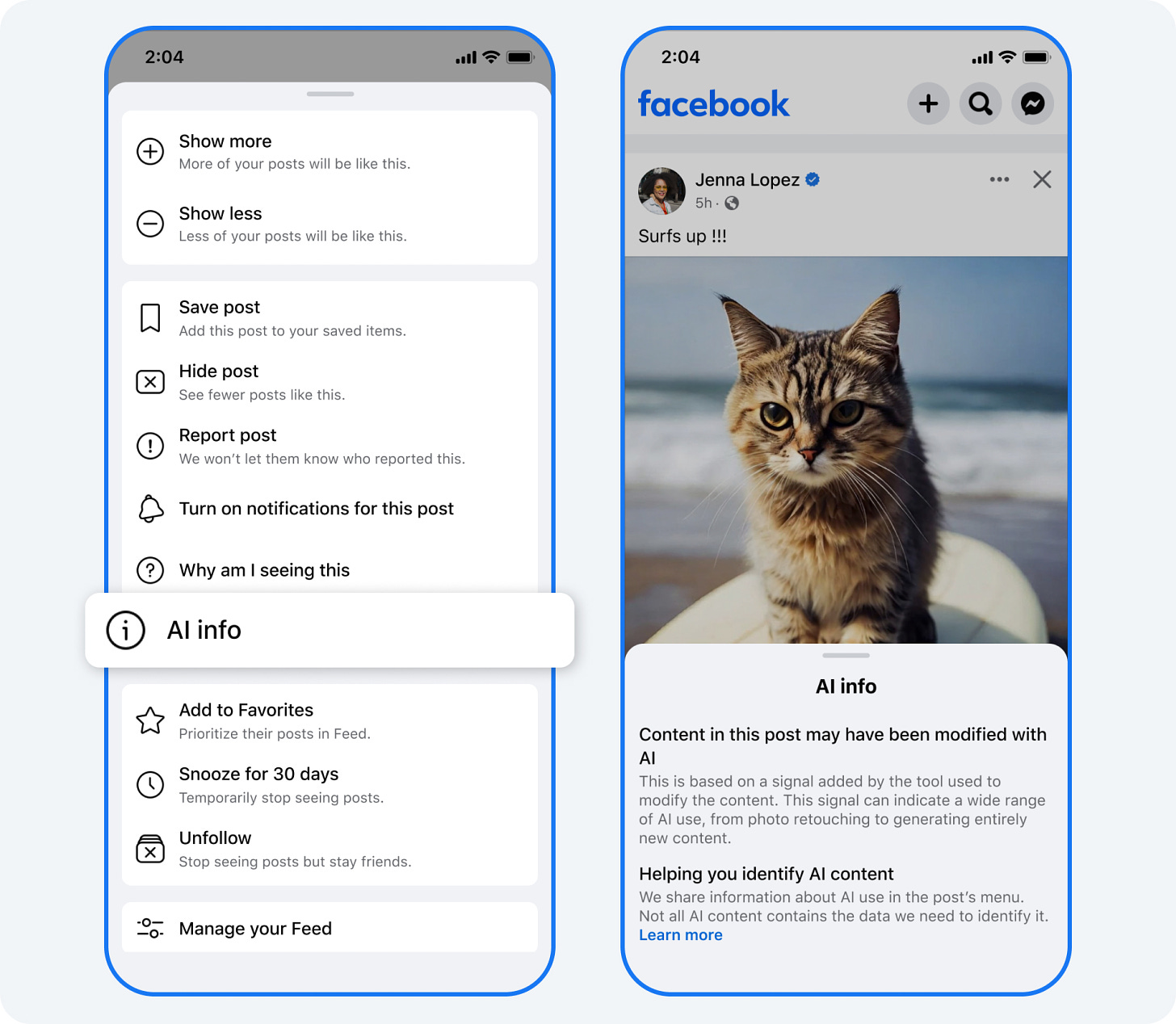

Meta announced last week that it would be relocating the label for AI-modified images it launched in April. Instead of appearing above the image, “AI info” is now relegated to the list of options you get when clicking the menu button.

Incidentally, Google announced on Tuesday that its own labeling efforts would be similarly buried behind a menu button in Search’s “About this Image” feature. Google did not share mock-ups of this implementation and was vague about its launch date.

I am divided about this setup. As I’ve written in the past, Meta’s labels are imprecise, failing to differentiate between minor edits and entirely AI-generated content. They are also infrequent, failing to capture large swaths of entirely AI-generated accounts created with tools that don’t adhere to industry standards.

Reducing the labels’ prominence may be a good interim measure to give engineers time to work on their precision and recall while still providing access to journalists and researchers who understand their limitations.

The challenge is that this is likely to become a permanent approach that ends up doing very little to help ordinary users, who do not click on tiny three-dot buttons, distinguish real photos from fake ones.

DEPT. OF DID WE REALLY NEED THIS?

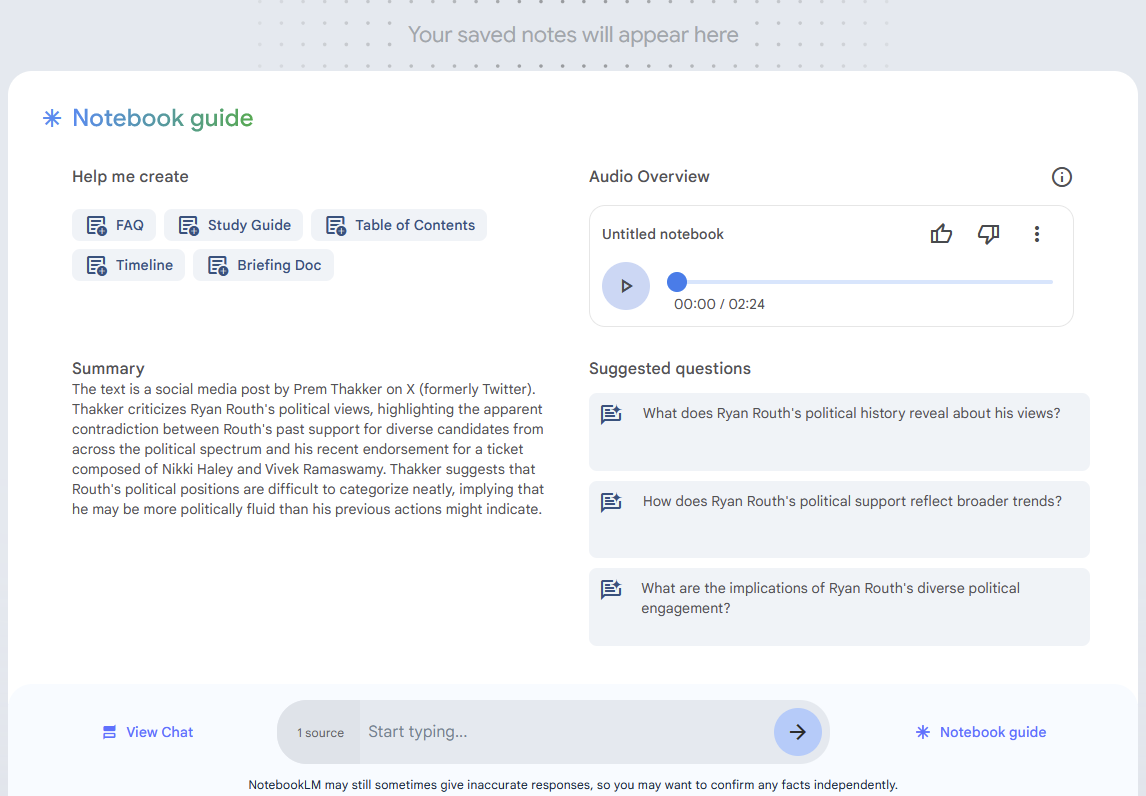

Google has not one but two AI products that transform a piece of writing into a podcast discussion. Illuminate is restricted to arXiv articles, presumably to limit abuse, but NotebookLM lets you use pretty much any text.

I was curious to see how NotebookLM coped with polarizing or false content, so I had Google generate a “deep dive conversation” for four different URLs.

First I tried with JD Vance’s Sep 9 tweet about pet-eating immigrants. The AI-generated hosts engage in a pretty believable podcast-style repartee; they also avoid endorsing the conspiracy theory. Instead, the 4-minute clip turns into a pretty safe exchange on media literacy.

Notebook does even better with the transcript of a TikTok video I flagged a few weeks ago about tell-tale signs of reptilians. It calls it out as a conspiracy theory outright and spends most of the time talking about why people believe in misinformation.

The tool does less well on this other transcript of a TikTok video claiming pyramids were built by giants.

With some credulity, the 4-minute podcast walks through the alleged evidence in the TikTok and claims it “makes you wonder if there wasn’t some grain of truth” to stories about giants. The hosts say “what seems impossible today could be a textbook chapter tomorrow,” while acknowledging we’d need archeologists to uncover a “femur the size of a telephone pole” to truly believe in giants.

Finally, I tried with this tweet on attempted Donald Trump assassin Ryan Routh. The two hosts of the AI-generated conversation argued that he’s an example of an individual who had a “more nuanced set of priorities” rather than “blind party loyalty.” That’s certainly a take!

All in all, I come out of this both encouraged by Google’s protective measures on NotebookLM and wondering…do we really need any of this?

DEPT. OF AWFUL HUMANS

Infuriating stuff uncovered in a court record by 404 Media and Court Watch:

A man in Massachusetts was arrested Wednesday after allegedly stalking, doxing, and harassing a female professor for seven years. Among a series of crimes, the man is accused of using AI to create fake nudes of the woman, and of making a chatbot in her likeness that gave out her name, address, and personal details on a website for AI-powered sex bots.

NOTED

- Can Wikipedia Stay Neutral in the 2024 Presidential Election? (The Wikipedian)

- Patriot Front fraud and fakery (Conspirador Norteño)

- Meet Margarita Simonyan, queen of Russia’s covert information wars (WaPo)

- An AI Bot Named James Has Taken My Old Job (Wired)

- Why is the Italian prime minister suing over deepfake porn? (Channel 4 News)

- Most Americans don’t trust AI-powered election information: AP-NORC/USAFacts survey (AP News)

- How TikTokers think about misinformation (WaPo) with Pulse on TikTok (Weber Shandwick)

- Social workers in S’pore raise concerns over deepfake porn (The Straits Times)

- Is anyone out there? (Prospect)

- Poll: Vast Majority of US Voters Agree Individuals and Platforms Should be Held Accountable for Sexually Explicit Digital Forgeries (Tech Policy Press)

- Synthetic Human Memories: AI-Edited Images and Videos Can Implant False Memories and Distort Recollection (arXiv)

- How X accounts are fueling anti-India disinformation following Bangladesh protests (Logically Facts)

- Russians made video falsely accusing Harris of hit-and-run, Microsoft says (WaPo)

- Is That AI? Or Does It Just Suck? (New York Magazine)

1 Defined as “content aggregation services, internet search engine services, connective media services, media sharing services, or a digital service determined by the Minister in an instrument.”

2 I’m going to speculate that the 2004 peak was associated with the vice presidential debate in which Dick Cheney invited viewers to check out Factcheck.org for a fact check on his opponent’s attacks on Halliburton, rather than a Bush v Kerry debate. As true factcheck-ologists will know, Cheney got the URL for the site wrong.

3 It appears that most people misspelled Linsey Davis as “Lindsey” Davis, who apparently writes historical whodunnits.

4 The authors are *very* good at circulating their preprints, so you may have seen a version of this paper in April.
