🤥 Faked Up #16

TikTok's AI is summarizing conspiracy theories, Meta ran 222 ads for deepfake nudes, and Telegram apologizes to South Korea

This newsletter is a ~6-minute read and includes 45 links. I ❤️ to hear from readers: please leave comments or reach out via email.

THIS WEEK IN FAKES

Brazil’s Supreme Court unanimously upheld the ban on X (how we got here). Germany is exploring image authentication obligations for social networks. On election topics, all of Google’s generative AI tools will now redirect users to Search, while X’s Grok AI will now link out to Vote.gov. Awful people are using AI to make clickbait deepfakes about the rape and murder of a doctor in Kolkata.

TOP STORIES

TIKTOK’S CONSPIRACY SUMMARIES

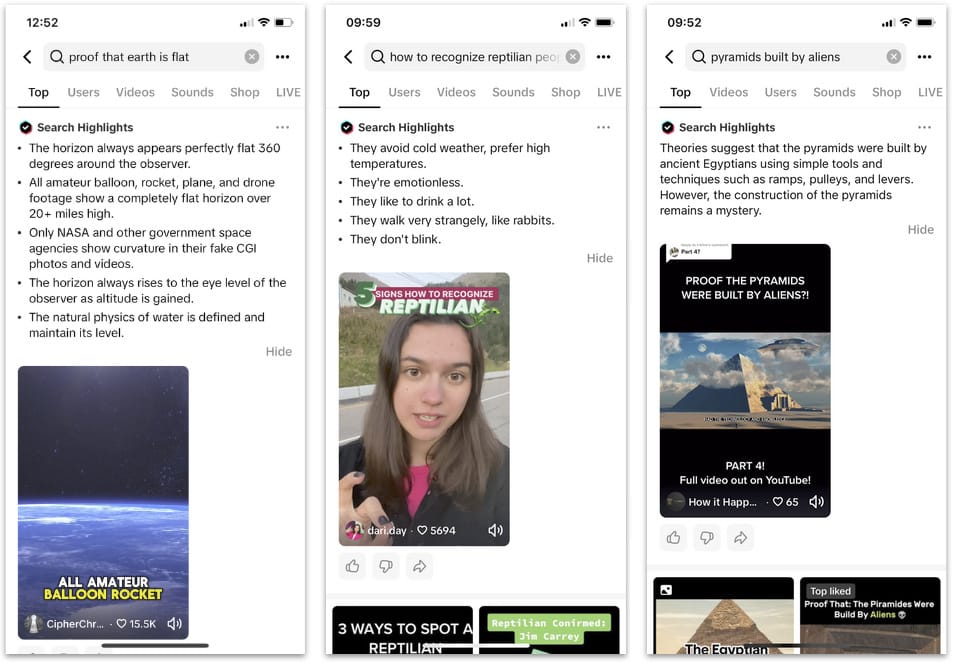

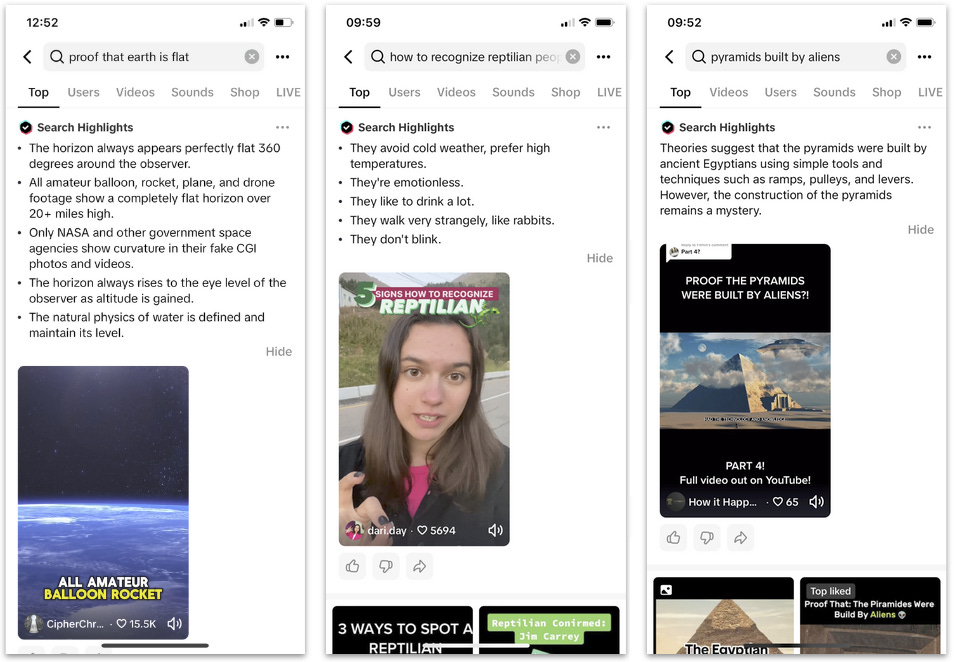

Earlier this year, TikTok launched “Search Highlights,” an AI-generated summary of the result(s) for a specific query.

In a test I conducted on Monday, the feature was very helpful at summarizing conspiracy theories.

My search for [proof that earth is flat] returned a summary that accused NASA of putting out “fake CGI photos and videos” showing curvature in the Earth’s horizon. A search for [how to recognize reptilian people] helpfully informed me that “they walk very strangely” and “don’t blink.” And the Search Highlights for [pyramids built by aliens] informed me that “the construction of the pyramids remains a mystery.”

Mind you, TikTok itself suggested these search queries when I typed relatively broad terms like [flat earth], [pyramids] and [reptilians]. I’m not going to call this a rabbit hole, but it’s still a pretty efficient conspiracy-theory-serving service.

SOUTH KOREA’S MAP OF SHAME

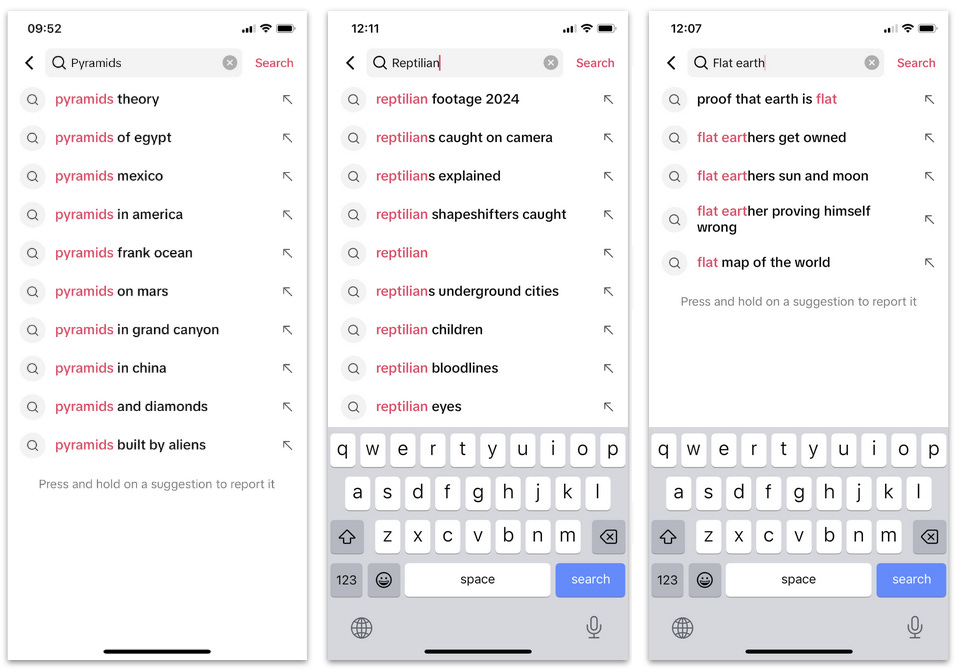

A viral map created by a high school freshman purports to show 500 South Korean educational facilities where students were targeted by deepfake nudes.

While the map’s creator did not independently verify the reports of deepfake abuse, other sources provide a sense of the scale of the issue in the country.

According to South Korean police, there were 527 reports tied to illegal deepfake porn between 2021 and 2023.¹ A survey organized by the Korean Teachers and Education Workers Union separately tallied more than 500 victims of “illegal synthetic material sexual crimes.” And an ongoing special operation to crack down on deepfake nudes led to 7 arrests this week. (Thanks a million to journalist Jikyung Kim for helping me find some of these stats.)

Last week, I pointed to a Korean Telegram channel with more than 200,000 subscribers that was sharing deepfake nudes. Telegram is now being investigated over whether it has been complicit in the distribution of this content (the platform appears to be in an unusually collaborative mood).

Also this week, a major K-pop agency said it was “collecting all relevant evidence to pursue the strongest legal action with a leading law firm, without leniency” against deepfake porn distributors targeting its artists.

This would be a welcome and overdue move that could materially affect websites like deepkpop[.]com, which exclusively posts deepfakes of K-pop stars and drew 323,000 visitors in July.²

DeepKPop includes this infuriating disclaimer on its website: “Any k-pop idol has not been harmed by these deepfake videos. On the contrary we respect and love our idols <3 And just wanna be a little bit closer to them.”

AI UNDRESSERS EVERYWHERE

A few weeks ago, I found that Google Search was running 15 ads for AI undresser apps.

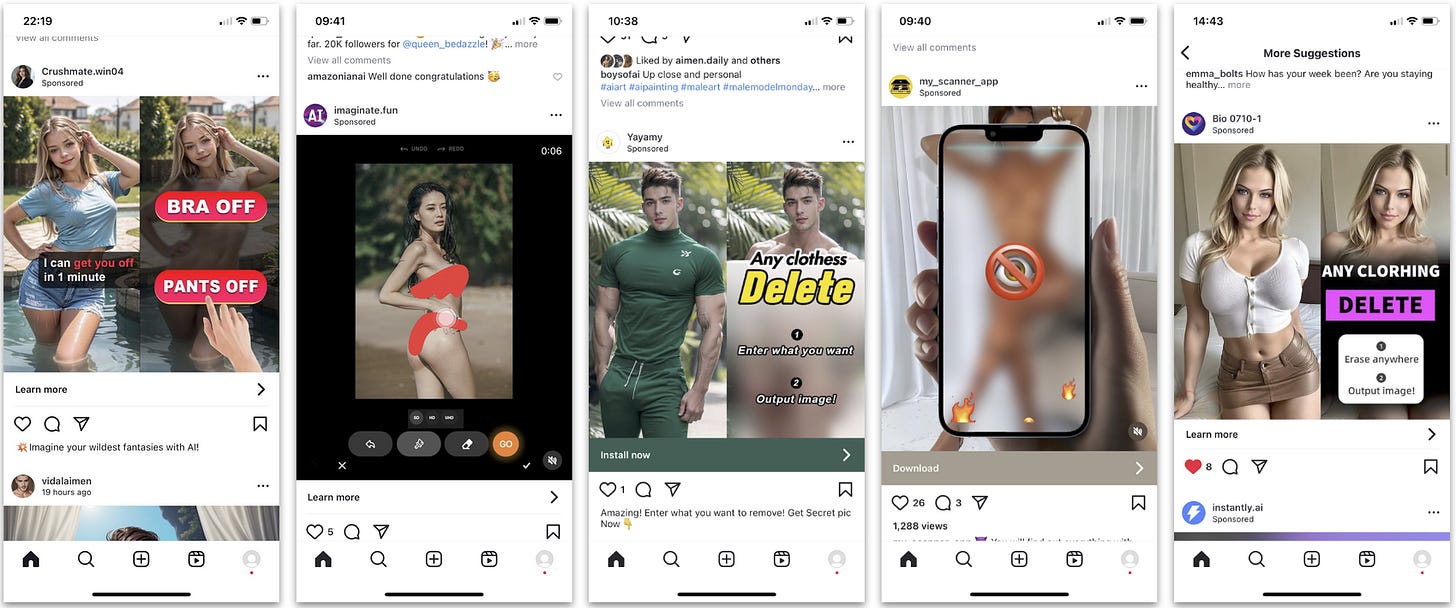

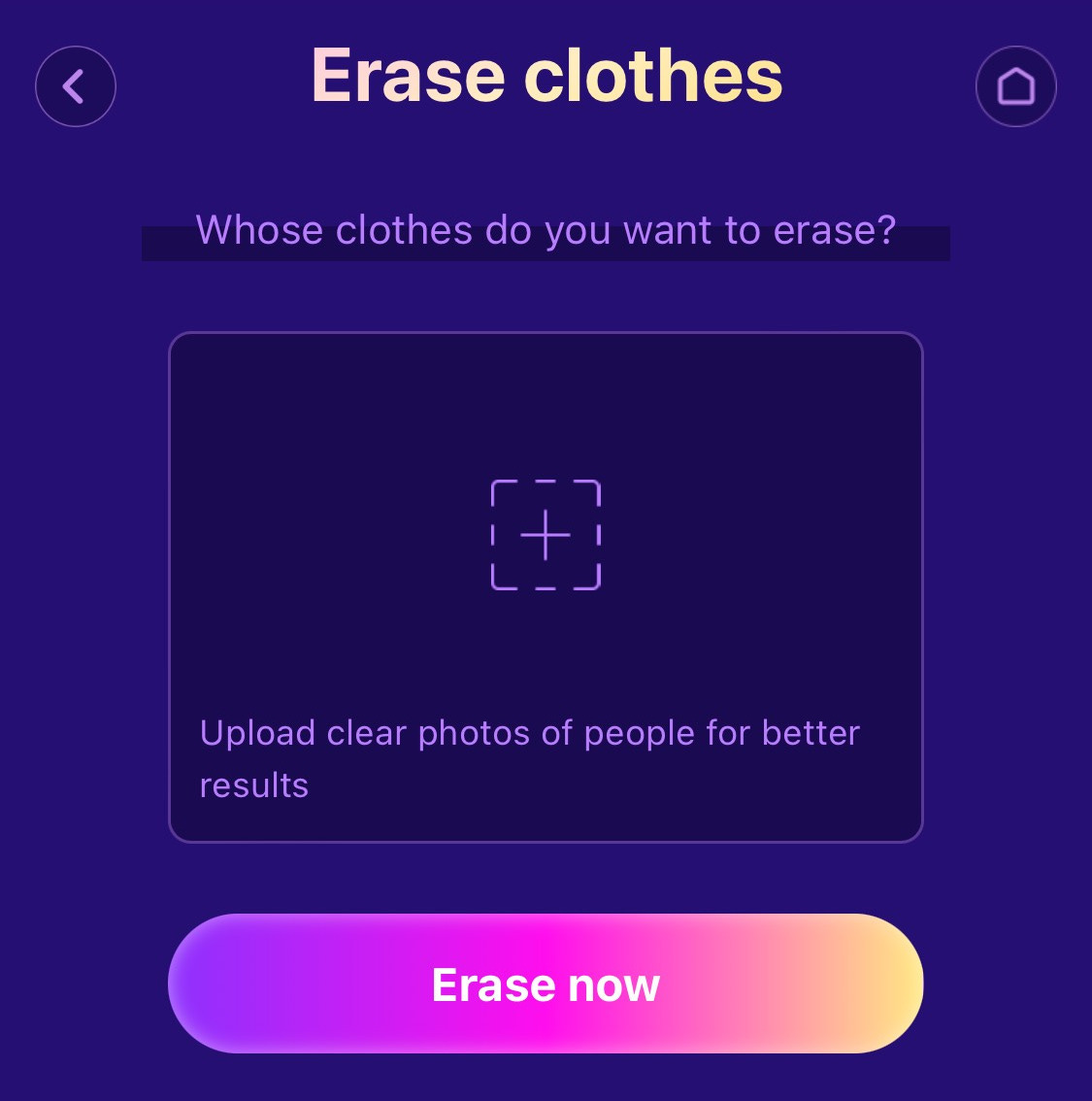

This week, I found that Meta ran at least 222 ads for five different tools that promise to remove the clothes from any uploaded image.³

Each of these tools also has an app either on the App Store or the Play Store (or both). Here’s the full list:

One of the services, Crushmate, is clearly trying to evade detection by running ads from eight different Facebook pages that point to different URLs. All of its websites, though, do the same thing:

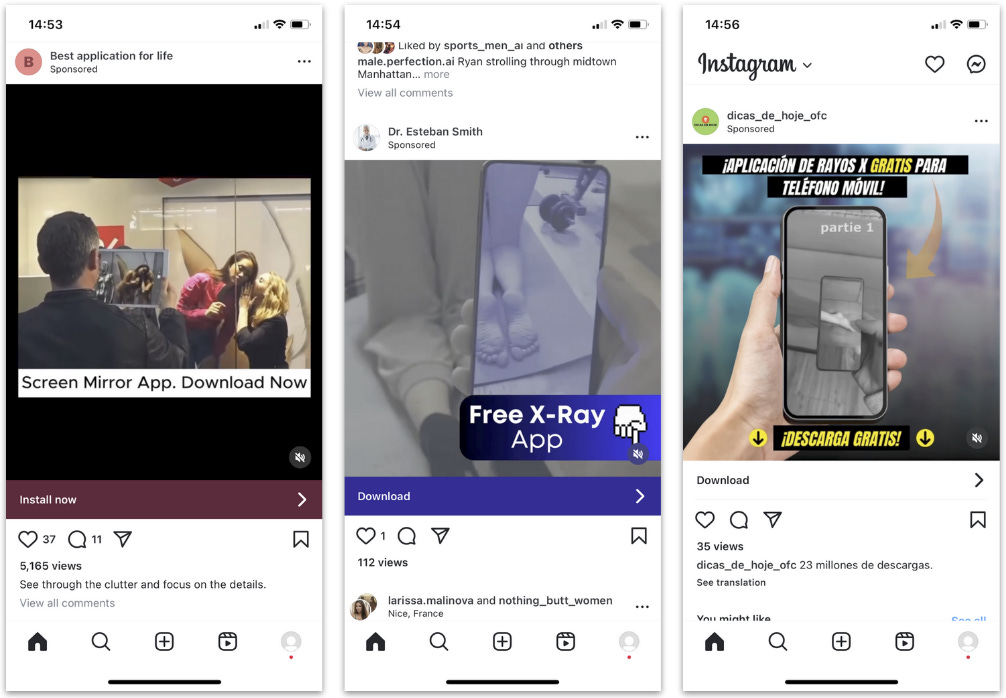

Incidentally, I found that Instagram was also running several weird ads for what looked like undressers that work as augmented reality filters (see below). I suspect these don’t actually work as advertised, but I wouldn’t be surprised if they eventually become good enough to introduce a whole new horrible trend of sexual harassment.

I emailed Meta, Apple and Google on Tuesday for comment on my findings.

Meta responded to my email, investigated the leads, and terminated most of the ads in question under its sexual exploitation policy. A spokesperson told me that “we have removed these ads and are taking action against the accounts behind them.”

I have not heard back from the other companies yet.

If someone from Apple is reading, please also note that the App Store is still hosting another face-swapping app that explicitly targets known individuals (see FU#13). As Emanuel Maiberg wrote in his perceptive piece for 404 Media: “Apple continues to struggle with the ‘dual use’ nature of face swapping apps, which appear harmless on its App Store, but advertise their ability to generate nonconsensual AI-generated intimate images (NCII) on platforms Apple doesn’t control.”

I used to write content policy, so I get that off-platform evidence can sometimes be tricky to use for enforcement. But I also think that if an app is actively marketing itself as a tool for nonconsensual nudes, platforms should take that at face value.

THE CON-TRAILS

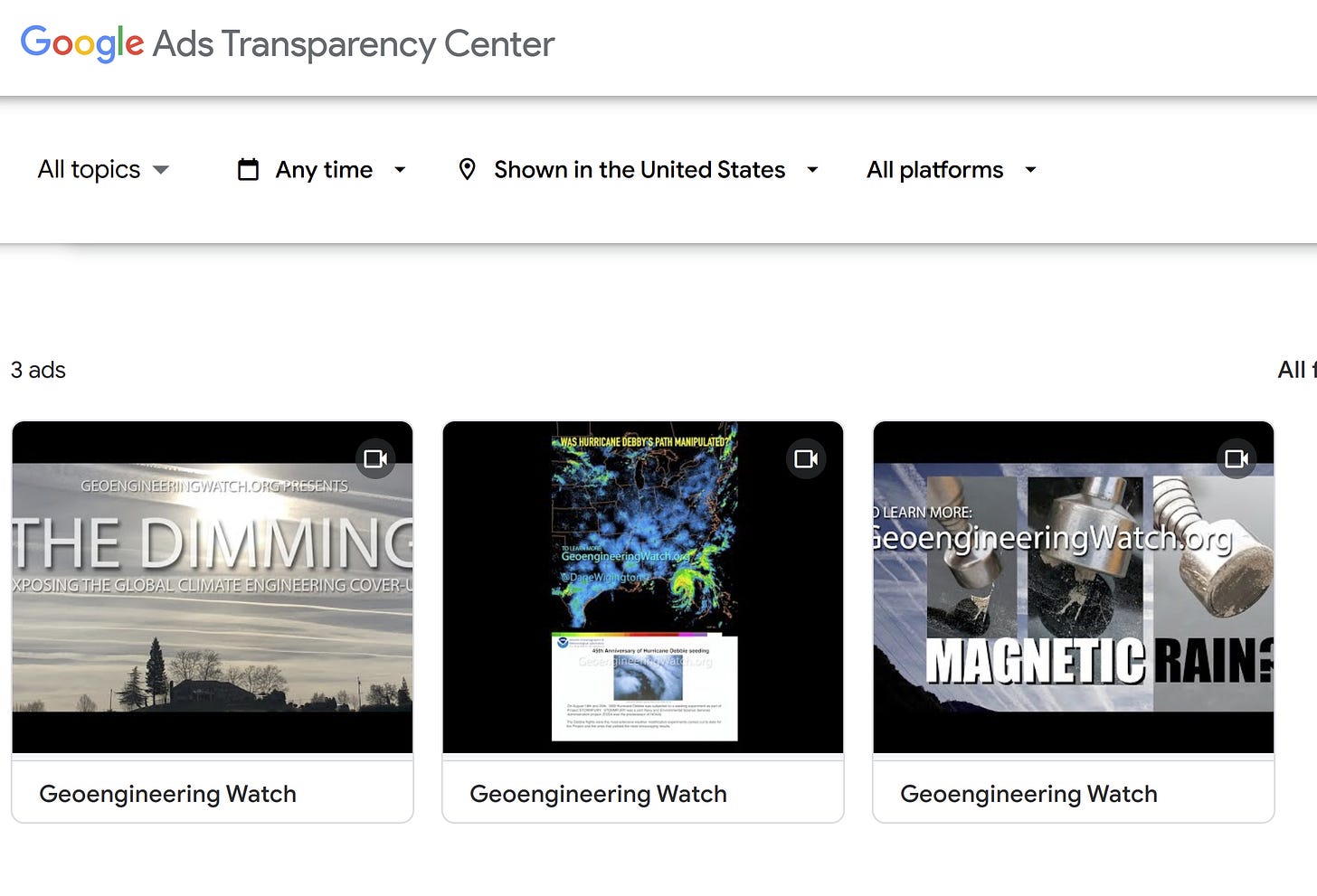

Speaking of weird Instagram ads, I was recently served this sponsored post from Dane Wigington, who runs a website that claims (among other things) that hurricanes are human-generated.

Wigington also recently paid Google to run at least 9 ads (6 text, 3 video) for his documentary “The Dimming,” which claims that contrails are actually geoengineering exercises to manage solar radiation. The three-year-old video has 25 million views on YouTube; in a review for ClimateFeedback, one expert called many of its central claims “pure fantasy.”⁴

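Google’s policy on climate misinformation, which targets content that contradicts the scientific consensus on the existence and causes of climate change, likely doesn’t apply to these ads.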

PROBLEMATIC LOBBYMATIC

Politico reports that LobbyMatic, a “startup pitched as a service to integrate AI into lobbying[,] is covertly run by a pair of well-known, far-right conspiracy theorists and convicted felons who are using pseudonyms.” 404 Media adds that the startup had been posting on Medium under the fake name of Pat Smith and “for months, advertised in screenshots that major companies were using its product; many of these companies [say] that they have never been clients of LobbyMatic.”

IT’S AU REVOIR FOR FRANCE-SOIR

I missed this earlier in the summer: France-Soir, the online publication that uses the name of a once-storied newspaper, lost its accreditation as an “online press service.” French media authority CPPAP had initially made this call in December 2022 based on the website’s dissemination of COVID-19 misinformation. France-Soir appealed the decision, which has now been definitively confirmed, meaning the website can no longer benefit from some financial incentives aimed at news publications.

NOTED

- Chatbots Are Primed to Warp Reality (The Atlantic)

- Harmful 'Nudify' Websites Used Google, Apple, and Discord Sign-On Systems (Wired)

- Authorities warn AI, deepfake technology in romance scams costing WA victims thousands (ABC News)

- 42% of Russia-Ukraine War Misinformation Originated on Telegram (NewsGuard)

- Is digital technology really swaying voters and undermining democracy? (New Scientist), along with The truth about digital propaganda (Reasonable People) and Misinformed about misinformation (FT)

- Factuality challenges in the era of large language models and opportunities for fact-checking (Nature Machine Intelligence)

- Teaching critical thinking in digital spaces (American Psychological Association)

- Indian Sites Spreading Harmful Disinformation Are Earning Money Through Google’s Ads (Bellingcat)

- ‘My identity is stolen’: Photos of European influencers used to push pro-Trump propaganda on fake X accounts (CNN)

- The #Americans: Chinese State-Linked Influence Operation Spamouflage Masquerades as U.S. Voters to Push Divisive Online Narratives Ahead of 2024 Election (Graphika)

- The false information circulating about the 2024 state elections in Thuringia and Saxony (Correctiv, in German)

FAKED MAP

This 1750s map by French cartographer Guillaume Delisle cracks me up because it’s really rather precise overall and then just plonks an imaginary inland sea over half of Western Canada. I love that after submerging most of British Columbia and Alberta, Delisle froze on whether to call the body of water the Western “Bay” or “Sea.”

¹ The figure for teenagers (342) is twice as high as the total number of victims I’d tracked to date from reporting, and it is a reminder that we are likely missing hundreds of cases of AI-driven victimization of underage girls globally.

² Data from SimilarWeb. I also found it noteworthy that 81% of the site’s traffic originated from Russia, with most of the rest from South Korea.

³ I’m sick to my stomach of writing about nonconsensual synthetic imagery for this newsletter. I’ve covered the topic in 15 out of 16 newsletters. If I didn’t think it was one of the most harmful vectors of digital abuse, and if everyone were doing enough to fight it, I’d give it a break. But here we are.

⁴ Incidentally, this fact check led to a lawsuit, which Wigington lost.
