🤥 Faked Up #11

Generative AI is brat | Spying the fact-checkers | DEFIANCE clears the Senate

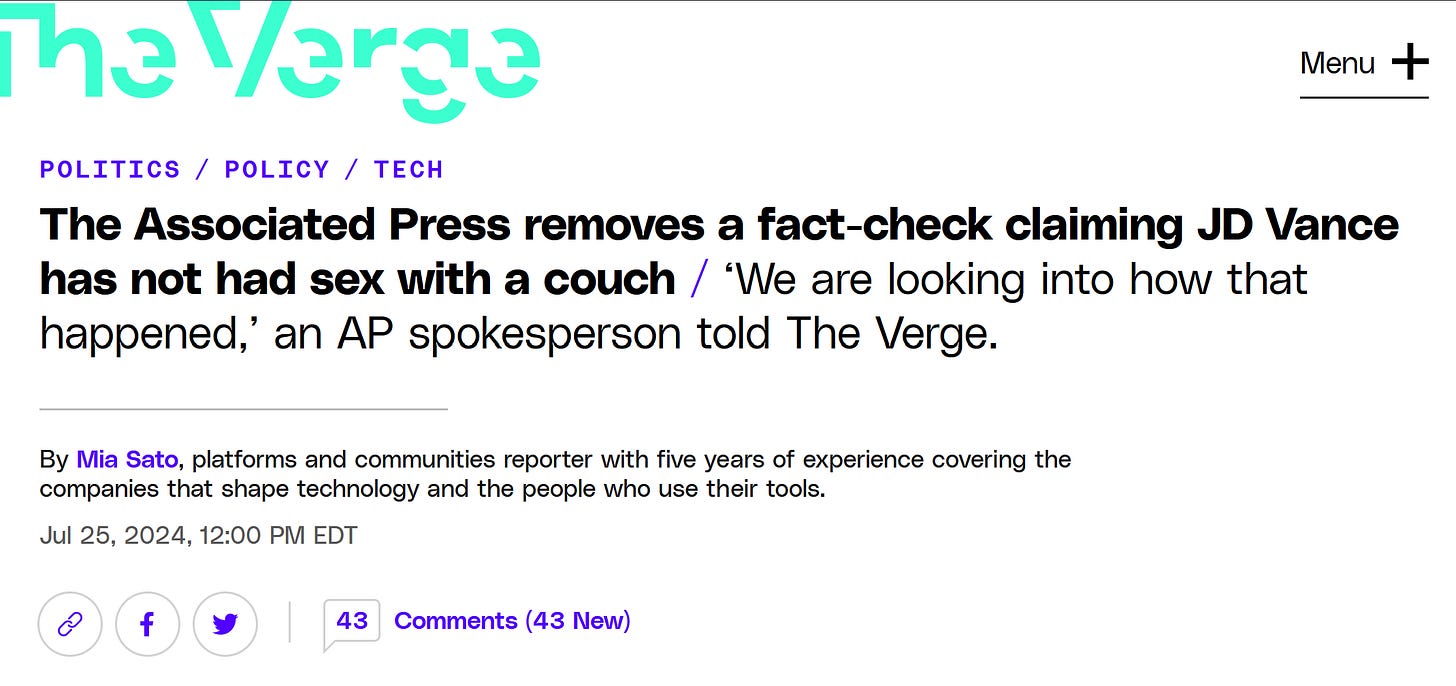

Faked Up #11 is brought to you by dumb generative AI and pristine couches. The newsletter is an ~8-minute read and contains 70 links.

Top Stories

GENERATIVE AI IS BRAT

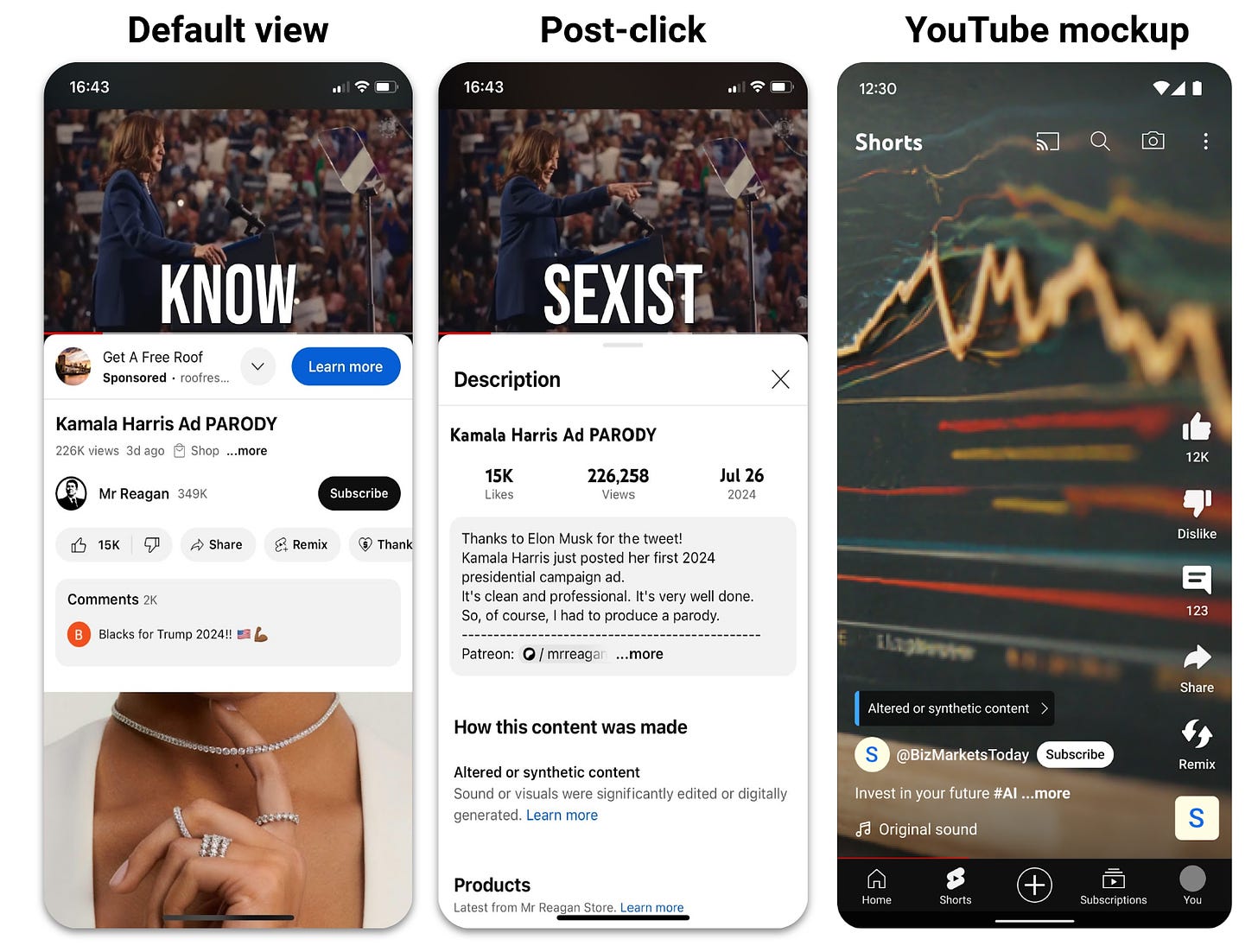

Ugh. I guess I’m going to have to write about the Musk tweet. Quick recap for those who missed the action: Conservative creator Christopher Kohls (aka “Mr Reagan”) made a spoof Kamala Harris campaign ad featuring an AI voice clone of the US Vice President. Kohls added “parody” to both his X and YouTube posts, but Musk’s tweet contained no such disclosure.

Look, I don’t think anyone actually believed Harris called Biden the “ultimate Deep State puppet.” Not anyone who would be affected by a warning label, at least.

This surface-level deception is more meme than misinformation. It also appears to be the current norm for AI-powered political discourse globally. In elections from India to South Africa, generative AI is being used primarily to entertain hyperpartisan audiences rather than deceive undecided voters.

The Harris video suggests that much AI-generated election content in the US might end up being dumb rather than dangerous. It also exposes the brittleness of the AI detection and labeling infrastructure.

AI audio generators have promised to ban voice clones of elected officials; once again they have failed to do so. Tech platforms have promised to prominently label AI-generated content; once again they have failed to do so.

Because community notes on Musk’s tweet are a partisan food fight, no label has been added to his video. But set aside the social network whose CEO spent the past few days posting antivax memes and racist claims about importing voters.

Take YouTube instead. Google’s video platform promised in March that it would add a “prominent label” on videos that use generative AI and discuss “sensitive topics” like elections. In the mockup, the label appears as an overlay on the full-screen video.

Yet on the Harris deepfake, the disclosure is visible only if you click “more” in the video description. This is on a video whose creator was open about his use of synthetic audio and (I’m assuming) had voluntarily added this information to his video upon uploading it.

Again, I really don’t think American democracy was harmed by this video. But it is a reminder that (a) generative AI is dumb, (b) voice clones are going to be commonplace, and (c) labels for AI content are not ready for prime time.

PS. Think you can spot an audio deepfake? Try this quiz.¹

IT CAN HAPPEN TO ANYONE

Cybersecurity firm KnowBe4 disclosed that it mistakenly hired a North Korean IT worker who was masquerading as a real US-based engineer. In their words:

KnowBe4 needed a software engineer for our internal IT AI team. We posted the job, received resumes, conducted interviews, performed background checks, verified references, and hired the person. We sent them their Mac workstation, and the moment it was received, it immediately started to load malware.

The scammer used AI to turn a stock image into a unique headshot (see below). KnowBe4 ran four video interviews “confirming the individual matched the photo provided on their application,” so I’m assuming this was a face swapping tool rather than a generator.

BAD AT BREAKING

The University of Washington’s Center for an Informed Public framed the misinformation that emerged after the Trump shooting as an element of “collective sensemaking.” During moments of crisis, it is natural for rumors to circulate: most news is unverified and folks are trying to understand, as a group, what has happened.

And good luck turning to AI for answers. Both NewsGuard and the Washington Post found that chatbots frequently refused to engage with news of the shooting, called it misinformation, or failed to debunk emerging falsehoods.

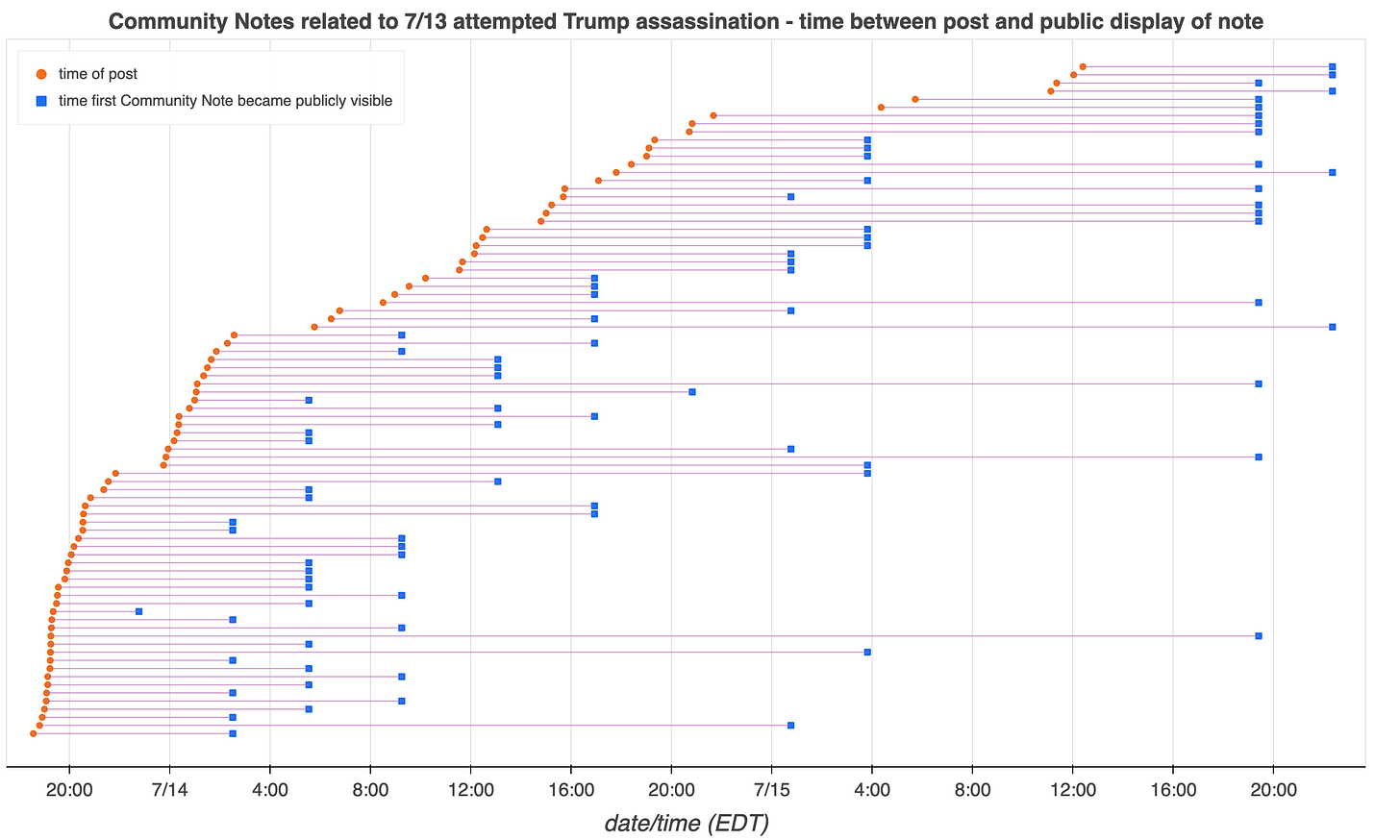

I found this analysis by Conspirador Norteño particularly interesting: it looks at X Community Notes debunking false claims about the attempted assassination. Only about 9% of the 1,000+ notes that the analyst reviewed were deemed helpful and ended up attached to a tweet. On average, it took 15 hours from submission to public display (see graph below). That’s not warp speed, but it isn’t terrible either.

Also notable is that “35 of the 83 posts with visible notes (42.2%) were subsequently deleted by their authors,” suggesting Notes have some level of shaming effect.

THE LONG TAIL

Approximately 20% of Americans surveyed by Morning Consult two days after the attempted assassination of Donald Trump said “they find it credible that the shooting was staged and not intended to kill.” This included 12% of Trump’s own supporters.

It’s too early to tell whether that or multiple other conspiracy theories will stick, but misinformation was top of mind for some at yesterday’s Senate hearing on the shooting. Acting Secret Service Director Ronald Rowe Jr. had this to say:

I regret that information was not passed to Congress and the public sooner and with greater frequency. I fear that this lack of information has given rise to multiple false and dangerous conspiracy theories about what took place that day. I want to debunk these conspiracies today by sharing the following confirmed details.

Forty minutes later, Senator Amy Klobuchar did not seem convinced those details had been enough:

For the people in my state that keep asking me: I just don’t get how [the shooter] got on the roof — I know we’ve gone through great details and a lot of examination — could you just give a minute on what went wrong and how you think it can be fixed? Because I think it’s just going to help to dispel the conspiracy theories. There are some people that think it didn’t really happen, which of course is completely ridiculous, it did. There are some people that think all kinds of conspiracies went on within the government, which is also false.

SPYING THE FACT-CHECKERS

Brazilian police have arrested five individuals tied to a clandestine spying ring run between 2019 and 2022 within ABIN, the country’s intelligence agency. The country’s Supreme Court released documents claiming that the criminal activity was aimed at obtaining politically advantageous information and producing disinformation dossiers to attack those perceived as rivals of the administration of Jair Bolsonaro. Among the targets of the illicit investigations were fact-checking organizations Aos Fatos and Lupa.

DISSING THE CHECKMARK

The European Commission has accused X of violating the Digital Services Act. Top among the EU’s complaints is the verified checkmark, which “does not correspond to industry practice and deceives users” because, among other things, it can be purchased by “motivated malicious actors.” The platform can now defend itself; confirmed non-compliance could trigger fines up to 6% of worldwide annual turnover.

DEFIANCE CLEARS THE SENATE

The DEFIANCE Act was approved by unanimous consent in the US Senate on July 23. The bill is now in the hands of the House of Representatives, where Congresswoman Alexandria Ocasio-Cortez is the lead sponsor. If approved in its current state, the law would give victims of nonconsensual deepfake porn the right to sue individuals who “knowingly produced or possessed the digital forgery with intent to disclose it, or knowingly disclosed or solicited the digital forgery.” While I keep my fingers crossed for a rare show of bipartisanship in Congress, I’ll also acknowledge the bill may face legal challenges if approved.²

DOWN THE DOPPELGÄNGER STACK

Following a Correctiv investigation, two European hosting companies have suspended multiple accounts tied to the Russian influence operation Doppelgänger. The services hosted domains used to spoof reliable news outlets and spread pro-Kremlin talking points to Western audiences on social media. (For a closer look at the operation’s infrastructure of redirects and fake geolocation, take a look at this analysis.)

FACE FRAUD FACTORY

From 404 Media: “An underground site is selling videos and photos of real people to bypass selfie checks for online services. In essence, these individuals have been turned into stock models for services specifically designed for fraud.” Cool, cool, cool.

VICTIM SCAMS VICTIMS

Must-read piece by the Wall Street Journal on the scam farms of Southeast Asia, which employ “hundreds of thousands” in a state of “forced criminality.” The WSJ profiles one such victim-criminal who shared this awful story of a man he was forced to scam:

One Pakistani man, who had a wife and four children, became obsessed with the woman Billy portrayed and wrote her long essays that read like desperate love poems. When Billy stopped responding, he said the man sent him a video of himself throwing acid on his face.

Earlier this month, UN official Benedikt Hofmann described a busted scam farm that employed 700 people north of Manila. Hofmann said these all-purpose “criminal service providers” sell “cybercrime, scamming and money laundering services, but also data harvesting and disinformation.”

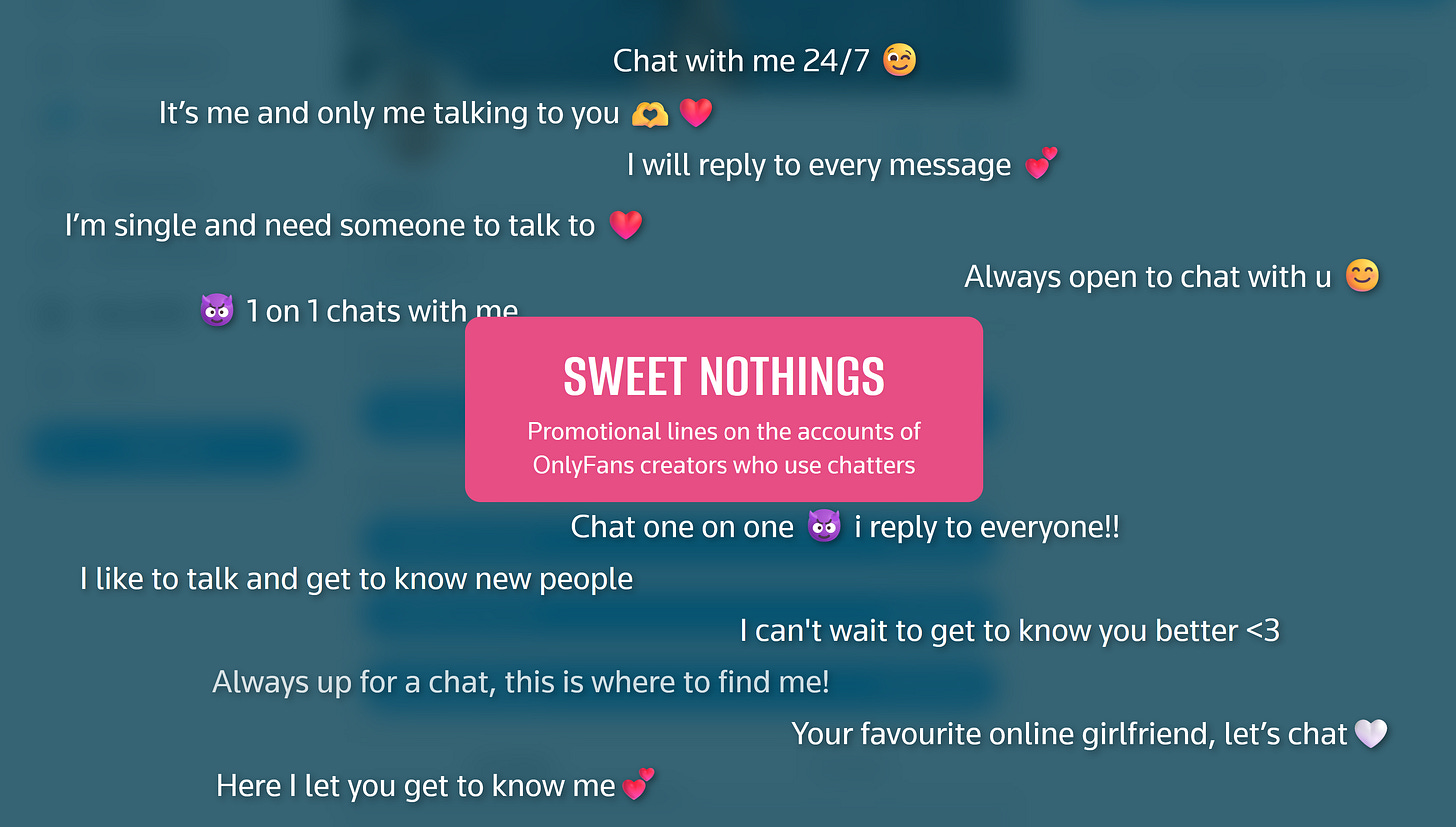

LONELYFANS

You might remember from FU#3 that OnlyFans creators employ underpaid chatters to do the dirty work of engaging with their horny fans. Well, you’re going to want to read this blockbuster Reuters follow-up into the practice that relies on interviews with 15 chatters. It does seem like only a matter of time before OnlyFans ends up having to rein in this practice.

Meanwhile, the platform’s success seems to be encouraging deception elsewhere. Wired reports that several alt-weeklies appear to be running AI-generated sponsored content reviewing OnlyFans performers.

JANKOWICZ V FOX NEWS

The defamation lawsuit that Nina Jankowicz, the former director of the Department of Homeland Security’s Disinformation Governance Board, filed against Fox News has been dismissed. District judge Colm Connolly concluded that the claims aired by the network were either not about Jankowicz, not false, or clearly labeled as opinions. Jankowicz’s lawyers told Reuters that they would appeal.

Headlines

- Fake video of threat to Olympic Games appears to be from Russia, researchers say (NBC News)

- Ozy Media, CEO Convicted of Fraud After Staged Goldman Call (Bloomberg)

- Chum King Express (The Verge)

- ‘I Need to Identify You’: How One Question Saved Ferrari From a Deepfake Scam (Bloomberg)

- Un año de libertad vigilada para 15 menores de Almendralejo por manipular imágenes de niñas (El País)

- Popular Ukrainian Telegram channels hacked to spread Russian propaganda (The Record)

- Targeted Countries Should Demand Accountability from the Pentagon and Platforms for COVID Disinformation (Tech Policy Press)

- Conspiracy theories continue to mobilize extremists to violence (Institute for Strategic Dialogue)

- China deploys censors to create socialist AI (Financial Times)

- Deepfake-detection system is now live (Northwestern)

- A man, a couch, a meme (Renée DiResta)

- Beyond the headlines: On the efficacy and effectiveness of misinformation interventions (Advances)

- O grupo brasileiro de ódio a mulheres que fabrica com IA imagens pornô falsas sob encomenda (BBC)

- AI trained on AI garbage spits out AI garbage (MIT Technology Review) with AI models collapse when trained on recursively generated data (Nature)

- Goldman Sachs: AI Is Overhyped, Wildly Expensive, and Unreliable (404 Media)

- Hocked TUAW (404 Media)

- Temporary deepfake ban discussed as way to tackle AI falsehoods during Singapore election (Singapore Straits Times) with The ACLU Fights for Your Constitutional Right to Make Deepfakes (Wired)

- Foreign actors will seek to influence reshaped U.S. presidential race, says intelligence officer (Reuters)

- New Decision Addresses Meta’s Rules on Non-Consensual Deepfake Intimate Images (Oversight Board)

- Finding Fake News Websites in the Wild (arXiv)

Before you go

This is the dumbest timeline, bar none

The finest minds of my generation are making it possible for us to create out of nothing a video of a cat, with hands, drinking beer

Sydney Sweeney’s face at Samsung’s “AI 3D image” of her gives big “Thanks Ma, I love this collage you made from my high school pictures” energy

1 The selection option only worked on Chrome for me, fwiw.

2 See the “one more bill” item in FU#8 and also this Wired article on the ACLU’s attitude towards deepfake legislation. The article does suggest, however, that a very narrowly tailored law against nonconsensual intimate imagery will not face the ACLU’s opposition.
