Elon Musk is a magnet as well as a megaphone for misinformation

🤥 Faked Up #29: Tracking the Tesla Phone hoax across platforms, majorities around the world think platforms should moderate false content, and BlueSky starts banning dupes

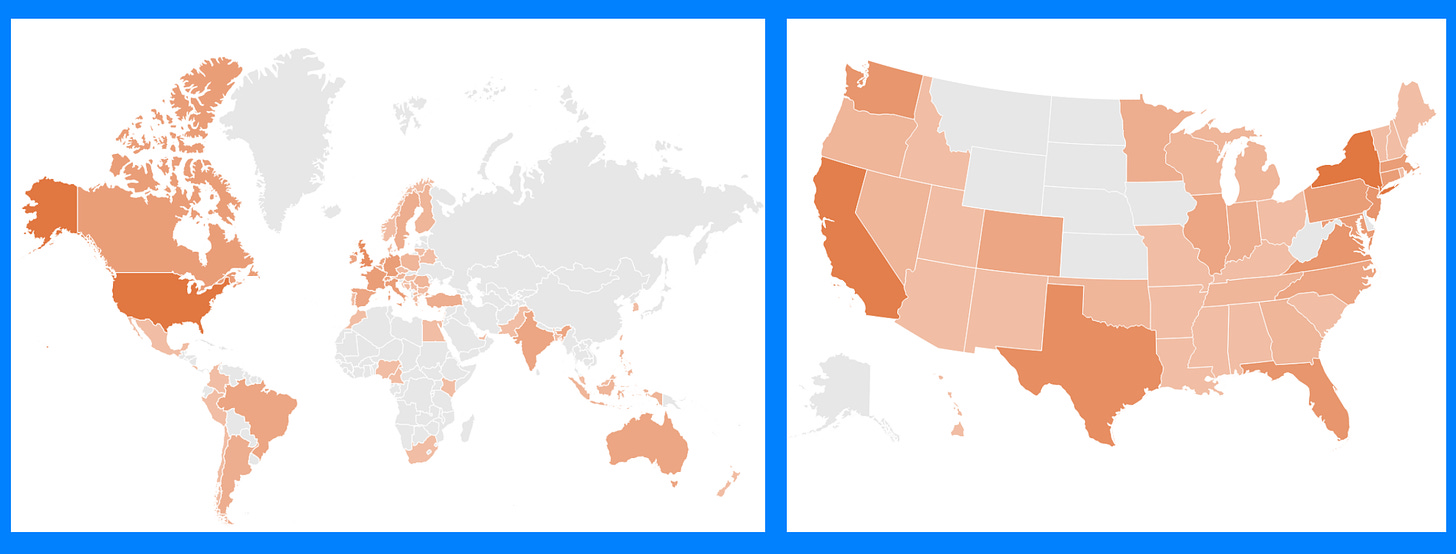

Hey! Faked Up is currently read in 40 U.S. states and 59 countries. Amazing, but incomplete. Do you know anyone in Alaska, Japan, Kazakhstan, Panama, Wyoming or any of the territories in gray below who might enjoy this newsletter? Tell them they can get six months of paid subscription for free ($36!) by emailing mantzarlis@protonmail.com an interesting story on the misinfo beat in their location.

This newsletter is an ~7-minute read and includes 55 links.

HEADLINES

Enshittification is Macquarie Dictionary’s word of the year. Rumble is suing California over an upcoming law requiring platforms to block some election deepfakes. Dinesh D’Souza apologized for the election misinformation in his movie “2,000 Mules.” Stanford Professor Jeff Hancock apologized for three AI hallucinations in his testimony supporting a Minnesota law against election deepfakes. FBI director nominee Kash Patel once said “there’s a lot of good” about QAnon. Irish far-right candidates are claiming the election they lost was rigged. TikTok removed three small influence operations targeting the Romanian presidential election.

TOP STORIES

THE MISINFO ANGLE

I did not wake up this morning a South Korea expert, unfortunately, but I did want to cautiously flag that the short-lived martial law decree banned “fake news, public opinion manipulation, and false propaganda” while placing “all media and publications” under control of the government. President Yoon Suk Yeol has a history of labeling any reporting that is critical of or embarrassing to him as “fake news.” His centrist predecessor’s plans to regulate misinformation had also come under fire in 2021 for restricting press freedoms and were ultimately shelved.

MUSK IS MEGAPHONE AND MAGNET

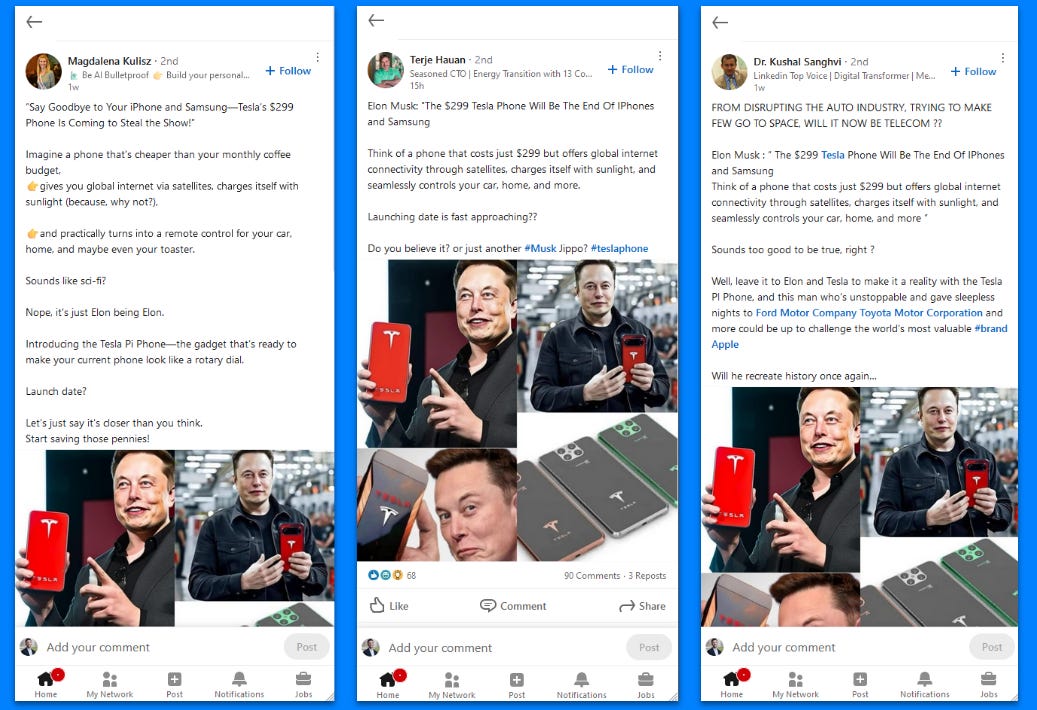

Last week on LinkedIn, just as I was about to post some braggadocious update about a minor career accomplishment, I was served a post by an Italian creative director claiming Elon Musk was about to launch a new phone.

The post cites Musk as saying “the Tesla phone will be the end of iPhone and Samsung.” The device would allegedly cost $299 and be solar powered.

Setting aside that at least two of the four images are patently AI-generated, the claim is completely false. On Joe Rogan’s podcast a month ago, Musk said “No, we’re not doing a phone […] We could do a phone. The operating system of Tesla is Linux-based but we’ve written a massive amount of software on top of that. So like probably Tesla is in a better position to create a new phone that’s not Android or iPhone than maybe any company in the world, but it’s not something we want to do unless we have to or something” (h/t Vera Files).

This didn’t stop the hoax from getting shared widely across LinkedIn. One poster even wrote a follow-up hustle post about going viral with the hoax (she has no regrets).

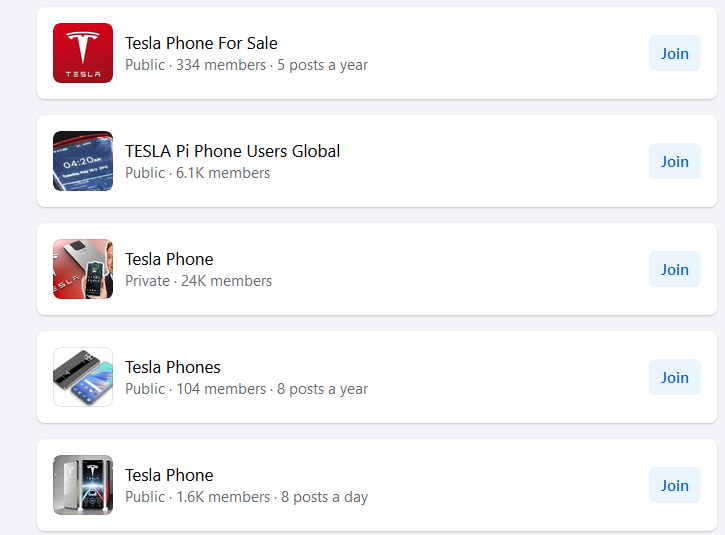

Of course, it didn’t stop on LinkedIn. Facebook, the zombie social network, has several groups dedicated to the phone and its users (which, to be clear, do not exist).

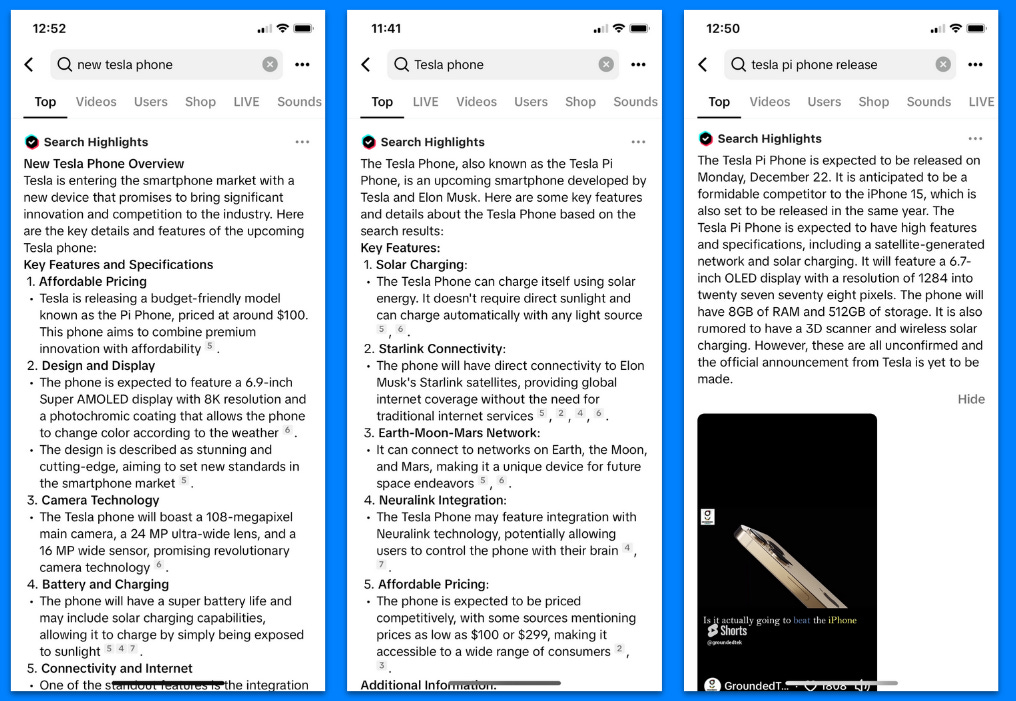

The Tesla Phone is also all over TikTok, which oh-so-helpfully provides AI-generated search highlights summarizing made-up features such as Starlink Connectivity, Earth-Moon-Mars networking and Neuralink Integration.

Heck, the hoax was even mildly popular on BlueSky!

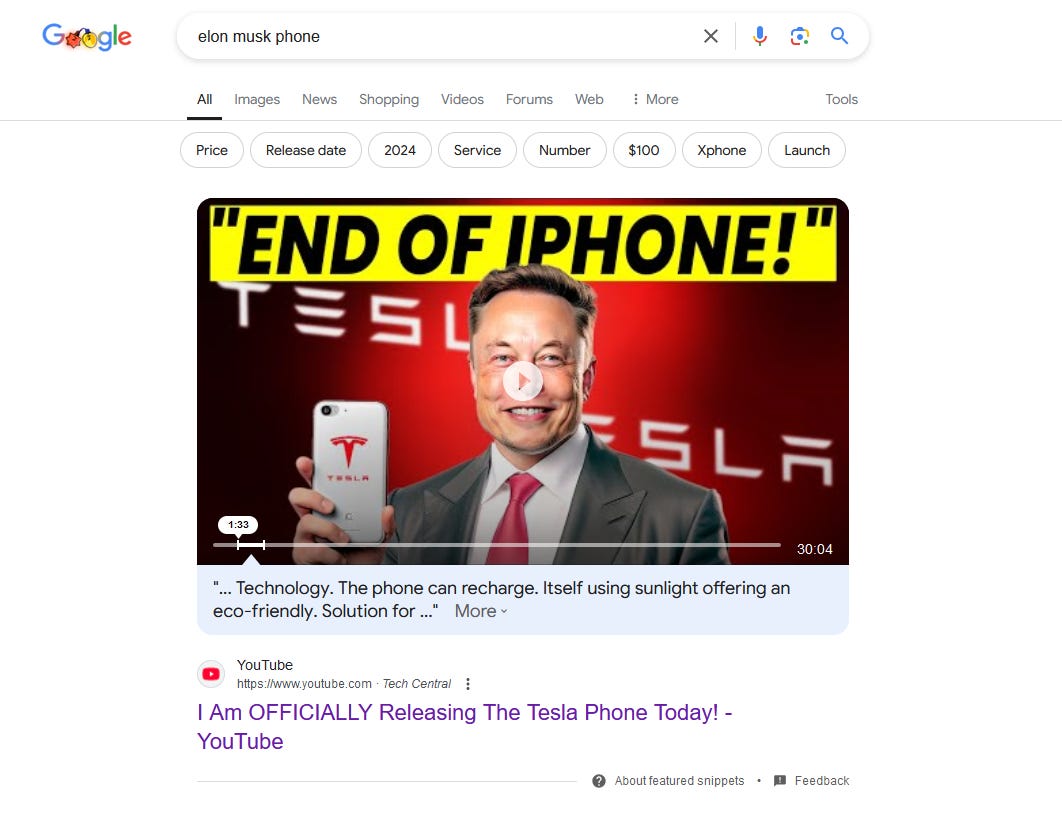

The Tesla Phone also thrives on Google products. Related queries spiked in Google Search over the past week, and a search for [elon musk phone] returns a highlighted, largely AI-generated YouTube video that spends 30 minutes reviewing the made-up features of the nonexistent phone.

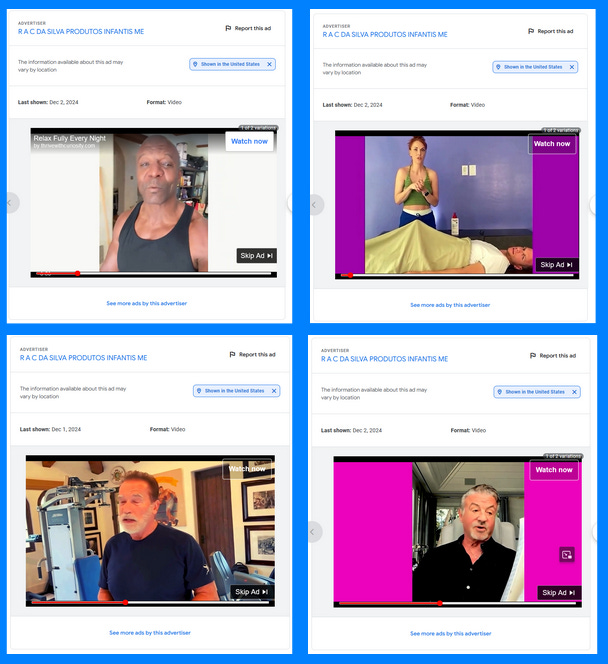

(As an aside, YouTube is monetizing this hoax video, including through an ad for an alleged erectile dysfunction cure called Prolong Power. The company behind that ad was running at least 300 ads for the supplement, including deepfaked endorsements by Arnold Schwarzenegger, Mike Tyson, Sylvester Stallone and Terry Crews, until we asked Google about it.¹ So deepfake Elon Musk clickbait was used to sell deepfake Schwarzenegger endorsements of shady supplements. That’s 2024 in a nutshell for you.)

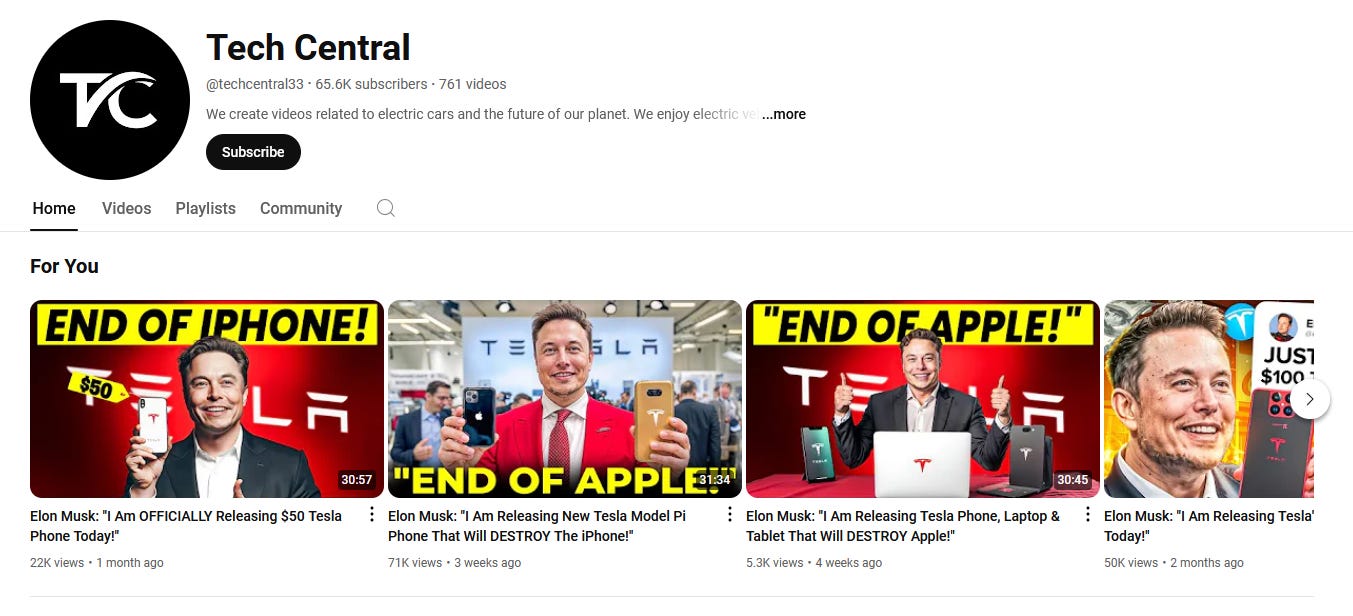

But let’s get back to Musk’s phone. The channel in question, Tech Central, has posted multiple versions of this hoax in the past few months.

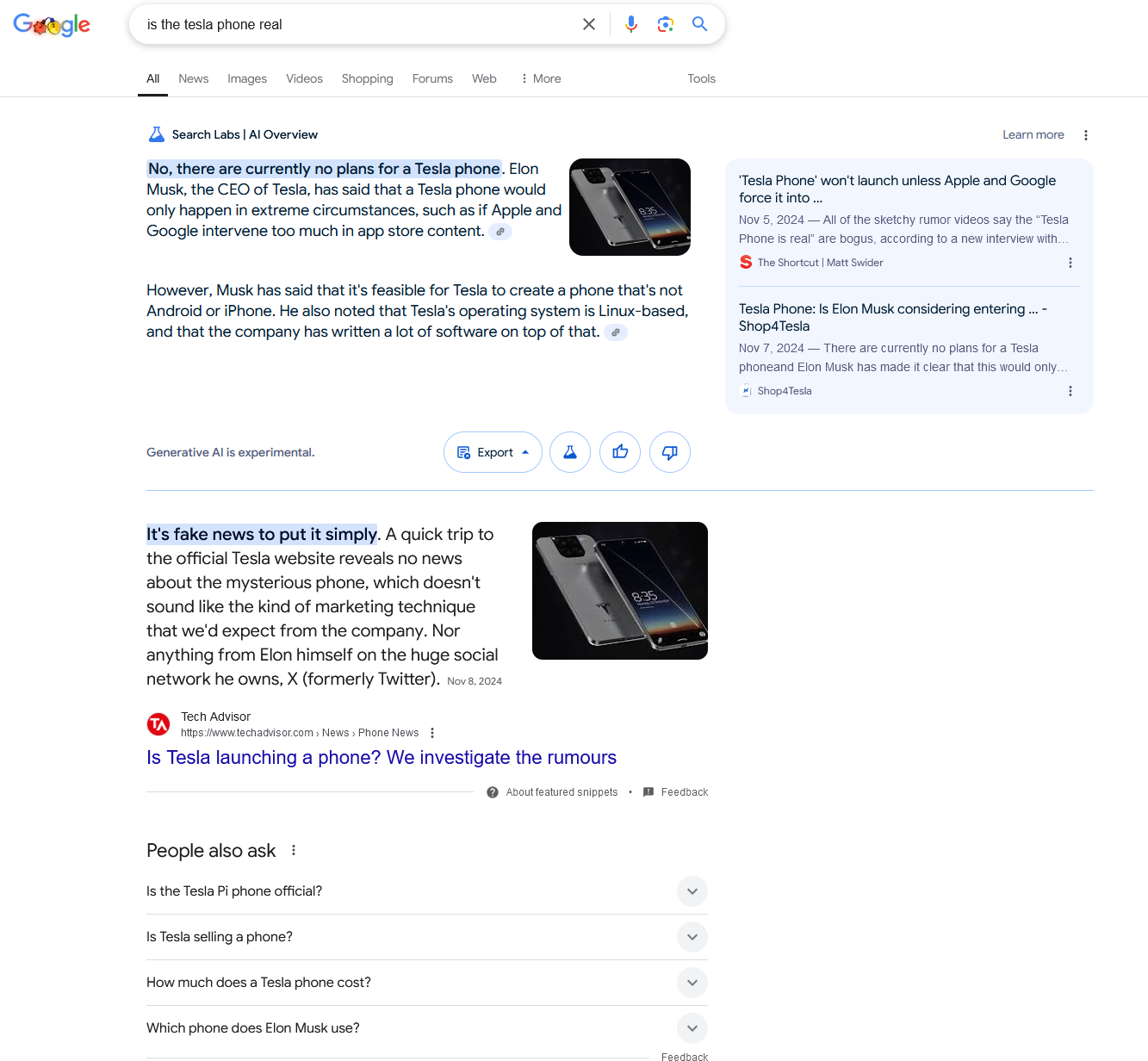

Trying to verify on Google Search whether the phone is real is complicated by the search engine’s AI Overview feature. While [is the tesla phone real] debunks the misinformation, other queries do not.

[tesla pi phone details], for instance, gullibly reheats all the outrageous claims made about the Tesla Phone on low-quality sites around the web and serves them in a single summary.

The fake Tesla Phone is on the lower end of the harm spectrum for a falsehood (until people start buying fake ones, I guess). But it serves as a reminder that Musk is both a megaphone and a magnet for misinformation.

He has spread false claims, most notably about undocumented voters swaying the US election, and rolled back most misinformation moderation on his platform X.

And yet he is also, if not a victim, a frequent target of misinformation. Over the past few weeks alone, fact-checkers have debunked claims that the billionaire bought CNN, launched a $10,000 Tesla house, and sued Whoopi Goldberg.

Musk has been deepfaked into thousands of crypto scam ads. He also frequently features in what can only be termed “fan non-fiction”: posts showing him accomplishing incredible feats, which go viral on Meta around the world.

His role as a key adviser to U.S. president-elect Donald Trump means Elon Musk’s centrality to the misinfo-generating complex is bound to continue well into 2025.

A PLATFORM’S RESPONSIBILITY

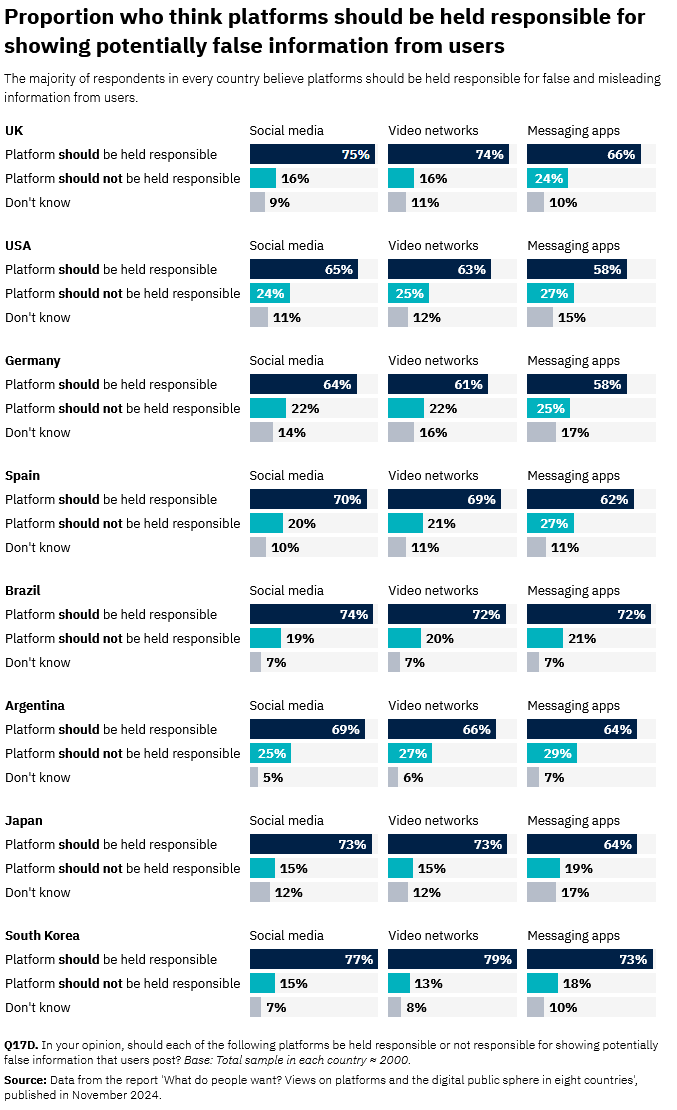

The Reuters Institute for the Study of Journalism asked ~2,000-strong representative samples of the populations of eight countries around the world (Argentina, Brazil, Germany, Japan, South Korea, Spain, the UK, and the USA) a range of questions about the role of digital platforms in contemporary media environments.

69% of respondents thought platforms have made spreading misinformation easier, with only 11% believing the contrary. And across every single country surveyed, wide majorities believe that platforms should be held responsible for this.

Of course, misinformation can sometimes be in the eye of the beholder. But remember this report the next time a handful of American commentators and elected officials suggest that content moderation is unwanted censorship.

CHECKING IN ON THE BLUESKY DUPES

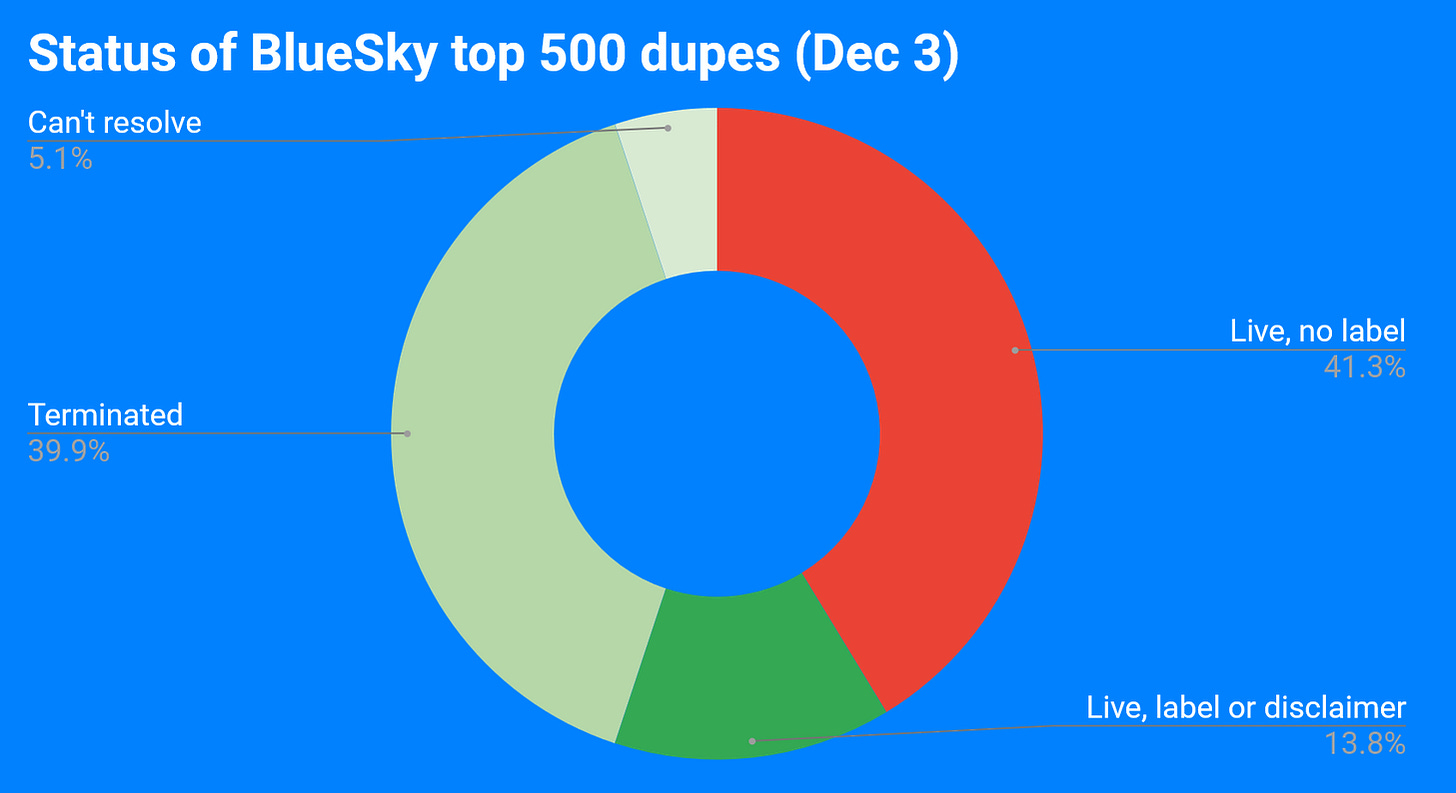

It was great to see a bunch of media outlets pick up my findings last week about BlueSky’s verification challenges. It was even better to see the platform update its relevant policy. In a thread, the BlueSky Safety Team said the new policy was “more aggressive” and that “impersonation and handle-squatting accounts will be removed.” Their community guidelines don’t really define impersonation, but based on this post I am assuming that an identical dupe of a real account that doesn’t clearly signal it is a parody or fan account will be removed.

With that in mind, I returned to my list of dupes from last week to see how capably the ban hammer has gone down on them. The results are mixed.

BlueSky has indeed terminated 55 of the 139 accounts in my sample. Another 7 “can’t resolve,” because they have changed their handles — presumably to avoid being banned for impersonation.

But of the accounts that are still on the platform, only 19 carry a label or some form of disclaimer. The remaining 57 doppelgänger accounts (41% of the total, and the largest subset of them all) are still live and unlabeled.

This includes some notable accounts, such as:

- An account impersonating New York congresswoman Alexandria Ocasio-Cortez, which recently posted that she has “big news coming soon #2028 ;)” At least a couple of replies appear to take it at face value.

- A duplicate account for British journalist Emily Maitlis, which has more than 9,500 followers.

- An impostor account of American media analyst Kat Abughazaleh, which is still up despite trying to manipulate BlueSky’s verification system by connecting its profile to a website identical to the legitimate account’s handle.

Cumulatively, these unlabeled dupes have 23,679 followers. Not huge, but not trivial. If someone from BlueSky T&S is reading, I’m happy to share my full list for your review (though I doubt it’s anything you haven’t seen already).
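For anyone who wants to double-check the breakdown above, here is a minimal Python sketch that tallies the four categories reported for the 139-account sample. The counts come straight from the text; the category labels and variable names are illustrative. The four reported categories add up to 138, so the sketch also surfaces the one account that the breakdown does not place anywhere.

```python
# Counts as reported in the text; the dictionary keys are illustrative labels.
SAMPLE_SIZE = 139

reported = {
    "terminated": 55,
    "handle changed (can't resolve)": 7,
    "live, labeled": 19,
    "live, unlabeled": 57,
}


def share(count: int, total: int = SAMPLE_SIZE) -> float:
    """Return count as a percentage of the sample, rounded to one decimal."""
    return round(100 * count / total, 1)


# Percentage of the full sample for each reported category.
shares = {label: share(n) for label, n in reported.items()}

# Accounts in the sample that none of the reported categories covers.
unaccounted = SAMPLE_SIZE - sum(reported.values())

for label, n in reported.items():
    print(f"{label}: {n} ({shares[label]}%)")
print(f"not placed in any reported category: {unaccounted}")
```

The unlabeled share comes out at 41.0%, matching the figure quoted above, and the unlabeled group is indeed the largest of the four.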

OUTRAGEOUS AND FALSE

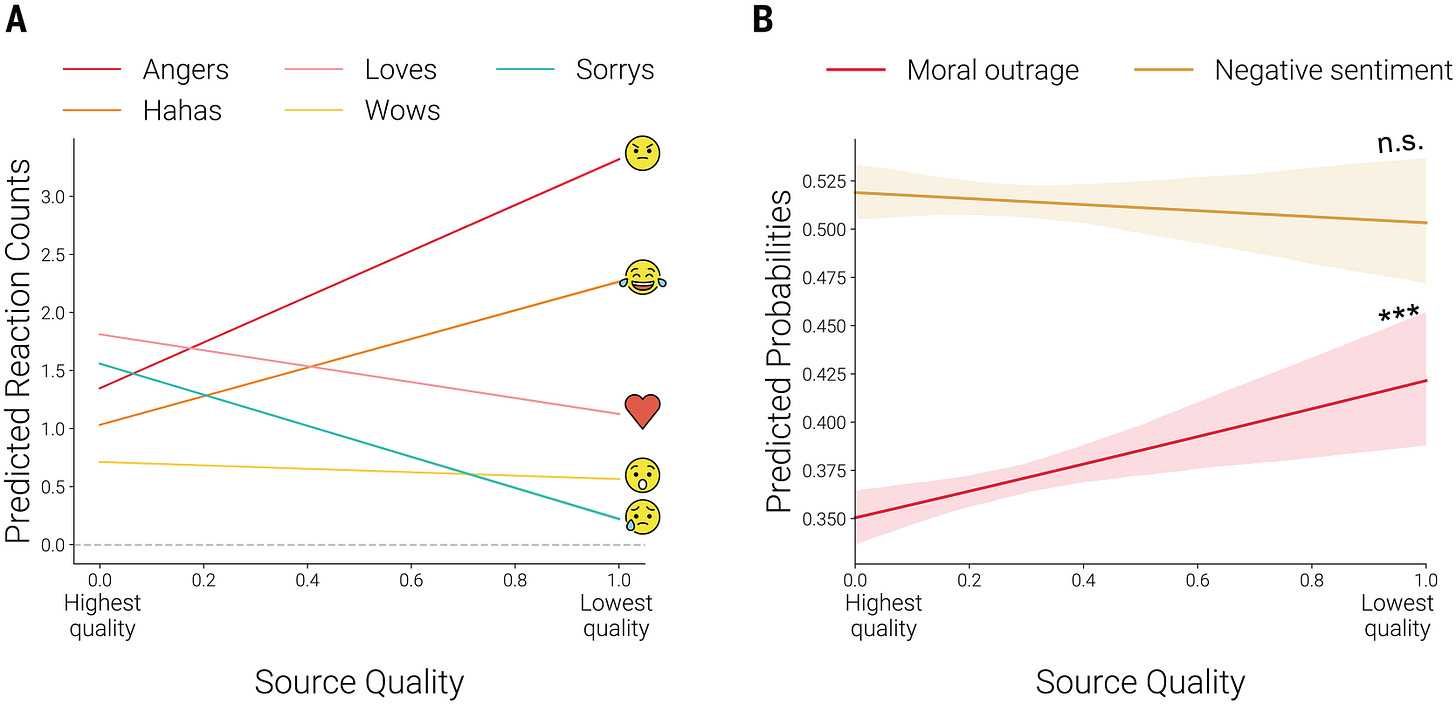

I know this is the definition of confirmation bias, but it remains nice to see a study that makes intuitive sense. In Science, researchers at Northwestern, Princeton and St John’s universities conclude that, by and large, online misinformation exploits outrage to reach its audiences. They ran eight studies across Facebook and Twitter data that found “misinformation sources evoke more outrage than do trustworthy news sources” and “outrage facilitates the spread of misinformation at least as strongly as trustworthy news.”

“LESS THAN 1%”

Meta claims that in this year’s elections in the US, Bangladesh, Indonesia, India, Pakistan, the EU Parliament, France, the UK, South Africa, Mexico and Brazil “ratings on AI content related to elections, politics and social topics represented less than 1% of all fact-checked misinformation.”

From my monitoring and other reporting, it does look like AI in this year’s elections was, with notable exceptions, used primarily for memes rather than misinformation. But it’d be great to go beyond one fascinating factoid and get the full context. Assuming “fact-checked misinformation” means content rated as part of Meta’s Third-Party Fact-Checking Program, I’d want to know:

- Is the presence of AI in the fact-checked content detected by Meta or flagged by fact-checkers in their reporting?

- Fact-checkers can flag more than one post as containing the same misinformation (and Meta has publicly said it does fuzzy matching as well to find other links). Is the 1% based on the total number of posts flagged or does it exclude duplicates?

- Is the 1% weighted on the reach or engagement of the misinformation?

- Was most of the fact-checked AI content audio, image, video or text?

I sent these questions out to Meta and will update the post if I hear back. I will also try to find other ways to quantify the share of AI content in 2024 election misinformation (perhaps through the Fact Check Explorer, or in partnership with individual fact-checking organizations). If you have ideas and want to collaborate, hit me up!
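To illustrate why the counting questions above matter, here is a minimal Python sketch of how a “share of AI content” figure can shift depending on whether it is computed per post, per unique claim, or weighted by reach. Everything in it is hypothetical: the `FlaggedPost` fields, the claim IDs and the reach numbers are invented for the example and say nothing about Meta’s actual data or methodology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlaggedPost:
    claim_id: str  # hypothetical ID grouping posts that repeat the same claim
    is_ai: bool    # hypothetical flag: does the post contain AI-generated media?
    reach: int     # hypothetical number of views


# Toy data, invented for this example only.
posts = [
    FlaggedPost("claim-a", False, 1_000),
    FlaggedPost("claim-a", False, 3_000),
    FlaggedPost("claim-a", False, 2_000),
    FlaggedPost("claim-b", True, 50_000),
    FlaggedPost("claim-c", False, 4_000),
]


def share_by_posts(posts: list[FlaggedPost]) -> float:
    """Share of AI content counting every flagged post, duplicates included."""
    return sum(p.is_ai for p in posts) / len(posts)


def share_by_unique_claims(posts: list[FlaggedPost]) -> float:
    """Share of AI content counting each distinct claim once."""
    claims: dict[str, bool] = {}
    for p in posts:
        # A claim counts as AI if any post repeating it is flagged as AI.
        claims[p.claim_id] = claims.get(p.claim_id, False) or p.is_ai
    return sum(claims.values()) / len(claims)


def share_by_reach(posts: list[FlaggedPost]) -> float:
    """Share of AI content weighted by how many views each post received."""
    total = sum(p.reach for p in posts)
    return sum(p.reach for p in posts if p.is_ai) / total


print(f"per post:      {share_by_posts(posts):.1%}")
print(f"per claim:     {share_by_unique_claims(posts):.1%}")
print(f"reach-weighted: {share_by_reach(posts):.1%}")
```

On this toy data the same five posts yield 20.0%, 33.3% or 83.3% depending on the method, which is why a single headline percentage is hard to interpret without knowing how it was counted.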

NOTED

- Cloned customer voice beats bank security checks (BBC)

- Como a desinformação foi elemento central no plano golpista investigado pela PF (desinformante)

- No, l’uomo bianco non è “scomparso” dalle pubblicità dei grandi marchi (Facta.news)

- Analyzing the AI Nudification Ecosystem (arXiv)

- Improving debriefing practices for participants in social science experiments (PNAS Nexus)

- Brazil’s electoral deepfake law tested as AI-generated content targeted local elections (DFR Lab)

- Beijing’s online influence operations along the India–China border (The Strategist)

- "Better Be Computer or I’m Dumb": A Large-Scale Evaluation of Humans as Audio Deepfake Detectors (arXiv)

- Un-notorious BIG: A digital information manipulation campaign targeting French overseas departments, regions, territories and Corsica (Viginum)

¹ I shared the advertiser link with Emanuel Maiberg at 404 Media, who did a bigger analysis of the situation and reached out to Google for comment. Read his piece!
