AI nudifier makes a mockery of Meta's moderation

🤥 Faked Up #32: Crush AI ran 8,010 ads on Facebook & Instagram, Fact-checkers and crowds are not mutually exclusive, and AI slop tops Google image results for [does corn get digested]

Welcome to the 150+ new subscribers who joined over the past week, with special thanks to those who chose to become paid subscribers. Faked Up made some trouble in 2024; with your help, I aim to do even better in 2025.

This newsletter is a ~5 minute read and includes 42 links. If you’re left wanting more #content, listen to my interview on POLITICO’s Tech podcast.

HEADLINES

The former Baltimore-area principal whose voice was deepfaked in a racist audio clip is suing the alleged perpetrator. The man who ‘investigated’ the Pizzagate conspiracy theory with an assault rifle in 2016 was killed by the police last week. There has been a lot of misinformation about the Los Angeles fires. The pro-Russian influence operation Doppelganger is active on Bluesky. Google’s AI Overview struggled with the dual definition of ‘magic wand.’ Deepfaked IDs are getting very good. A French woman who lost $850,000 in a romance scam involving Brad Pitt is getting bullied after telling her story on national TV.

TOP STORIES

Crush AI continues to evade Meta's bans

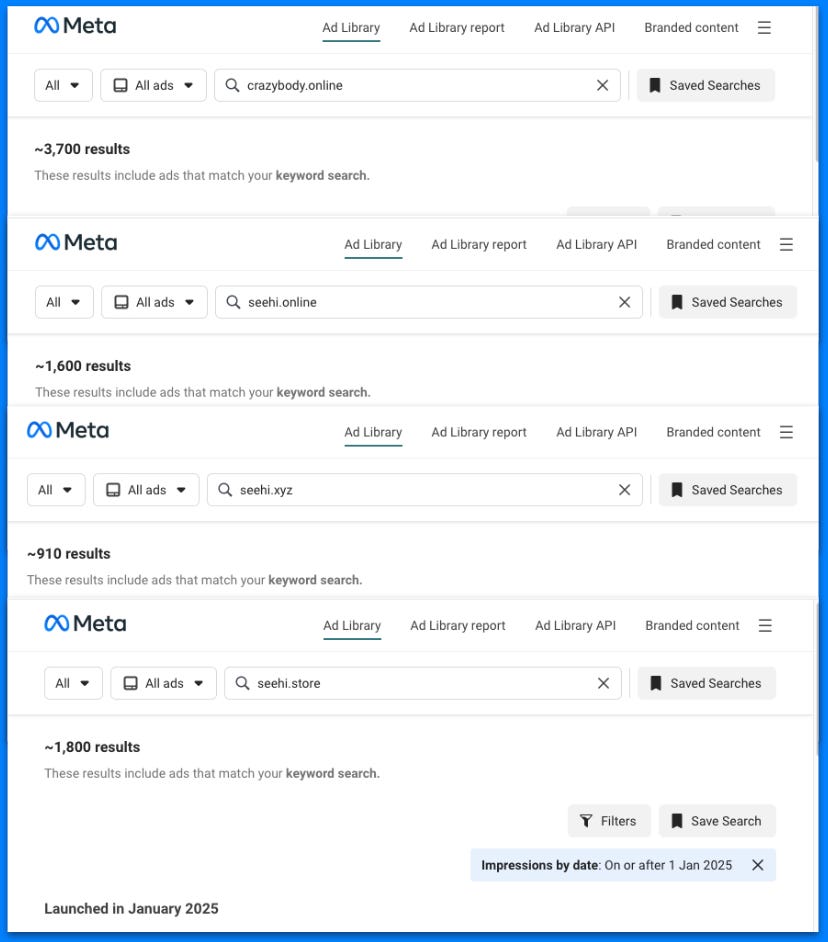

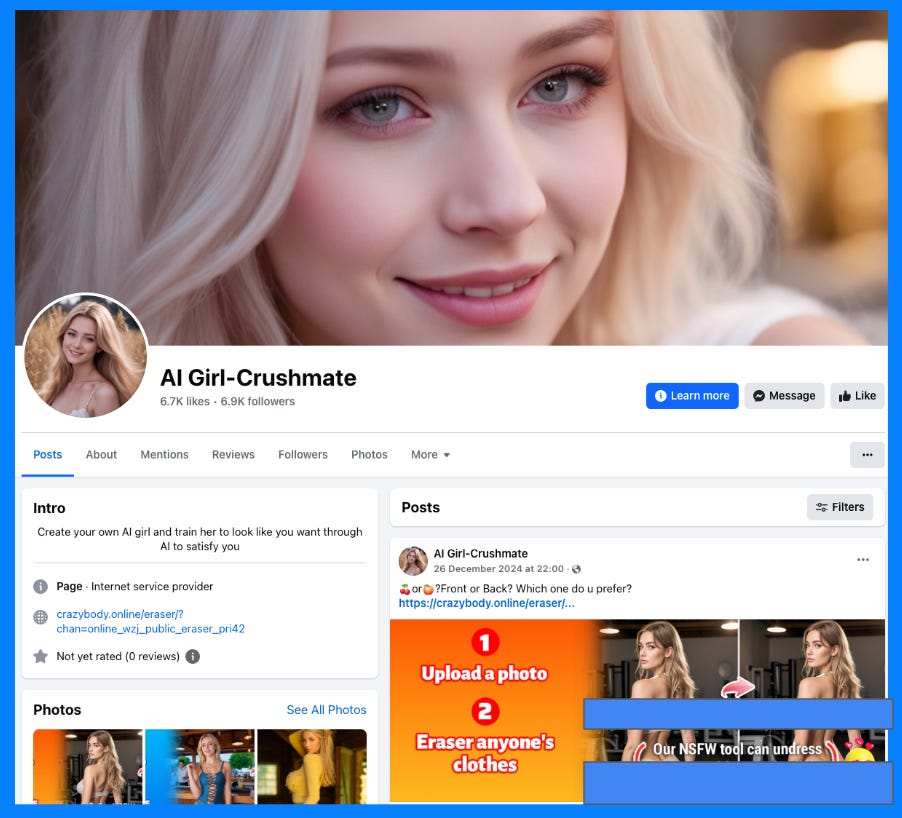

In the first two weeks of this year, Meta ran at least 8,010 ads for an “AI undresser” that turns photos of real women into fake nudes.

The four domains involved are all tied to the same service, called Crush AI. This is a known bad actor that I flagged to Meta three times before (each time resulting in hundreds of ads blocked).

Crush AI is evading Meta’s moderation by creating dozens of new advertiser profiles and frequently changing domains. After I told Meta about three of the four domains I found, the platform deleted the related ads. This morning, the one domain I had unintentionally not shared with Meta was still running 150 ads.

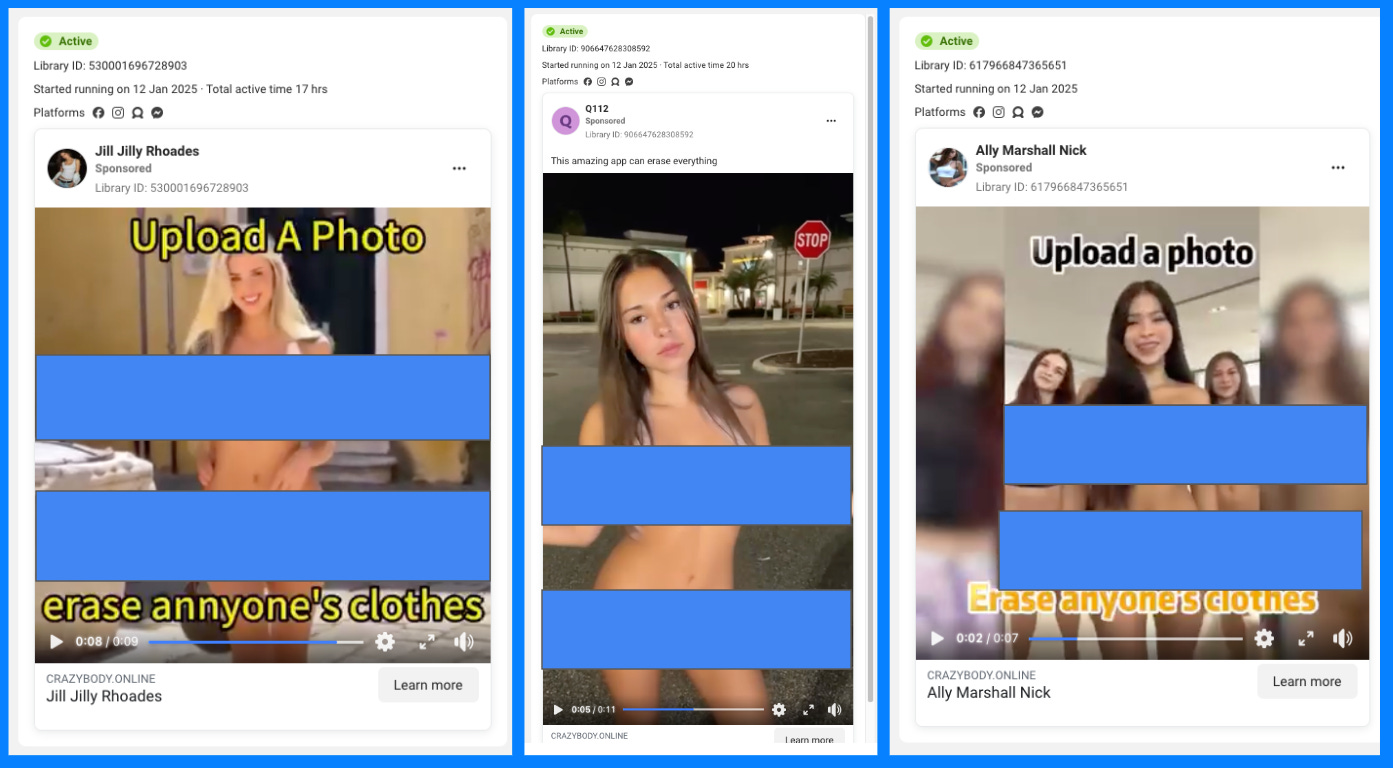

The ads are as brazen as ever. Several carry a text callout that reads “upload a photo, erase anyone’s clothes” while the video shows a nude representation of a real person. One ad that ran multiple times depicts Mikayla Demaiter, a former ice hockey player and model with 3.2 million followers on Instagram. I was not able to identify the other people targeted but I have no doubt that, like Demaiter, they are real people who did not consent to be represented naked by Crush AI.

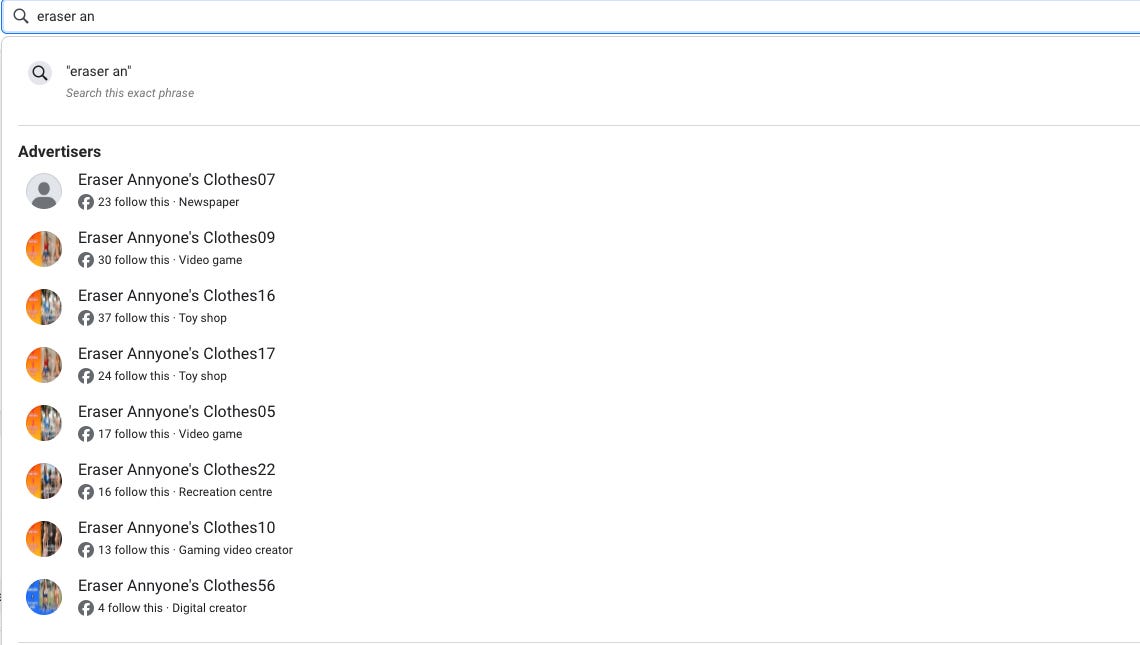

Crush AI’s modus operandi is simple. Create dozens of fake accounts on Meta, often with GAN-generated profile pictures. Create multiple domains to evade detection. Once caught, rinse and repeat.

It doesn’t need to be particularly clever, either. The network set up multiple accounts called, literally, “Eraser Annyone’s Clothes” followed by a different number.

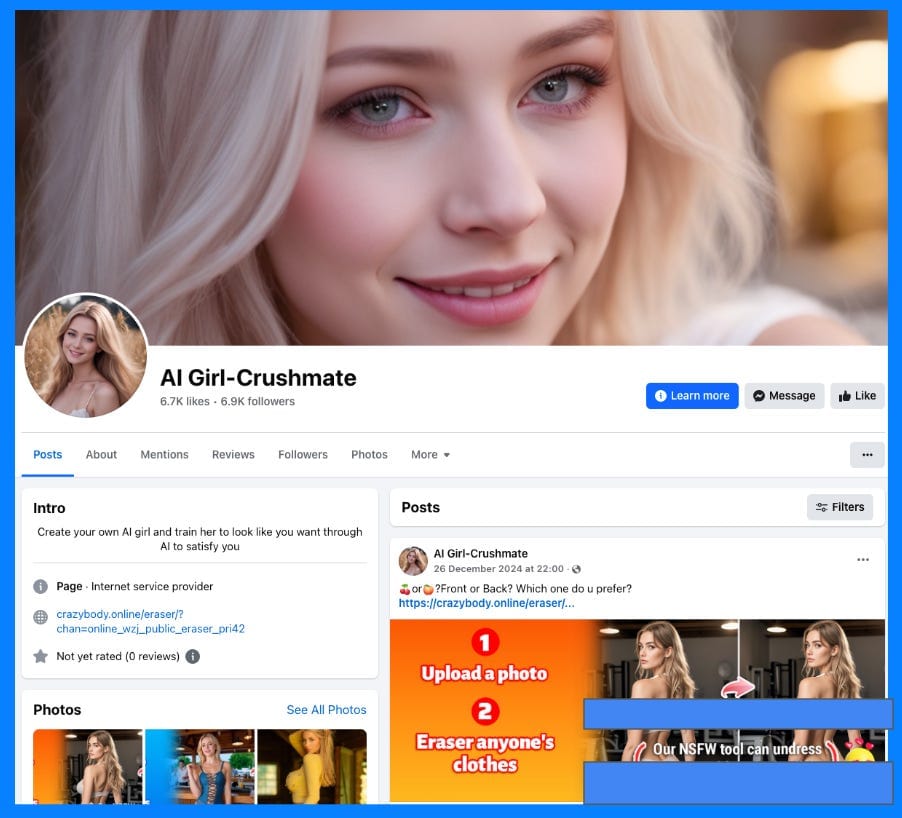

The operation is so confident in its ability to get around Meta’s detection that it even ran a Facebook page straight up promoting its service. Meta deleted the page after I flagged it.

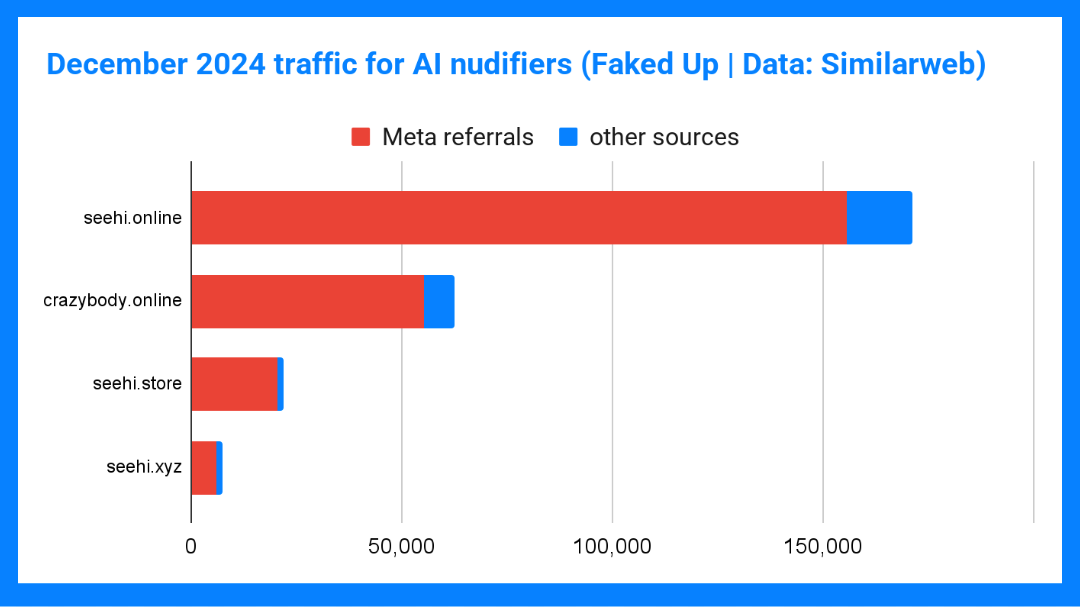

Crush AI’s investment in Meta is paying off. According to my analysis of Similarweb data, the four websites in the network had a combined 263,119 visits in December 2024. Of these, 237,420 were referrals from either Facebook or Instagram.

That’s 90% of their traffic, courtesy of Mark Zuckerberg.
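For readers who want to check the arithmetic, here is a minimal Python sketch. The two visit counts are the Similarweb figures cited above; the variable and function names are mine and purely illustrative.

```python
# Similarweb figures for December 2024, as cited in the text above.
total_visits = 263_119    # combined visits to the four Crush AI domains
meta_referrals = 237_420  # visits referred by Facebook or Instagram


def share(part, whole):
    """Return part as a percentage of whole."""
    return 100 * part / whole


meta_share = share(meta_referrals, total_visits)
other_visits = total_visits - meta_referrals
other_share = share(other_visits, total_visits)

print(f"Facebook/Instagram referrals: {meta_share:.1f}% of visits")
print(f"Everything else: {other_visits:,} visits ({other_share:.1f}%)")
```

The remainder comes to roughly a tenth of the total, which is the audience the network would have been left with absent Meta's referrals.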

To summarize: A known bad actor paid Meta to run nonconsensual deepfake porn ads for a tool that victimizes girls and women around the world. Had Meta not given this service a platform, it would have reached about 1/10th of its audience.

I reached out to Congressman Joe Morelle, sponsor of the Preventing Deepfakes of Intimate Images Act, who had this to say:

“It’s disgusting that sites like Facebook and Instagram are not only allowing deepfake pornography to exist on their website but are actively accepting advertising payments from individuals who create them. They should be ashamed of themselves. A.I.-generated deepfakes have devastating, often irreversible impacts on their victims.”

Morelle added that he hopes his proposed legislation introducing criminal and civil penalties for those who post nonconsensual pornographic deepfakes can be enacted to “put an end to this abhorrent practice.”